Understanding LLM Inference | NVIDIA Experts Deconstruct How AI Works

In the last eighteen months, large language models (LLMs) have become commonplace. For many people, simply being able to use AI chat tools is enough, but for data and AI practitioners, it is helpful to understand how they work.

In this session, you'll learn how large language models generate words. Our two experts from NVIDIA will present the core concepts of how LLMs work, then you'll see how large-scale LLMs are developed. You'll also see how changes in model parameters and settings affect the output.

Key Takeaways:

- Learn how large language models generate text.

- Understand how changing model settings affects output.

- Learn how to choose the right LLM for your use cases.

Understanding LLM Inference | NVIDIA Experts Deconstruct How AI Works

Understanding the LLM Inference Workload - Mark Moyou, NVIDIA

How Large Language Models Work

What is AI Inference?

GPUs: Explained

Exploring the Latency/Throughput & Cost Space for LLM Inference // Timothée Lacroix // CTO Mist...

Nvidia CUDA in 100 Seconds

Accelerate Big Model Inference: How Does it Work?

NVLM D 72B - Frontier Multimodal LLM - Rivals GPT-4o and Llama 405B

What are Generative AI models?

GPU VRAM Calculation for LLM Inference and Training

What is Retrieval-Augmented Generation (RAG)?

How ChatGPT Works Technically | ChatGPT Architecture

What is NVIDIA NIM? #ai #ml #llm #Python #artificialintelligence #software #coding #computerscience

Demo: Optimizing Gemma inference on NVIDIA GPUs with TensorRT-LLM

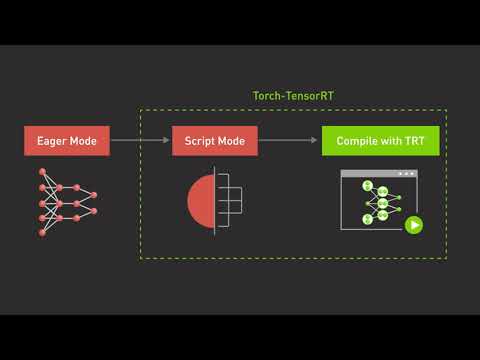

Getting Started with NVIDIA Torch-TensorRT

Deep Dive: Optimizing LLM inference

[1hr Talk] Intro to Large Language Models

All You Need To Know About Running LLMs Locally

Parameters vs Tokens: What Makes a Generative AI Model Stronger? 💪

Getting Started with NVIDIA Triton Inference Server

CPU vs GPU vs TPU explained visually

Mythbusters Demo GPU versus CPU

Demo | LLM Inference on Intel® Data Center GPU Flex Series | Intel Software

Комментарии

0:55:39

0:55:39

0:34:14

0:34:14

0:05:34

0:05:34

0:06:05

0:06:05

0:07:29

0:07:29

0:30:25

0:30:25

0:03:13

0:03:13

0:01:08

0:01:08

0:09:41

0:09:41

0:08:47

0:08:47

0:14:31

0:14:31

0:06:36

0:06:36

0:07:54

0:07:54

0:01:00

0:01:00

0:12:21

0:12:21

0:01:56

0:01:56

0:36:12

0:36:12

![[1hr Talk] Intro](https://i.ytimg.com/vi/zjkBMFhNj_g/hqdefault.jpg) 0:59:48

0:59:48

0:10:30

0:10:30

0:01:31

0:01:31

0:02:43

0:02:43

0:03:50

0:03:50

0:01:34

0:01:34

0:11:30

0:11:30