Getting Started with NVIDIA Triton Inference Server

Triton Inference Server is an open-source inference solution that standardizes model deployment and enables fast, scalable AI in production. With so many features, a natural question is: where do I begin? Watch the video to find out!

#ai #inference #nvidiatriton

How to Deploy HuggingFace’s Stable Diffusion Pipeline with Triton Inference Server

NVIDIA DeepStream Technical Deep Dive: DeepStream Inference Options with Triton & TensorRT

Production Deep Learning Inference with NVIDIA Triton Inference Server

NVIDIA Triton Inference Server: Generative Chemical Structures

Optimizing Model Deployments with Triton Model Analyzer

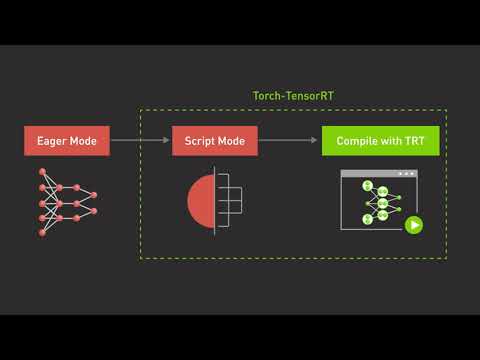

Getting Started with NVIDIA Torch-TensorRT

Deploy a model with #nvidia #triton inference server, #azurevm and #onnxruntime.

Inferencing Llama 3.1 405B right now for FREE on NVIDIA NIM

Azure Cognitive Service deployment: AI inference with NVIDIA Triton Server | BRKFP04

Development and Deployment of Generative AI with NVIDIA

Top 5 Reasons Why Triton is Simplifying Inference

Optimizing Real-Time ML Inference with Nvidia Triton Inference Server | DataHour by Sharmili

NVIDIA Triton Inference Server and its use in Netflix's Model Scoring Service

Deploying an Object Detection Model with Nvidia Triton Inference Server

2024 Ultimate Windows Gaming Performance Optimization

NVidia TensorRT: high-performance deep learning inference accelerator (TensorFlow Meets)

NVIDIA Triton meets ArangoDB Workshop

Triton Shared Computing Cluster 101 - Getting started on TSCC

Nvidia Triton 101: nvidia triton vs tensorrt?

Triton Inference Server Architecture

Auto-scaling Hardware-agnostic ML Inference with NVIDIA Triton and Arm NN
