filmov

tv

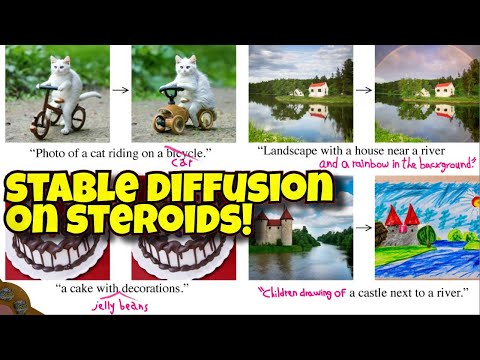

Step-by-Step Stable Diffusion with Python [LCM, SDXL Turbo, StreamDiffusion, ControlNet, Real-Time]


In this step-by-step tutorial for absolute beginners, I will show you how to install everything you need from scratch. You will create Python environments on macOS, Windows and Linux, then generate real-time Stable Diffusion images from a webcam using 3 different methods: Latent Consistency Models (LCMs) with ControlNet, SDXL Turbo, and StreamDiffusion. We will use ControlNet and image2image (essentially image2video) with only 1 or 2 inference steps, which is fast enough to run in real time!

We will also learn a hack to generate faster on MacBooks with MPS, on computers with CUDA GPUs, and even on lower-end computers that only have a CPU. Get ready to generate AI images for free, without Midjourney, without DALL-E, and without any third-party website!

Automatic1111 and ComfyUI are amazing programs, but in this tutorial we will do everything with code. So if you like to hack, this is FOR YOU. And even if you are used to Automatic1111 and ComfyUI, this is a great way to learn how to do the same things through code! Go crazy with latent diffusion, run with what you learn, and build your own projects. All code is FREE and downloadable!
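To make the device and step-count ideas above concrete, here is a minimal sketch in Python. It is not the tutorial's downloadable code. It assumes the Hugging Face `diffusers` `AutoPipelineForImage2Image` API and the public `stabilityai/sdxl-turbo` checkpoint, and the helper names (`pick_device`, `effective_steps`, `load_turbo_pipeline`, `turbo_img2img`) are hypothetical. It prefers CUDA, then MPS, then CPU, and it checks that `num_inference_steps * strength` is at least 1, because diffusers' image-to-image pipelines only run `int(num_inference_steps * strength)` of the requested steps.

```python
# Minimal sketch, not the tutorial's code. The last two functions assume
# torch + diffusers are installed; all helper names are hypothetical.


def pick_device(cuda_available: bool, mps_available: bool) -> str:
    """Prefer a CUDA GPU, then Apple Silicon MPS, then fall back to the CPU."""
    if cuda_available:
        return "cuda"
    if mps_available:
        return "mps"
    return "cpu"


def effective_steps(num_inference_steps: int, strength: float) -> int:
    """Denoising steps img2img actually runs: int(steps * strength), at most steps."""
    return min(int(num_inference_steps * strength), num_inference_steps)


def load_turbo_pipeline():
    """Load SDXL Turbo for image-to-image on the best available device."""
    import torch
    from diffusers import AutoPipelineForImage2Image

    device = pick_device(torch.cuda.is_available(),
                         torch.backends.mps.is_available())
    # float16 halves memory on CUDA; float32 is the conservative choice elsewhere.
    dtype = torch.float16 if device == "cuda" else torch.float32
    pipe = AutoPipelineForImage2Image.from_pretrained(
        "stabilityai/sdxl-turbo", torch_dtype=dtype
    )
    return pipe.to(device)


def turbo_img2img(pipe, init_image, prompt: str,
                  steps: int = 2, strength: float = 0.5):
    """One img2img pass; init_image is a PIL image (e.g. a webcam frame)."""
    if effective_steps(steps, strength) < 1:
        raise ValueError("num_inference_steps * strength must be at least 1")
    # SDXL Turbo is meant to run without classifier-free guidance.
    result = pipe(prompt, image=init_image, num_inference_steps=steps,
                  strength=strength, guidance_scale=0.0)
    return result.images[0]
```

Load the pipeline once, outside the webcam loop, then call `turbo_img2img(pipe, frame_image, "your prompt")` for each frame. Reloading the model on every frame would be far too slow for real time.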

**CODE FROM TUTORIAL**

**WINDOWS CUDA-SPECIFIC TUTORIAL (5-MIN TUTORIAL)**

**CHAPTERS**

00:00 - Demo and Overview

00:44 - Automatic1111 and ComfyUI vs Python Code

01:07 - Overview of LCM, SDXL Turbo and StreamDiffusion

01:46 - Get Inspired, Projector Demo, and Ideas

02:23 - Quick Visual Explanation of Diffusion Models

03:24 - How To Download: Git LFS, VS Code (or text editor), Python

04:44 - Creating Python Environment for LCM and SDXL Turbo

05:22 - Creating Python Environment for StreamDiffusion

07:28 - Stable Diffusion Models Installation

09:59 - Webcam code overview

14:55 - LCM, ControlNet and SDXL Turbo code overview

24:20 - Running the LCM Demo

26:41 - Running SDXL Turbo Demo

28:26 - Activating StreamDiffusion venv

29:11 - StreamDiffusion code overview

29:29 - StreamDiffusion with CUDA

30:04 - StreamDiffusion MPS hack

31:45 - StreamDiffusion script prep

32:27 - Running StreamDiffusion Demo 1

33:17 - Running StreamDiffusion Demo 2

34:20 - Running StreamDiffusion Demo 2 adding props

35:12 - Running StreamDiffusion Demo 3

35:31 - Running StreamDiffusion Demo 3 adding props

36:06 - BONUS: How To Do More Cool AI

**DOWNLOAD LINKS**

Install Python 3.11.6 for the LCM and SDXL Turbo environment

Install Python 3.10.11 for the StreamDiffusion environment

On Windows, to check which Python versions are installed, run this in Command Prompt:

"py -0"

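`py -0` lists the interpreters installed on Windows. Once an environment is active, you can also confirm from inside Python that it runs the version noted above (3.11 for LCM and SDXL Turbo, 3.10 for StreamDiffusion). This is a minimal standard-library sketch; the `REQUIRED` table and the `matches` helper are hypothetical names, not part of the tutorial's code.

```python
import sys

# Hypothetical table built from the version notes above.
REQUIRED = {
    "lcm_sdxlturbo_venv": (3, 11),
    "streamdiffusion_venv": (3, 10),
}


def matches(required, version_info=sys.version_info) -> bool:
    """True if the interpreter's major.minor equals the required pair."""
    return tuple(version_info[:2]) == tuple(required)


if __name__ == "__main__":
    print("Running Python", sys.version.split()[0], "from", sys.executable)
    for env, req in REQUIRED.items():
        status = "OK" if matches(req) else "wrong version"
        print(f"{env}: needs {req[0]}.{req[1]} -> {status}")
```

Run it with the activated environment's own `python` and check that it reports OK for the environment you meant to create.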
**CREATING ENVIRONMENTS ON WINDOWS**

[Consider creating the environments on a drive other than C:. Switch drives with something like “d:” and then run “cd (your username)\Documents”.]

For LCM and SDXL Turbo:

"py -3.11 -m venv lcm_sdxlturbo_venv"

For StreamDiffusion:

"py -3.10 -m venv streamdiffusion_venv"

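If you prefer to script this step, Python's standard-library `venv` module can create the same environment folders. This is a minimal sketch with a hypothetical `create_env` helper. Note that it always uses the interpreter that runs it, so unlike `py -3.11 -m venv`, you choose the Python version by how you launch the script.

```python
import venv
from pathlib import Path


def create_env(name: str, parent: str = ".", with_pip: bool = True) -> Path:
    """Create a virtual environment using the interpreter running this code.

    venv cannot switch Python versions, so launch the script with the version
    you want, e.g. `py -3.11 make_env.py` (make_env.py is a hypothetical name).
    """
    env_dir = Path(parent) / name
    # with_pip=True bootstraps pip through ensurepip, as `python -m venv` does.
    venv.create(env_dir, with_pip=with_pip)
    return env_dir


# Example, inside a hypothetical make_env.py:
# create_env("lcm_sdxlturbo_venv")      # run with: py -3.11 make_env.py
# create_env("streamdiffusion_venv")    # run with: py -3.10 make_env.py
```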
To activate:

For LCM and SDXL Turbo:

For StreamDiffusion:

***QUICK COMMANDS***

Ctrl + C on Mac to quit the running program

Ctrl + C on Windows to quit the running program (if this doesn’t work, try Ctrl + Pause/Break)

“ls” to list files on Mac and “dir” to list files on Windows
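Ctrl + C works because Python turns it into a `KeyboardInterrupt` exception in the running script. Below is a minimal sketch of a webcam loop that catches it so the camera and preview window are released cleanly. It assumes OpenCV (`opencv-python`, imported as `cv2`). The helper names are hypothetical, and the tutorial's own webcam code may be organized differently.

```python
def run_until_interrupted(read_frame, handle_frame, release):
    """Loop read_frame -> handle_frame until Ctrl + C or no frame, then clean up.

    Ctrl + C raises KeyboardInterrupt; catching it lets the script release the
    camera instead of dying mid-frame. Returns the number of frames handled.
    """
    frames = 0
    try:
        while True:
            frame = read_frame()
            if frame is None:  # camera closed or no frame available
                break
            handle_frame(frame)
            frames += 1
    except KeyboardInterrupt:
        print("Stopped with Ctrl + C")
    finally:
        release()
    return frames


def webcam_demo(camera_index: int = 0) -> int:
    """Show webcam frames with OpenCV until Ctrl + C (needs opencv-python)."""
    import cv2

    cap = cv2.VideoCapture(camera_index)

    def read_frame():
        ok, frame = cap.read()
        return frame if ok else None

    def show(frame):
        cv2.imshow("webcam", frame)  # a diffusion step would go here
        cv2.waitKey(1)               # gives the window time to refresh

    def release():
        cap.release()
        cv2.destroyAllWindows()

    return run_until_interrupted(read_frame, show, release)
```

Keeping the loop separate from OpenCV makes it easy to swap in a different frame source or a diffusion step without touching the cleanup logic.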

***MODELS***

***BOOK ON HOW TO USE AI FOR HACKERS AND MAKERS***

PRE-ORDER the DIY AI BOOK by my brothers and me:

The book goes deeper into how latent diffusion works, how to write modular code, and how to get it running smoothly on any system. Pre-order it for a ton of detailed, well-explained resources on anything AI!

Camera to AI with python

Realtime image2video

Realtime image to video

Video to video

Single step inference

1 step inference stable diffusion

stable diffusion image2image

realtime stable diffusion

Stable diffusion sdxl turbo

Stable diffusion LCM

Stable diffusion streamdiffusion

Super fast generative ai

Huggingface diffusers

Stable diffusion controlnet

How to animate with diffusers

Animatediff control net

Animatediff animation

Stable Diffusion animation

comfyui animation

animatediff stable diffusion

Controlnet animation

how to use animatediff

animation with animate diff comfyui

how to animate in comfy ui

prompt stable diffusion

animatediff comfyui video to video

animatediff comfyui install

animatediff comfyui img2img

animatediff vid2vid comfyui

DreamShaper

Epic Realism

comfyui-animatediff-evolved

animatediff controlnet animation in comfyui
