
Scott Clark - Using Bayesian Optimization to Tune Machine Learning Models - MLconf SF 2016


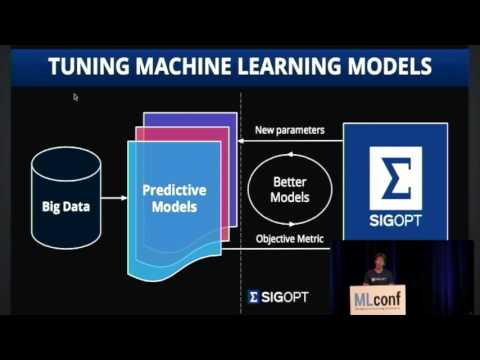

Using Bayesian Optimization to Tune Machine Learning Models: In this talk we briefly introduce Bayesian Global Optimization as an efficient way to optimize machine learning model parameters, especially when evaluating different parameters is time-consuming or expensive. We will motivate the problem and give example applications.
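
The abstract describes Bayesian optimization only at a high level. The following is a minimal, self-contained sketch under stated assumptions, not SigOpt's engine or the exact method presented in the talk. It uses a zero-mean Gaussian-process surrogate with a fixed RBF kernel (length scale 0.2, an arbitrary assumed value), the expected-improvement (EI) acquisition function, and a 1-D grid of candidate values. `expensive_objective` is a hypothetical stand-in for "train a model with this hyperparameter and return its validation score". The point it illustrates is that each iteration spends cheap computation on the surrogate so that only one expensive evaluation is made.

```python
import math

def rbf(a, b, length_scale=0.2):
    """Squared-exponential (RBF) kernel with unit signal variance; length_scale is an assumed value."""
    return math.exp(-0.5 * ((a - b) / length_scale) ** 2)

def cholesky(K):
    """Return lower-triangular L with K = L L^T for a symmetric positive-definite K."""
    n = len(K)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][i] = math.sqrt(max(K[i][i] - s, 1e-12))
            else:
                L[i][j] = (K[i][j] - s) / L[j][j]
    return L

def solve_lower(L, b):
    """Solve L x = b by forward substitution."""
    x = []
    for i in range(len(b)):
        x.append((b[i] - sum(L[i][k] * x[k] for k in range(i))) / L[i][i])
    return x

def solve_upper(L, b):
    """Solve L^T x = b by back substitution (L is lower-triangular)."""
    n = len(b)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (b[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x

def gp_fit(xs, ys, jitter=1e-6):
    """Fit a zero-mean GP to centered observations; jitter keeps K well conditioned."""
    y_mean = sum(ys) / len(ys)
    K = [[rbf(a, b) + (jitter if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    L = cholesky(K)
    alpha = solve_upper(L, solve_lower(L, [y - y_mean for y in ys]))  # alpha = K^-1 (y - mean)
    return L, alpha, y_mean

def gp_predict(xs, L, alpha, y_mean, x):
    """Posterior mean and standard deviation of the surrogate at x."""
    k_star = [rbf(x, a) for a in xs]
    mu = y_mean + sum(k * a for k, a in zip(k_star, alpha))
    v = solve_lower(L, k_star)
    var = max(1.0 - sum(vi * vi for vi in v), 1e-12)  # prior variance rbf(x, x) = 1
    return mu, math.sqrt(var)

def expected_improvement(mu, sigma, best):
    """EI for maximization: E[max(f(x) - best, 0)] with f(x) ~ N(mu, sigma^2)."""
    z = (mu - best) / sigma
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return (mu - best) * cdf + sigma * pdf

def expensive_objective(x):
    """Hypothetical stand-in for 'train a model with hyperparameter x, return validation score'."""
    return -(x - 0.3) ** 2

def bayes_opt(objective, n_iter=10):
    grid = [i / 100 for i in range(101)]  # candidate hyperparameter values in [0, 1]
    tried = [0, 50, 100]                  # grid indices of the initial design: 0.0, 0.5, 1.0
    ys = [objective(grid[i]) for i in tried]
    for _ in range(n_iter):
        xs = [grid[i] for i in tried]
        L, alpha, y_mean = gp_fit(xs, ys)
        best = max(ys)
        # Cheap step: score every untried candidate on the surrogate and keep the highest EI.
        nxt = max((i for i in range(len(grid)) if i not in tried),
                  key=lambda i: expected_improvement(*gp_predict(xs, L, alpha, y_mean, grid[i]), best))
        tried.append(nxt)
        ys.append(objective(grid[nxt]))   # the single expensive evaluation per iteration
    k = max(range(len(ys)), key=ys.__getitem__)
    return grid[tried[k]], ys[k], len(ys)

best_x, best_y, n_evals = bayes_opt(expensive_objective)
print(f"best x = {best_x:.2f}, score = {best_y:.4f}, evaluations = {n_evals}")
```

The design choice to note is the split between the surrogate and the objective: scoring 101 candidates on the Gaussian process is cheap, while `objective` is called only 13 times in total (3 initial points plus 10 iterations). Real tools also fit the kernel hyperparameters and search continuous, multi-dimensional spaces, which this sketch deliberately leaves out.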

We will also talk about how we developed a robust benchmark suite for our algorithms, covering test selection, metric design, infrastructure architecture, visualization, and comparison with other standard and open-source methods. We will discuss how this evaluation framework lets our research engineers make changes to our core optimization engine quickly and with confidence.
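
A benchmark of the kind described above needs, at minimum, test functions with known optima, a metric, and a way to run several optimizers under the same evaluation budget. The following is a hypothetical harness, not the suite from the talk. It assumes the standard Branin function as the test problem and uses best-so-far regret (best value found minus the known global minimum), averaged over random seeds, as the metric. Random search and a coarse grid search are stand-in baselines; the `optimizer(budget, rng)` generator interface is an assumption of this sketch, through which an optimizer under test could be plugged in.

```python
import math
import random
from statistics import mean

BRANIN_MIN = 0.397887  # known global minimum value of the Branin function
BOUNDS = [(-5.0, 10.0), (0.0, 15.0)]

def branin(x):
    """Standard Branin test function (to be minimized)."""
    x1, x2 = x
    b, c, t = 5.1 / (4 * math.pi ** 2), 5 / math.pi, 1 / (8 * math.pi)
    return (x2 - b * x1 ** 2 + c * x1 - 6) ** 2 + 10 * (1 - t) * math.cos(x1) + 10

def random_search(budget, rng):
    """Baseline: yield `budget` points sampled uniformly inside BOUNDS."""
    for _ in range(budget):
        yield tuple(rng.uniform(lo, hi) for lo, hi in BOUNDS)

def grid_search(budget, rng):
    """Baseline: yield up to `budget` points of a regular grid (rng unused, kept for a uniform signature)."""
    side = max(2, math.isqrt(budget))
    (lo1, hi1), (lo2, hi2) = BOUNDS
    points = [(lo1 + (hi1 - lo1) * i / (side - 1), lo2 + (hi2 - lo2) * j / (side - 1))
              for i in range(side) for j in range(side)]
    yield from points[:budget]

def best_so_far_regret(optimizer, objective, budget, seed):
    """Trace of (best value seen so far - known minimum) after each evaluation."""
    rng = random.Random(seed)
    best, trace = math.inf, []
    for x in optimizer(budget, rng):
        best = min(best, objective(x))
        trace.append(best - BRANIN_MIN)
    return trace

def benchmark(optimizers, objective, budget=25, seeds=range(10)):
    """Mean final regret per optimizer, averaged over seeds (lower is better)."""
    return {name: mean(best_so_far_regret(opt, objective, budget, s)[-1] for s in seeds)
            for name, opt in optimizers.items()}

results = benchmark({"random": random_search, "grid": grid_search}, branin)
print(results)
```

Reporting the whole best-so-far trace, not just the final value, is what makes plots of "quality versus number of evaluations" possible; averaging over seeds keeps a single lucky run from dominating a comparison.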

We will end with an in-depth example of using these methods to tune the features and hyperparameters for a real-world problem, and we will present several other real-world applications.

Related videos

Using Bayesian Optimization to Tune Deep Learning Pipelines by Scott Clark

Bayesian Optimization for Hyperparameter Tuning with Scott Clark - #50

data.bythebay.io: Scott Clark, Optimizing Machine Learning Models

Scott Clark, CEO & Co-Founder, SigOpt at MLconf Seattle 2017

Boost AI Experimentation to Design, Explore, and Optimize Your Models: Summit Keynote, Scott Clark

CAM Colloquium - Scott Clark (12/6/19)

Automated Model Tuning with SigOpt - Democast #2

Building the Better, More Scalable Algorithms with SigOpt’s Scott Clark

Automated Performance Tuning with Bayesian Optimization

Tuning for Systematic Trading: Intuition Behind Bayesian Optimization

Ai4 Finance - Matt Greenwood & Scott Clark

O'Reilly AI 2019: Best Practices for Scaling Modeling Platforms

EuroSciPy 2017: Bayesian Optimization - Can you do better than randomly guessing parameters?

Comparing Bayesian Optimization to Genetic Algorithms with SigOpt

Critical Capabilities of Optimization in the Enterprise

AI for AI | How Bayesian optimisation works

Tuning 2.0: Advanced Optimization Techniques Webinar

Bayesian optimization and its applications for autonomous vehicles

Bayesian Optimization in the Wild: Risk-Averse Decisions and Budget Constraints

Bayesian Optimization

Modeling at Scale in Algorithmic Trading with SigOpt

CMSV-TOCS: Scott Clark 2013-04-09
