
Linear Algebra Derivative


In this video, I do something really cool! I calculate the derivative of a function without using calculus, using linear algebra instead. Well, almost without calculus, since I still need to know the derivatives of 1, x, and x^2. This little exercise is a beautiful illustration of the interplay between linear algebra and calculus, and it is probably how your calculator uses linear algebra to compute derivatives. Enjoy!
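The idea in the description can be sketched as follows. This sketch assumes the function is a polynomial p(x) = a + bx + cx^2, stored as the coefficient vector [a, b, c] in the basis (1, x, x^2). The basis order and the example polynomial are our own assumptions and are not necessarily what the video uses. Because differentiation is linear, it is completely determined by its action on the three basis functions: d/dx 1 = 0, d/dx x = 1, d/dx x^2 = 2x. Those three results, written as coordinate vectors, become the columns of a 3×3 matrix D. Differentiating any such polynomial then reduces to a matrix-vector product.

```python
# Minimal sketch (assumption): polynomials p(x) = a + b*x + c*x^2 are stored
# as coefficient lists [a, b, c] in the basis (1, x, x^2).

# Column j of D is the coordinate vector of d/dx applied to basis vector j:
#   d/dx 1   = 0    -> [0, 0, 0]
#   d/dx x   = 1    -> [1, 0, 0]
#   d/dx x^2 = 2x   -> [0, 2, 0]
D = [
    [0, 1, 0],  # coefficient of 1 in the derivative
    [0, 0, 2],  # coefficient of x in the derivative
    [0, 0, 0],  # coefficient of x^2 in the derivative
]


def mat_vec(matrix, vector):
    """Multiply a matrix (given as a list of rows) by a vector (a list)."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def derivative_coeffs(coeffs):
    """Return the coefficients of p'(x) in the same basis (1, x, x^2)."""
    return mat_vec(D, coeffs)


# Example: p(x) = 3 + 5x + 4x^2, so p'(x) = 5 + 8x
print(derivative_coeffs([3, 5, 4]))  # [5, 8, 0]
```

Applying D repeatedly gives higher derivatives. On this three-dimensional space, applying D three times sends every polynomial to the zero vector, matching the fact that the third derivative of any polynomial of degree at most 2 is 0.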

Derivative of a Matrix : Data Science Basics


Matrix Derivatives: What's up with all those transposes ?

Gilbert Strang: Linear Algebra vs Calculus

260 - [ENG] derivative of xT A x quadratic form

Linear Algebra 19u: The Derivative Operator Is Defective

What is Jacobian? | The right way of thinking derivatives and integrals

Linear Algebra 15h: The Derivative as a Linear Transformation

Germany | Can you solve this ? | A Nice Algebra Problem | Mathematics Olympiad

Visual derivative of x squared

The Hessian matrix | Multivariable calculus | Khan Academy

Doing calculus with a matrix!

Linear transformations and matrices | Chapter 3, Essence of linear algebra

How REAL Men Integrate Functions

Difference Between Partial and Total Derivative

Abstract vector spaces | Chapter 16, Essence of linear algebra

Differential equations, a tourist's guide | DE1

The Derivative is Linear Calculus 1

5 simple unsolvable equations

Eigenvectors and eigenvalues | Chapter 14, Essence of linear algebra

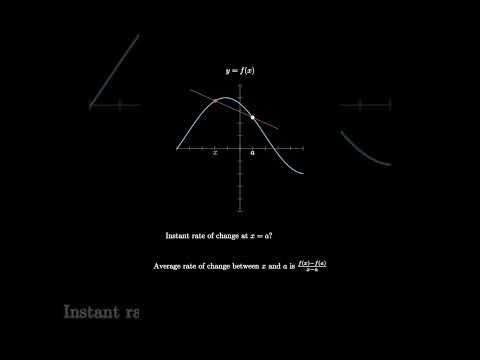

Visual Derivative Definition!

MIT Entrance Exam Problem from 1869 #Shorts #math #maths #mathematics #problem #MIT

Advanced Linear Algebra 8: The Half Derivative

Chain Rule Method of Differentiation | Derivatives
