filmov

tv

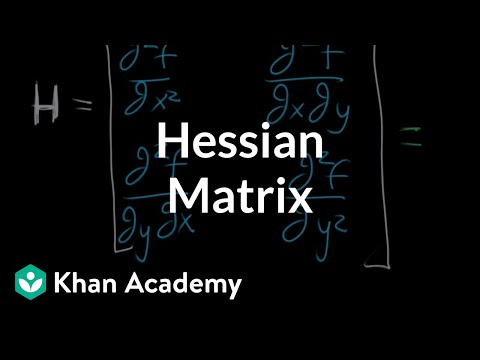

The Hessian matrix | Multivariable calculus | Khan Academy

The Hessian matrix is a way of organizing all the second partial derivative information of a multivariable function.
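As a rough illustration of what "organizing all the second partial derivative information" can look like in practice, the sketch below approximates the Hessian numerically with central finite differences. The function name `hessian`, the step size `h`, and the sample function are assumptions made for this example and do not come from the video.

```python
def hessian(f, point, h=1e-4):
    """Approximate the Hessian of f at `point` using central differences.

    f     : callable taking a list of floats and returning a float
    point : list of floats, the location where the Hessian is evaluated
    h     : finite-difference step (an assumed default, tune as needed)
    """
    n = len(point)
    H = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            def shifted(di, dj):
                # Evaluate f with coordinate i moved by di*h and j by dj*h.
                p = list(point)
                p[i] += di * h
                p[j] += dj * h
                return f(p)

            # Entry (i, j) approximates the second partial d^2 f / (dx_i dx_j).
            H[i][j] = (
                shifted(1, 1) - shifted(1, -1) - shifted(-1, 1) + shifted(-1, -1)
            ) / (4 * h * h)
    return H


# Sample function (hypothetical): f(x, y) = x^3 + 2xy + y^2.
# Its exact Hessian is [[6x, 2], [2, 2]].
def sample(p):
    x, y = p
    return x**3 + 2 * x * y + y**2


if __name__ == "__main__":
    for row in hessian(sample, [1.0, 2.0]):
        print(row)
```

For a function with continuous second partial derivatives the mixed partials agree, so the resulting matrix is symmetric up to numerical error.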

The Hessian matrix | Multivariable calculus | Khan Academy

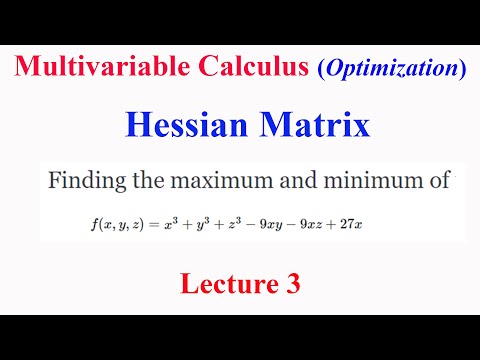

Multivariable Calculus: Lecture 3 Hessian Matrix : Optimization for a three variable function

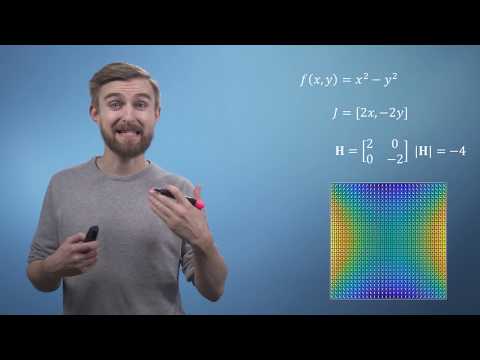

M4ML - Multivariate Calculus - 2.7 The Hessian

Multi-variable Optimization & the Second Derivative Test

261.10.7 EXTRA: The Hessian, or Why the Second Derivative Test Works

HESSIAN METHOD :Optimisation of Multivariable function #HESSIANMatrix #optimisation #NET #GATE

Classifying critical points using the Hessian determinant

Multivariable Second Derivative Test Hessian

Hessian matrix of a function

Math Multivariable calculus - The Hessian matrix

optimization of three variable function using Hessian Method unconstrained optimisation problem

Linear Algebra: Hessian Matrix

Local Extrema, Critical Points, & Saddle Points of Multivariable Functions - Calculus 3

Hessian Matrix of a function and its Examples

The Hessian matrix

The Hessian Matrix for 2nd Order Derivatives (shortened video)

The Hessian matrix and local extrema for f(x,y)

Hessian

Math 2374 Lecture 23C: The Hessian matrix

MAT 232: Construction of Hessian Matrix

Multivariable Calculus 17 | Taylor's Theorem - Examples

Second order Partial Derivative and hessian matrix of a Multivariable function

Multivariate Calculus | 7d. Hessian Matrix Introduction.

Hessian Matrix
