
Memorize this Array Time Complexity Cheat Sheet!


dynamic programming, leetcode, coding interview question, data structures, data structures and algorithms, faang

Memorize this Array Time Complexity Cheat Sheet!

Array Data Structure Tutorial - Array Time Complexity

Calculating Time Complexity | Data Structures and Algorithms| GeeksforGeeks

LeetCode is a JOKE with This ONE WEIRD TRICK

Fastest way to learn Data Structures and Algorithms

LeetCode was HARD until I Learned these 15 Patterns

8 patterns to solve 80% Leetcode problems

3 Tips I’ve learned after 2000 hours of Leetcode

DSA In Java || Sorting Algorithms in Java || Coders Arcade

Quick Guide: Time Complexity Analysis in Under 1 Minute!

Time & Space Complexity Cheatsheet for Sorting Algorithms

How to Start Leetcode (as a beginner)

Big-O Notation - For Coding Interviews

Sorting Algorithms Explained Visually

L-1.6: Time Complexities of all Searching and Sorting Algorithms in 10 minute | GATE & other Exa...

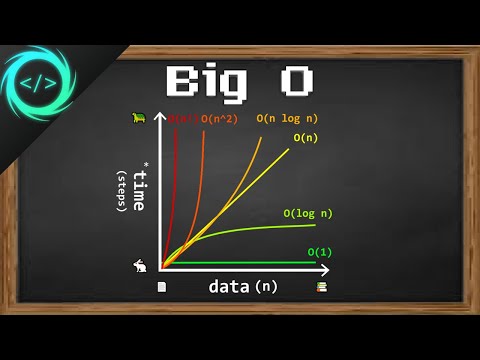

Learn Big O notation in 6 minutes 📈

I gave 127 interviews. Top 5 Algorithms they asked me.

Students in first year.. 😂 | #shorts #jennyslectures #jayantikhatrilamba

Struggle with Time Complexity? Watch this.

How I Remember ALGORITHMS | PLACEMENT PREPARATION

Memoization: The TRUE Way To Optimize Your Code In Python

My Brain after 569 Leetcode Problems

Time Complexity for Coding Interviews | Big O Notation Explained | Data Structures & Algorithms

1.11 Best Worst and Average Case Analysis

Комментарии

0:00:43

0:00:43

0:06:32

0:06:32

0:08:05

0:08:05

0:04:54

0:04:54

0:08:42

0:08:42

0:13:00

0:13:00

0:07:30

0:07:30

0:00:44

0:00:44

1:11:49

1:11:49

0:00:49

0:00:49

0:00:28

0:00:28

0:08:45

0:08:45

0:20:38

0:20:38

0:09:01

0:09:01

0:12:52

0:12:52

0:06:25

0:06:25

0:08:36

0:08:36

0:00:11

0:00:11

0:09:50

0:09:50

0:08:14

0:08:14

0:07:32

0:07:32

0:07:50

0:07:50

0:41:19

0:41:19

0:18:56

0:18:56