Parallel inferencing with KServe Ray integration

KServe is an open-source, production-ready model inference framework on Kubernetes that uses many Knative features, such as routing for canary traffic and payload logging. However, its one-model-per-container paradigm limits concurrency and throughput when multiple inference requests are sent. With the Ray Serve integration, a model can be deployed as individual Python workers, so concurrent inference requests are processed in parallel, improving overall efficiency. In this talk, we will share how you can configure, run, and scale machine learning models in Kubernetes using KServe and Ray.
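
The effect of serving one model through several workers can be illustrated with a small standard-library sketch. This is not the KServe or Ray Serve API: `ToyModel`, `serve_requests`, and the 50 ms sleep are hypothetical stand-ins, and threads are used here only to keep the example self-contained, whereas the talk describes separate Python workers. It only shows why several replicas can handle concurrent requests faster than a single one.

```python
import time
from concurrent.futures import ThreadPoolExecutor


class ToyModel:
    """Hypothetical stand-in for one model replica; not a KServe or Ray class."""

    def predict(self, x: float) -> float:
        time.sleep(0.05)  # simulated inference latency (assumed value)
        return 2.0 * x


def serve_requests(inputs, num_workers):
    """Run all inputs through num_workers replicas.

    Returns (outputs in input order, elapsed seconds).
    """
    replicas = [ToyModel() for _ in range(num_workers)]

    def handle(indexed):
        i, x = indexed
        # Round-robin assignment of requests to replicas.
        return replicas[i % num_workers].predict(x)

    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        outputs = list(pool.map(handle, enumerate(inputs)))
    return outputs, time.perf_counter() - start


if __name__ == "__main__":
    requests = [float(i) for i in range(8)]
    for workers in (1, 4):
        outputs, elapsed = serve_requests(requests, workers)
        print(f"{workers} worker(s): {elapsed:.2f}s, outputs={outputs}")
```

With one worker the eight simulated requests run back to back; with four workers they overlap, so the elapsed time should drop noticeably while the outputs stay the same.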

About Anyscale

---

Anyscale is the AI Application Platform for developing, running, and scaling AI.

If you're interested in a managed Ray service, check out:

About Ray

---

Ray is the most popular open source framework for scaling and productionizing AI workloads. From Generative AI and LLMs to computer vision, Ray powers the world’s most ambitious AI workloads.

#llm #machinelearning #ray #deeplearning #distributedsystems #python #genai

Enabling Cost-Efficient LLM Serving with Ray Serve

Serverless Machine Learning Model Inference on Kubernetes with KServe by Stavros Kontopoulos

Exploring ML Model Serving with KServe (with fun drawings) - Alexa Nicole Griffith, Bloomberg

Accelerate Federated Learning Model Deployment with KServe (KFServing) - Fangchi Wang & Jiahao C...

How We Built an ML inference Platform with Knative - Dan Sun, Bloomberg LP & Animesh Singh, IBM

Ray Serve: Tutorial for Building Real Time Inference Pipelines

Open-source Chassis.ml - Deploy Model to KServe

Fast LLM Serving with vLLM and PagedAttention

Go Production: ⚡️ Super FAST LLM (API) Serving with vLLM !!!

Deploying Many Models Efficiently with Ray Serve

Faster and Cheaper Offline Batch Inference with Ray

Kubeflow Essentials 7-2. Kserve (Architecture Concepts)

How to Create a Custom Serving Runtime in KServe ModelMesh to S... Rafael Vasquez & Christian Ka...

Serving Machine Learning Models at Scale Using KServe - Animesh Singh, IBM - KubeCon North America

modelcar-demo

Introducing Ray Serve: Scalable and Programmable ML Serving Framework - Simon Mo, Anyscale

What's New, ModelMesh? Model Serving at Scale - Rafael Vasquez, IBM

Serving Machine Learning Models at Scale Using KServe - Yuzhui Liu, Bloomberg

Accelerating LLM Inference with vLLM

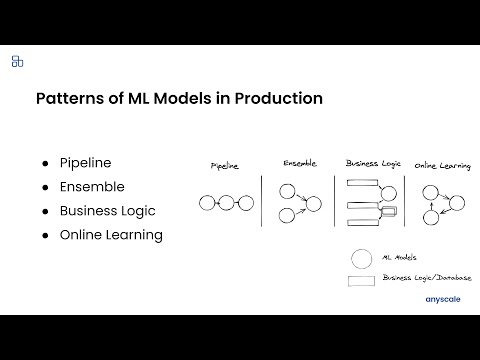

Ray Serve: Patterns of ML Models in Production

Inference Graphs at LinkedIn Using Ray-Serve

KServe: The State and Future of Cloud Native Model Serving (Kubeflow Summit 2022)

Custom Code Deployment with KServe and Seldon Core
