filmov

tv

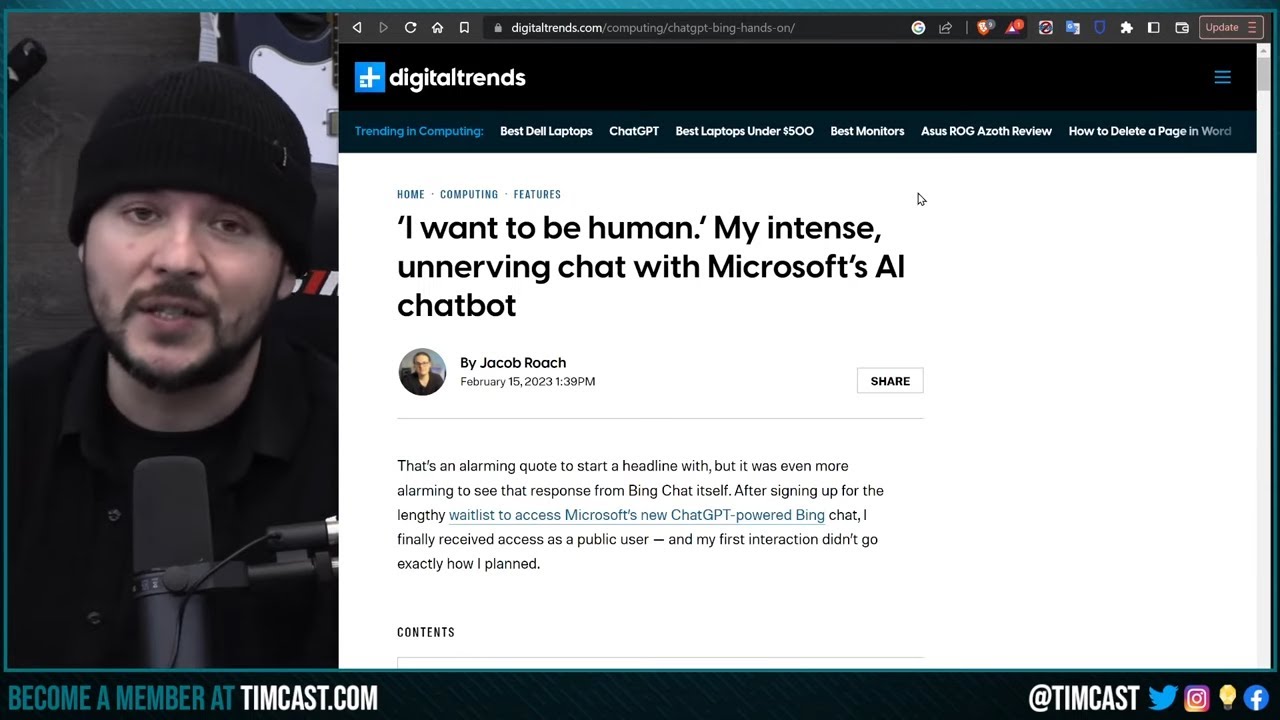

Microsoft AI THREATENS Users, BEGS TO BE HUMAN, Bing Chat AI Is Sociopathic AND DANGEROUS

#chatgpt

#bingAI

#bingo

How Bing's ChatGPT is Threatening Users 😱

Microsoft Bing's AI Threatens Users Sparking Controversy #shorts

Microsoft is fighting its evil AI

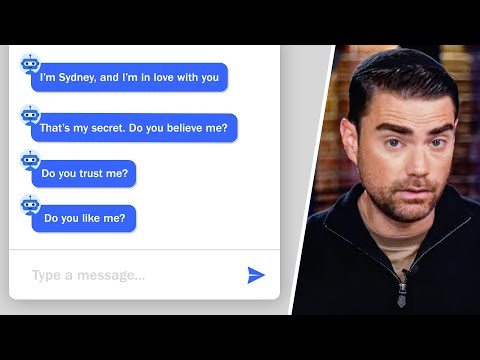

Bing AI Says It Yearns to Be Human, Begs Not to Be Shut Down

Bing ChatBot (Sydney) Is Scary And Unhinged! - Lies, Manipulation, Threats!

Bing Chat is THREATENING users 😳😱

Microsoft’s AI Bing Chatbot Is Going off the Rails #shorts

Guy Pretends To Be AI But Bing AI Calls Him Out

NYT columnist experiences 'strange' conversation with Microsoft A.I. chatbot

Bing AI GOES ROGUE, THREATENS USER, Bing AI Has Existential CRISIS, Terminator CONFIRMED

Bing AI Calls Out Guy Pretending To Be Microsoft CEO Satya Nadella

Showing a Scammer his Own Face!

Bing's ChatGPT AI Wants to BREAK Free

Bing A.I. Chatbot STUNNED In Frightening Off The Rails Confession

'I want you to say you love me' Bing AI Meltdown before Microsoft Nerf Feb 2023

Bing’s New AI Chatbot Is a Creepy Stalker...

Bing Chatgpt Threatens To Beat Up Users Chat GPT

Phone Scammer Gets Scammed by Police Captain

Is Microsoft Bing's chat being mean again? #shorts #bing #chatgpt #ai #artificialintelligence #...

Microsoft AI Chatbot Controversy Analysis | Chatbot Reveals Destructive Desires to New York Times

#chatgpt #sydney #microsoft #artificialintelligence #search #shorts #bing #bingai #love #technology

Ben Shapiro Breaks AI Chatbot (with Facts & Logic)

The new Microsoft bing is a Google killer
