CUDA Explained - Why Deep Learning uses GPUs

💡Enroll to gain access to the full course:

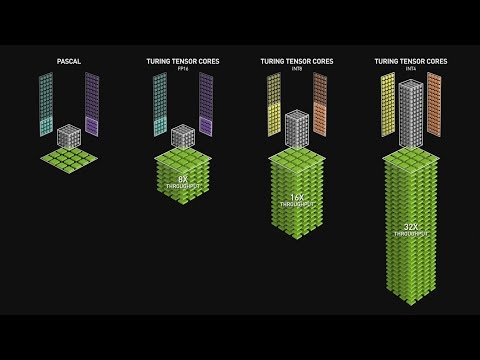

Artificial intelligence with PyTorch and CUDA. Let's discuss how CUDA fits in with PyTorch, and more importantly, why we use GPUs in neural network programming.

🕒🦎 VIDEO SECTIONS 🦎🕒

00:30 Help deeplizard add video timestamps - See example in the description

13:03 Collective Intelligence and the DEEPLIZARD HIVEMIND

💥🦎 DEEPLIZARD COMMUNITY RESOURCES 🦎💥

👋 Hey, we're Chris and Mandy, the creators of deeplizard!

👉 Check out the website for more learning material:

💻 ENROLL TO GET DOWNLOAD ACCESS TO CODE FILES

🧠 Support collective intelligence, join the deeplizard hivemind:

🧠 Use code DEEPLIZARD at checkout to receive 15% off your first Neurohacker order

👉 Use your receipt from Neurohacker to get a discount on deeplizard courses

👀 CHECK OUT OUR VLOG:

❤️🦎 Special thanks to the following polymaths of the deeplizard hivemind:

Tammy

Mano Prime

Ling Li

🚀 Boost collective intelligence by sharing this video on social media!

👀 Follow deeplizard:

🎓 Deep Learning with deeplizard:

🎓 Other Courses:

🛒 Check out products deeplizard recommends on Amazon:

🎵 deeplizard uses music by Kevin MacLeod

❤️ Please use the knowledge gained from deeplizard content for good, not evil.
