filmov

tv

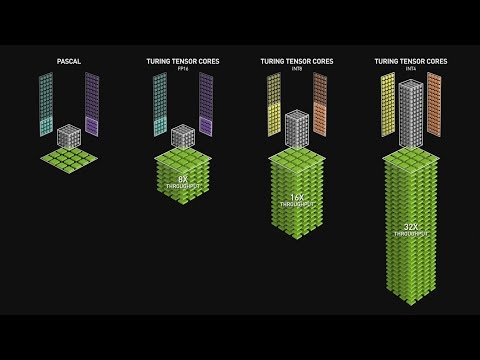

What are Tensor Cores?

MUSIC:

'Orion' by Sundriver

Provided by Silk Music

What are Tensor Cores?

Tensor Cores in a Nutshell

What are Tensor Cores?

Zen, CUDA, and Tensor Cores - Part 1

NVIDIA Tensor Cores Programming

Analysis of a Tensor Core

This is How Tensor Cores Destroy Laptop NPUs

What Is A Tensor Core In Simple Terms? - The Hardware Hub

Inside a GPU: How Your Graphics Card Powers Games and AI

CUDA Cores vs Tensor Cores: Key Differences Explained

Nvidia CUDA in 100 Seconds

Machine Learning Without Tensor Cores - How PS5 Pro Does It!

Funktionsweise der Tensor Cores (How Tensor Cores Work)

Nvidia's 50 Series GPUs - A Push for AI Tensor Cores Performance, Upscaling and 'Fake Fram...

Tensors for Neural Networks, Clearly Explained!!!

DGEMM using Tensor Cores, and Its Accurate and Reproducible Versions

Detailed Analysis of Tensor Cores, TPUs

AMD FSR Proves Tensor Cores aren’t for Gamers – How will Nvidia respond?

Nvidia Tensor Core INSANE !!!!!

Photonic Tensor Cores for Machine Learning

Flash LLMs: Tensor Cores

Denis Timonin about AMP/FP16 and Tensor Cores

Everything You Need To Know About CUDA Tensor Cores (98% util)

Tensor Cores What are they?
