filmov

tv

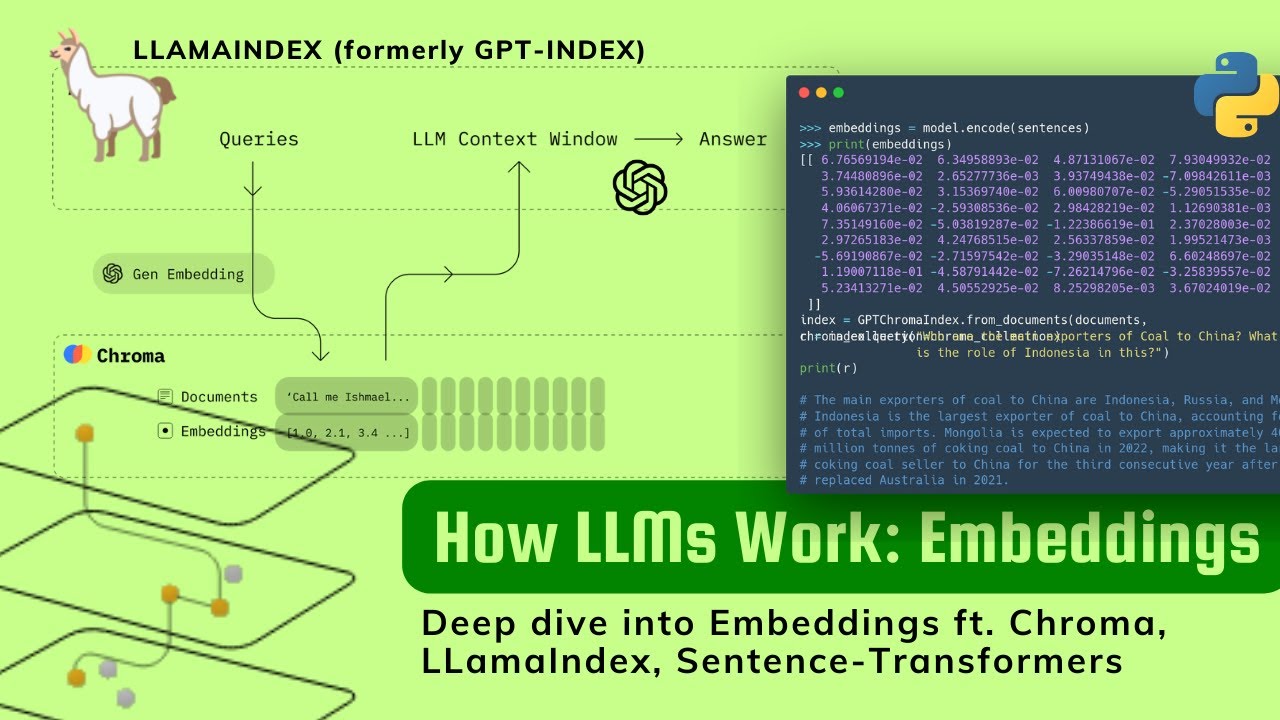

Understanding Embeddings in LLMs (ft LlamaIndex + Chroma db)

Показать описание

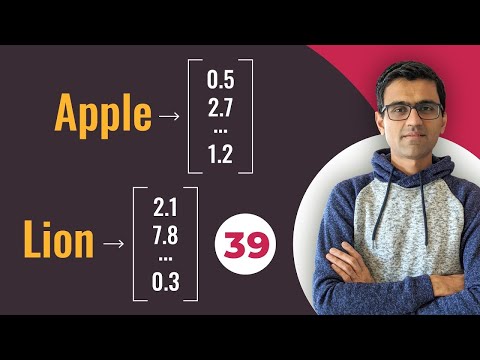

We take a deep dive into one of the most important building blocks of LLMs (large language models such as GPT-4, Alpaca, and Llama): embeddings. Nearly every LangChain or LlamaIndex tutorial mentions them, but the explanations often feel rushed or opaque. This video takes a closer look at what embeddings really are and the role they play in a world dominated by large language models like GPT.

In Chroma's own words, embeddings are "the A.I-native way to represent any kind of data, making them the perfect fit for working with all kinds of A.I-powered tools and algorithms. They can represent text, images, and soon audio and video. There are many options for creating embeddings, whether locally using an installed library, or by calling an API".
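To make the idea of "representing data as vectors" concrete, here is a minimal sketch in plain Python. It is not how Chroma or any real embedding model works: `toy_embed` and `build_vocab` are hypothetical helpers that just count words, so they capture word overlap rather than meaning. The point is only to show that once text becomes a fixed-length list of numbers, similarity can be measured with cosine similarity.

```python
import math
import re


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_vocab(texts):
    """Assign each distinct word a position in the vector (hypothetical helper)."""
    vocab = {}
    for text in texts:
        for word in tokenize(text):
            vocab.setdefault(word, len(vocab))
    return vocab


def toy_embed(text, vocab):
    """Turn text into a fixed-length vector of word counts.

    A toy stand-in for a real embedding model: it only sees word overlap.
    """
    vec = [0.0] * len(vocab)
    for word in tokenize(text):
        if word in vocab:
            vec[vocab[word]] += 1.0
    return vec


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


sentences = [
    "the cat sat on the mat",
    "a cat sat on a mat",
    "stock prices fell sharply today",
]
vocab = build_vocab(sentences)
vectors = [toy_embed(s, vocab) for s in sentences]

# The two cat sentences share words, so they score higher than cat vs. stocks.
print(cosine_similarity(vectors[0], vectors[1]))
print(cosine_similarity(vectors[0], vectors[2]))
```

A real model (run locally from an installed library or called through an API, as the quote says) would replace `toy_embed` and place sentences with similar meaning close together even when they share no words.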

LlamaIndex (formerly GPT Index) is a project that provides a central interface for connecting your LLMs with external data.

LangChain is a fantastic tool for developers building AI systems on top of the many available LLMs (large language models such as GPT-4, Alpaca, and Llama). It helps unify and standardize the developer experience across text embeddings, vector stores / databases (like Chroma), and chaining these components into downstream applications through agents.
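As a rough illustration of what a vector store does conceptually, the sketch below keeps embeddings in memory and returns the stored documents closest to a query vector. `ToyVectorStore` is a hypothetical class written for this example; it is not the Chroma or LangChain API, and the three-dimensional vectors are hand-made rather than produced by a model.

```python
import math


class ToyVectorStore:
    """In-memory store of (id, embedding, document) rows with cosine search."""

    def __init__(self):
        self._rows = []

    def add(self, doc_id, embedding, document):
        """Store one document together with its precomputed embedding."""
        self._rows.append((doc_id, list(embedding), document))

    def query(self, embedding, k=1):
        """Return the k stored rows most similar to the query embedding."""
        scored = [
            (self._cosine(embedding, vec), doc_id, document)
            for doc_id, vec, document in self._rows
        ]
        # Highest similarity first.
        scored.sort(key=lambda row: row[0], reverse=True)
        return [(doc_id, document, score) for score, doc_id, document in scored[:k]]

    @staticmethod
    def _cosine(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        norm_a = math.sqrt(sum(x * x for x in a))
        norm_b = math.sqrt(sum(y * y for y in b))
        if norm_a == 0 or norm_b == 0:
            return 0.0
        return dot / (norm_a * norm_b)


store = ToyVectorStore()
# Hand-made vectors for illustration; a real pipeline would get these from a model.
store.add("doc-cats", [1.0, 0.0, 0.0], "Cats sleep most of the day.")
store.add("doc-stocks", [0.0, 1.0, 0.0], "Stock prices fell today.")
store.add("doc-dogs", [0.8, 0.0, 0.6], "Dogs enjoy long walks.")

# A query vector that points mostly in the "cats" direction.
for doc_id, document, score in store.query([0.9, 0.1, 0.0], k=2):
    print(doc_id, round(score, 3), document)
```

In a retrieval setup, the documents returned by `query` would typically be placed into the prompt sent to the LLM, which is the "chaining" step that libraries like LangChain and LlamaIndex wrap for you.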

Mentioned in the video:

- Chroma Embeddings database (vector store):

- Watch PART 2 of the LangChain / LLM series:

- Watch PART 3 of the LangChain / LLM series: LangChain + HuggingFace's Inference API (no OpenAI credits required!)

- HuggingFace's Sentence-Transformer model

All the code for the LLM (large language model) series featuring GPT-3, ChatGPT, LangChain, LlamaIndex and more is in my GitHub repository, so go and ⭐ star or 🍴 fork it. Happy coding!
