Representations vs Algorithms: Symbols and Geometry in Robotics

Nicholas Roy, MIT

Abstract: In the last few years, the ability of robots to understand and operate in the world around them has advanced considerably. Examples include the growing number of self-driving car systems, the considerable work in robot mapping, and the growing interest in home and service robots. However, one limitation is that robots most often reason and plan using heavily geometric models of the world, such as point features, dense occupancy grids, and action cost maps. To plan and reason over long length scales and timescales, and to plan more complex missions, robots need to be able to reason about abstract concepts such as landmarks, segmented objects, and tasks (among other representations). I will talk about recent work on joint reasoning about semantic representations and physical representations, and what these joint representations mean for planning and decision making.

Algorithm and Flowcharts: Symbols and Examples

Software Flowchart

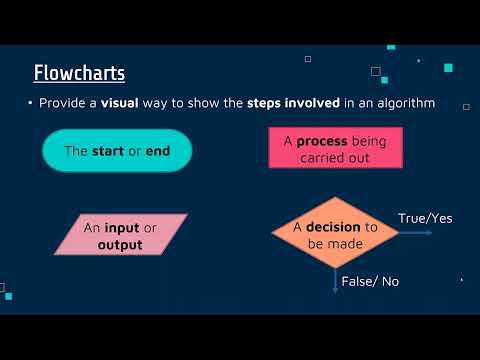

Flowchart definition and symbols

Computer Science Basics: Sequences, Selections, and Loops

Algorithm and Flowchart

Difference between Flowchart and Algorithm | Flowchart Vs Algorithm | Learn Coding

algorithm representation

How to Make Algorithm and Flowchart from a given problem

Flowcharts

Flowchart symbols|flowchart #algorithm #flowchart #youtubeshorts #instareel #viral #viralvideo

Algorithm Vs Flowchart Vs Pseudocode | Difference Between Algorithm And Flowchart | Intellipaat

What is a Flowchart - Flowchart Symbols, Flowchart Types, and More

Algorithm and Flow Chart

Representation of an Algorithm

Is math discovered or invented? - Jeff Dekofsky

How exactly does binary code work? - José Américo N L F de Freitas

Representation of Algorithms ( flowchart & Pseudocode

9) A is a graphical representation of an algorithm. Answer: Page 3 of 9. 10) In a flowchart, the or…...

#01 Flowchart Symbols || Algorithm and Flowchart for Finding the Largest of Two Numbers

Dijkstra's algorithm in 3 minutes

1.8.1 Asymptotic Notations Big Oh - Omega - Theta #1

Flowcharts and Pseudocode - #1 | GCSE (9-1) in Computer Science | AQA, OCR and Edexcel

Senior Programmers vs Junior Developers #shorts
