How to Upload On-Premise Database Data to AWS S3 | Build a Data Lake | Python

A data lake is a centralized cloud storage repository in which you can store all of your data, both structured and unstructured, at any scale. It is fast becoming the standard for users looking to store and process big data. This video covers how to build an AWS S3 data lake from an on-premise SQL Server database. S3 is an easy-to-use data store; we use it to load large amounts of data for later analysis.

#AWS #S3 #DataLake

Topics covered in this video:

0:00 - Intro: data lake from on-premise to AWS S3

1:03 - Create S3 user with programmatic access

2:37 - Create S3 bucket

3:04 - Python setup

3:56 - Read data from SQL Server

5:04 - Load Data to S3 Bucket

6:59 - Code Demo

7:36 - Review S3 Data Lake
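
The read-and-load steps listed above can be sketched roughly as follows. This is a minimal illustration, not the code shown in the video: the connection string, the bucket name `my-data-lake-bucket`, the table names, and the `raw/` key prefix are all hypothetical placeholders, and the helper functions `table_to_csv_bytes` and `upload_table` are names introduced here for illustration. It assumes `pyodbc` and `boto3` are installed and that AWS credentials for the programmatic-access user are already configured in the environment.

```python
import csv
import io


def table_to_csv_bytes(conn, table_name):
    """Read a whole table through a DB-API connection and return it as CSV bytes."""
    cursor = conn.cursor()
    # Table names cannot be bound as query parameters, so only pass trusted names.
    cursor.execute(f"SELECT * FROM {table_name}")
    header = [column[0] for column in cursor.description]
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(cursor.fetchall())
    return buffer.getvalue().encode("utf-8")


def upload_table(conn, s3_client, bucket, table_name, prefix="raw"):
    """Export one table to CSV and store it in the bucket; returns the object key."""
    key = f"{prefix}/{table_name}.csv"
    body = table_to_csv_bytes(conn, table_name)
    s3_client.put_object(Bucket=bucket, Key=key, Body=body)
    return key


def main():
    # Imported here so the helpers above stay usable without these packages.
    import boto3
    import pyodbc

    # Hypothetical connection string; adjust driver, server, and database.
    conn = pyodbc.connect(
        "DRIVER={ODBC Driver 17 for SQL Server};"
        "SERVER=localhost;DATABASE=MyDatabase;Trusted_Connection=yes;"
    )
    s3 = boto3.client("s3")  # credentials come from the environment or AWS config
    for table in ["Customers", "Orders"]:  # hypothetical table names
        key = upload_table(conn, s3, "my-data-lake-bucket", table)
        print(f"Uploaded {table} to {key}")
    conn.close()
```

Calling `main()` runs the export against a real server and bucket. Passing the connection and the S3 client in as arguments keeps the helpers easy to try out with any DB-API connection before pointing them at production data. For very large tables, reading in chunks or writing Parquet instead of CSV may be a better fit.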

Get Paid Uploading Photos Using The Premise Mobile App In 2024

17. ADF | Copy files from On-premises file system into blob

Uploading on-premises data with Qlik Data Transfer

Migrating On Premise VM to AWS | VM Import/Export | Create EC2 instance based on on-premises server

How to migrate a MySQL on-prem database to AWS RDS

AWS DATASYNC | On-premises to AWS | Agent Setup | Transfer Data to S3

How To Connect To On-Premises Data In Microsoft Fabric

Tech Trends 2025 and how they would impact digital transformation initiatives

Azure - Move files from on prem to azure Blob using AZcopy utility !!!

#56. Azure Data Factory - Copy File from On Premise to Cloud

Learn how to Upload Data Files to AWS S3 via CLI Tool | S3 Sync

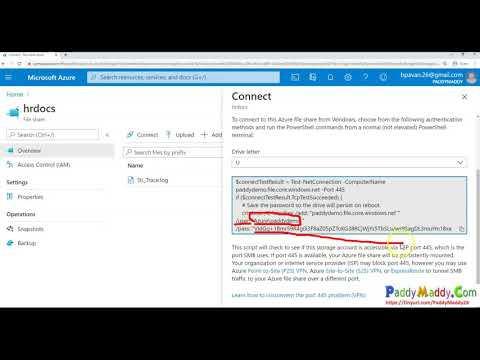

Azure FILE Share Explained with DEMO Step by step Tutorial

On-premises data with Data Factory in Microsoft Fabric

Upload files programmatically from On-premises to Azure Storage Account Using C#.Net

On Premises Storage Vs AWS Storage

Extend Quickbase Workflows to Your On-Premise Data

Power Automate - Get Files from Shared/Network Drive to SharePoint/OneDrive

EFT Arcus - On Premise File Upload and SFTP Event Rule Tutorial

Amazon S3 on Outposts: Extending S3 Into Your on-premises Environment

Data Migration from on-prem server to Snowflake Data Warehouse || How to Migrate data

How to Upload files from local to AWS S3 using Python (Boto3) API | upload_file method |Handson Demo

How to build on-premise Data Lake? | Build your own Data Lake | Open Source Tools | On-Premise

How to Upload Files to a SharePoint Site | SharePoint File Management | 2023 Tutorial
