filmov.tv

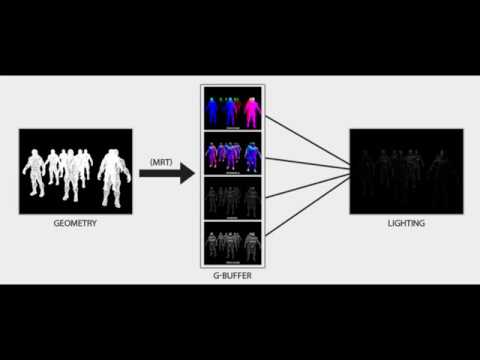

OpenGL - deferred rendering

All code samples, unless explicitly stated otherwise, are licensed under the terms of the CC BY-NC 4.0 license as published by Creative Commons, either version 4 of the License, or (at your option) any later version.

Tiled Deferred Rendering OpenGL

Forward and Deferred Rendering - Cambridge Computer Science Talks

Forward vs. Deferred Shading Comparison

OpenGL Framework with Deferred Rendering

Deferred Rendering - Interactive 3D Graphics

Why you should never use deferred shading

Deferred Renderer - C++ OpenGL WIP

Deferred Rendering with Weather Simulation

Deferred Lights - Pixel Renderer Devlog #1

Deferred Rendering in OpenGL

Tutorial 05 - Implementing Deferred Rendering

Deferred Rendering - Luz Engine (Vulkan/C++) #13

Deferred Rendering in OpenGL

All OpenGL Effects!

OpenGL - Deferred rendering

Forward vs Deferred Rendering Explained

Deferred Rendering - Geometry Buffers

Deferred Rendering #1

OpenGL Multiple Point Light Shadows - Deferred Rendering Phase 2

Deferred Rendering with OpenGL

OpenGL Deferred Rendering - Sponza

Deferred Rendering with OpenGL

OpenGL Deferred Rendering - Head Mesh
