
Asynchronous Hyperparameter Optimization with Apache Spark - Jim Dowling & Moritz Meister


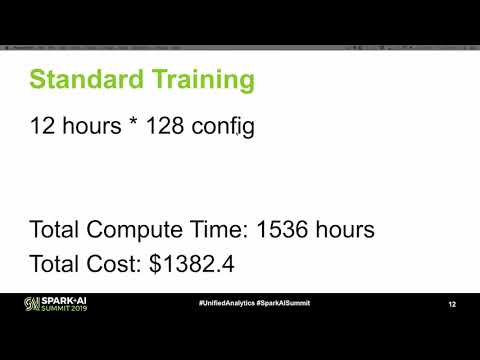

For the past two years, the open-source Hopsworks platform has used Spark to distribute hyperparameter optimization tasks for machine learning. Hopsworks provides some basic optimizers (gridsearch, randomsearch, differential evolution) that propose combinations of hyperparameters (trials), which are run synchronously in parallel on executors as map functions. However, many of these trials perform poorly, and we waste a lot of CPU and hardware-accelerator cycles on trials that could be stopped early, freeing up resources for other trials. In this talk, we present our work on Maggy, an open-source asynchronous hyperparameter optimization framework built on Spark. Maggy transparently schedules and manages hyperparameter trials, increasing resource utilization and massively increasing the number of trials that can be performed in a given period of time on a fixed amount of resources. Maggy is also used to support parallel ablation studies using Spark. We have commercial users evaluating Maggy, and we will report on the gains they have seen: reduced time to find good hyperparameters and improved utilization of GPU hardware. Finally, we will give a live demo in a Jupyter notebook, showing how to integrate Maggy into existing PySpark applications.
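The description contrasts synchronous trials (run in waves, each trained to completion) with asynchronous scheduling plus early stopping. The sketch below is a hypothetical, pure-Python simulation of that idea; it does not use Spark or Maggy and does not reflect Maggy's actual API. Assumptions: `train_step` is a made-up loss curve, one simulated "tick" equals one training step on one worker, and `should_stop` is a simplified median stopping rule, chosen here as one common early-stopping criterion, not necessarily the one Maggy uses.

```python
import math
import random
from statistics import median


def train_step(lr: float, step: int) -> float:
    """Hypothetical loss after `step` training steps for learning rate `lr`.
    Loss decays as 1/(step+1) plus a penalty that grows with the distance
    of lr from 0.01 in log space (an arbitrary assumption for the demo)."""
    penalty = abs(math.log10(lr) + 2.0)
    return 1.0 / (step + 1) + penalty


def should_stop(history, trial_id, step, min_peers=3):
    """Simplified median stopping rule: stop a trial whose loss at `step` is
    worse than the median loss of other trials that already reached `step`."""
    peers = [h[step] for tid, h in history.items()
             if tid != trial_id and len(h) > step]
    if len(peers) < min_peers:
        return False  # not enough evidence yet to judge this trial
    return history[trial_id][step] > median(peers)


def run_async(configs, n_workers, max_steps):
    """Asynchronous scheduling: as soon as a worker finishes or early-stops a
    trial, it picks up the next pending trial. Returns (final losses, ticks)."""
    pending = list(enumerate(configs))
    running = {}   # worker index -> (trial_id, lr, next step)
    history = {}   # trial_id -> list of losses per step
    final = {}     # trial_id -> last reported loss
    ticks = 0
    while pending or running:
        # Hand pending trials to any idle worker.
        for w in range(n_workers):
            if w not in running and pending:
                tid, lr = pending.pop(0)
                running[w] = (tid, lr, 0)
                history[tid] = []
        # Every busy worker executes one training step during this tick.
        ticks += 1
        for w in list(running):
            tid, lr, step = running[w]
            history[tid].append(train_step(lr, step))
            done = step + 1 == max_steps
            if done or should_stop(history, tid, step):
                final[tid] = history[tid][-1]
                del running[w]  # free the worker for the next trial
            else:
                running[w] = (tid, lr, step + 1)
    return final, ticks


def run_sync(configs, n_workers, max_steps):
    """Synchronous baseline: trials run in waves of n_workers and every trial
    trains for max_steps, with no early stopping."""
    final = {tid: train_step(lr, max_steps - 1) for tid, lr in enumerate(configs)}
    waves = math.ceil(len(configs) / n_workers)
    return final, waves * max_steps


if __name__ == "__main__":
    rng = random.Random(0)
    configs = [10 ** rng.uniform(-4, 0) for _ in range(16)]  # random search over lr
    for name, run in (("sync", run_sync), ("async", run_async)):
        final, ticks = run(configs, n_workers=4, max_steps=20)
        best = min(final, key=final.get)
        print(f"{name}: {ticks} ticks, best lr={configs[best]:.4g}")
```

Because early-stopped trials release their worker immediately, the asynchronous run never needs more ticks than the synchronous one for the same trials, and it needs fewer whenever poor trials are cut short. For example, with `configs = [0.01, 0.01, 0.01, 1.0, 0.01]`, four workers and five steps, the bad `lr=1.0` trial is stopped after one step, and the asynchronous run takes 6 ticks versus 10 for the synchronous waves.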

About: Databricks provides a unified data analytics platform, powered by Apache Spark™, that accelerates innovation by unifying data science, engineering and business.

Connect with us:
