Support Vector Machines - THE MATH YOU SHOULD KNOW

In this video, we are going to see exactly why SVMs are so versatile by getting into the math that powers them.

If you like this video and want to see more content on Data Science, Machine Learning, Deep Learning and AI, hit that SUBSCRIBE button. And ring that damn bell for notifications when I upload.

Support Vector Machine (SVM) in 2 minutes

Support Vector Machines Part 1 (of 3): Main Ideas!!!

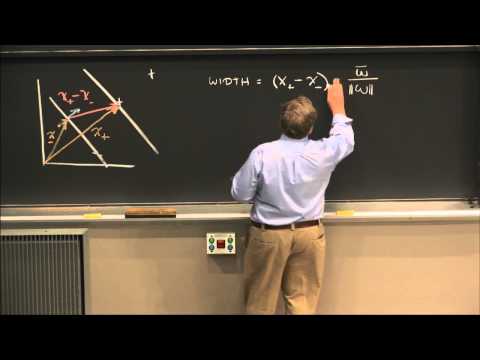

16. Learning: Support Vector Machines

Support Vector Machines - THE MATH YOU SHOULD KNOW

The Kernel Trick in Support Vector Machine (SVM)

Support Vector Machines: All you need to know!

Support Vector Machines : Data Science Concepts

Support Vector Machine (SVM) in 7 minutes - Fun Machine Learning

Linear Separability- GATE DA 24 Q53 | GATE Data Science PYQs| Machine Learning | GATE DA

Support Vector Machine - How Support Vector Machine Works | SVM In Machine Learning | Simplilearn

Support Vector Machines in Python from Start to Finish.

Support Vector Machines (SVM) - the basics | simply explained

Support Vector Machine (SVM) Basic Intuition- Part 1| Machine Learning

Machine Learning Tutorial Python - 10 Support Vector Machine (SVM)

Support Vector Machines Part 2: The Polynomial Kernel (Part 2 of 3)

Support Vector Machine - Georgia Tech - Machine Learning

Support Vector Machines (SVMs): A friendly introduction

How Support Vector Machine (SVM) Works Types of SVM Linear SVM Non-Linear SVM ML DL by Mahesh Huddar

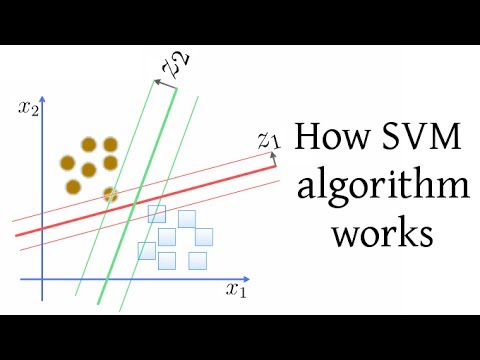

How SVM (Support Vector Machine) algorithm works

SVM (Support Vector Machine) - Algoritmos de Aprendizado de Máquinas

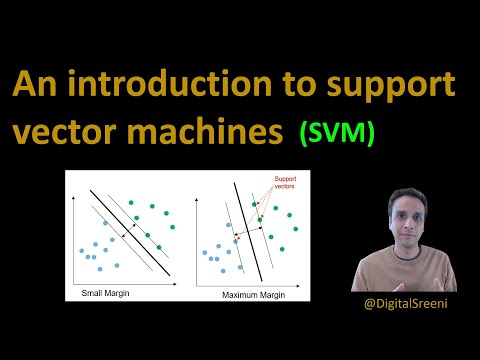

68 - Quick introduction to Support Vector Machines (SVM)

Support Vector Machines Part 3: The Radial (RBF) Kernel (Part 3 of 3)

Support vector machine in machine learning | Support vector machine python | SVM parameter tuning

Support Vector Machine (SVM) Part-1 ll Machine Learning Course Explained in Hindi

Комментарии

0:02:19

0:02:19

0:20:32

0:20:32

0:49:34

0:49:34

0:11:21

0:11:21

0:03:18

0:03:18

0:14:58

0:14:58

0:08:07

0:08:07

0:07:28

0:07:28

0:09:21

0:09:21

0:26:43

0:26:43

0:44:49

0:44:49

0:28:44

0:28:44

0:12:50

0:12:50

0:23:22

0:23:22

0:07:15

0:07:15

0:10:03

0:10:03

0:30:58

0:30:58

0:09:34

0:09:34

0:07:33

0:07:33

0:23:06

0:23:06

0:12:15

0:12:15

0:15:52

0:15:52

0:17:02

0:17:02

0:07:48

0:07:48