filmov

tv

Model Optimization | Optimize your Model | Introduction to Text Analytics with R Part 12

Показать описание

This video concludes our Introduction to Text Analytics with R and covers optimizing your model for the best generalizability on new/unseen data. It includes:

– Discussion of the sensitivity/specificity tradeoff of our optimized model.

– Potential next steps regarding feature engineering and algorithm selection for additional gains in effectiveness.

– For those who are interested, a collection of resources for further study to broaden and deepen their text analytics skills.

The data and R code used in this series is available here:

Table of Contents:

0:00 Introduction

17:18 Books recommendation

20:55 NLP task view

22:04 Information retrieval

23:47 Python

--

--

Unleash your data science potential for FREE! Dive into our tutorials, events & courses today!

--

📱 Social media links

--

Also, join our communities:

_

#modeloptimization #textanalytics #datascience

– Discussion of the sensitivity/specificity tradeoff of our optimized model.

– Potential next steps regarding feature engineering and algorithm selection for additional gains in effectiveness.

– For those who are interested, a collection of resources for further study to broaden and deepen their text analytics skills.

The data and R code used in this series is available here:

Table of Contents:

0:00 Introduction

17:18 Books recommendation

20:55 NLP task view

22:04 Information retrieval

23:47 Python

--

--

Unleash your data science potential for FREE! Dive into our tutorials, events & courses today!

--

📱 Social media links

--

Also, join our communities:

_

#modeloptimization #textanalytics #datascience

Optimize your models with TF Model Optimization Toolkit (TF Dev Summit '20)

What is AI Model Optimization | AI Model Optimization with Intel® Neural Compressor | Intel Software...

Model Optimization | Optimize your Model | Introduction to Text Analytics with R Part 12

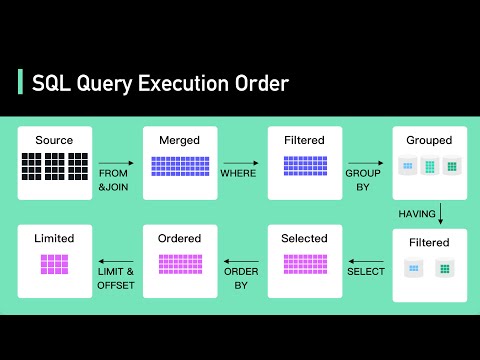

SQL Query Optimization - Tips for More Efficient Queries

Hyperparameter Optimization - The Math of Intelligence #7

Premature Optimization

Inside TensorFlow: TF Model Optimization Toolkit (Quantization and Pruning)

Secret To Optimizing SQL Queries - Understand The SQL Execution Order

👉 AITA: Revolutionize Your Media Measurement & Optimization with AI

Optimization of the Model Parameters | RapidMiner

Using The Performance Analyzer In Power BI - Model Optimization Tips

9 Tips To Optimize Your Product Pages For More Sales (Conversion Optimization)

Social Media Optimization - How to Optimize Your Social Media Profiles

Optimizing Rendering Performance in React

Fortnite Chapter 2 Remix Optimization Guide - FPS Boost & Less Delay

When to Start Optimizing Your Code | Tips & Tricks

What Is Mathematical Optimization?

BEST Optimization Guide | STALKER 2 | Max FPS | Best Settings

VRChat Avatar Tutorial - VRAM Optimization

Strategies for optimizing your BigQuery queries

Optimizing TensorFlow Models for Serving (Google Cloud AI Huddle)

Unity - TOP 10 Mobile Optimization Tips 2020 | 'Cash & Chaos' Unity Devlog #4

Neural Network Optimization Key Concepts|How to optimize your neural network

Intro to Gradient Descent || Optimizing High-Dimensional Equations

Комментарии

0:17:09

0:17:09

0:04:03

0:04:03

0:27:07

0:27:07

0:03:18

0:03:18

0:09:51

0:09:51

0:12:39

0:12:39

0:42:35

0:42:35

0:05:57

0:05:57

0:00:54

0:00:54

0:05:40

0:05:40

0:08:26

0:08:26

0:14:05

0:14:05

0:05:08

0:05:08

0:07:50

0:07:50

0:20:26

0:20:26

0:00:48

0:00:48

0:11:35

0:11:35

0:08:35

0:08:35

0:03:49

0:03:49

0:07:10

0:07:10

0:58:45

0:58:45

0:03:48

0:03:48

0:09:09

0:09:09

0:11:04

0:11:04