AI Hardware, Explained.

In 2011, Marc Andreessen said, “software is eating the world.” And in the last year, we’ve seen a new wave of generative AI, with some apps ranking among the most swiftly adopted software products of all time.

In this first part of our three-part series, we explore the terminology and technology that now form the backbone of the AI models taking the world by storm. We explore what GPUs are, how they work, and the key players, like Nvidia, competing for chip dominance.

Look out for the rest of our series, where we dive even deeper, covering supply and demand mechanics, where open source plays a role, and of course… how much all of this truly costs!

Topics Covered:

00:00 – AI terminology and technology

03:54 – Chips, semiconductors, servers, and compute

05:07 – CPUs and GPUs

06:16 – Future architecture and performance

07:12 – The hardware ecosystem

09:20 – Software optimizations

11:45 – What do we expect for the future?

14:25 – Upcoming episodes on market dynamics and cost

Resources:

AI Hardware, Explained.

AI’s Hardware Problem

AI Hardware Explained (CPU, GPU and NPU)!

Different Types Of AI Hardware

The Coming AI Chip Boom

The Hard Tradeoffs of Edge AI Hardware

Deep-dive into the AI Hardware of ChatGPT

Groq: Is it the Fastest AI Chip in the World?

This NEW AI Startup SHOCKED The ENTIRE AI INDUSTRY!

How Chips That Power AI Work | WSJ Tech Behind

AI vs Machine Learning

Memristors for Analog AI Chips

GPUs: Explained

What runs ChatGPT? Inside Microsoft's AI supercomputer | Featuring Mark Russinovich

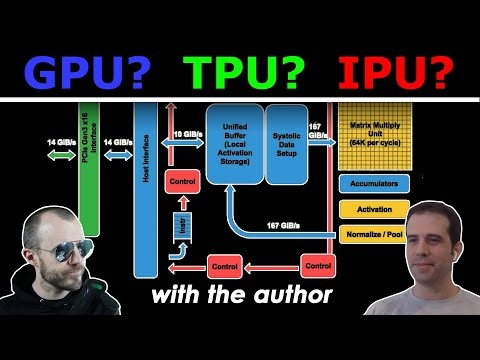

All about AI Accelerators: GPU, TPU, Dataflow, Near-Memory, Optical, Neuromorphic & more (w/ Aut...

AI In Hardware

How Nvidia Grew From Gaming To A.I. Giant, Now Powering ChatGPT

HPU vs GPU - The Frontier of AI Hardware

NVIDIA HGX H100 | The most powerful end-to-end AI supercomputing platform

How AI works, using very simple words

Analog AI Accelerators Explained

How I'd Learn AI in 2024 (if I could start over)

Hardware Acceleration for AI at the Edge

Apple Shocks Again: Introducing OpenELM - Open Source AI Model That Changes Everything!
