filmov

tv

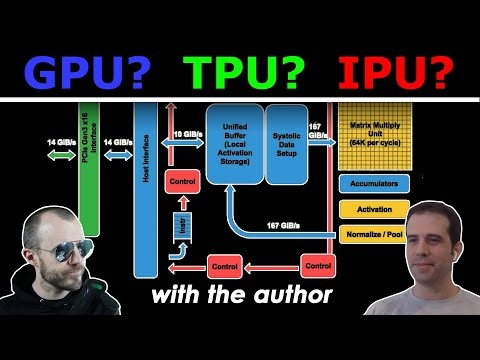

All about AI Accelerators: GPU, TPU, Dataflow, Near-Memory, Optical, Neuromorphic & more (w/ Author)

#ai #gpu #tpu

This video is an interview with Adi Fuchs, author of a series called "AI Accelerators", and an expert in modern AI acceleration technology.

Accelerators like GPUs and TPUs are an integral part of today's AI landscape. Deep Neural Network training can be sped up by orders of magnitude by making good use of these specialized pieces of hardware. However, GPUs and TPUs are only the beginning of a vast landscape of emerging technologies and companies that build accelerators for the next generation of AI models. In this interview, we go over many aspects of building hardware for AI, including why GPUs have been so successful, what the most promising approaches look like, how they work, and what the main challenges are.

OUTLINE:

0:00 - Intro

5:10 - What does it mean to make hardware for AI?

8:20 - Why were GPUs so successful?

16:25 - What is "dark silicon"?

20:00 - Beyond GPUs: How can we get even faster AI compute?

28:00 - A look at today's accelerator landscape

30:00 - Systolic Arrays and VLIW

35:30 - Reconfigurable dataflow hardware

40:50 - The failure of Wave Computing

42:30 - What is near-memory compute?

46:50 - Optical and Neuromorphic Computing

49:50 - Hardware as enabler and limiter

55:20 - Everything old is new again

1:00:00 - Where to go to dive deeper?

Read the full blog series here:

Links:

If you want to support me, the best thing to do is to share out the content :)

If you want to support me financially (completely optional and voluntary, but a lot of people have asked for this):

Bitcoin (BTC): bc1q49lsw3q325tr58ygf8sudx2dqfguclvngvy2cq

Ethereum (ETH): 0x7ad3513E3B8f66799f507Aa7874b1B0eBC7F85e2

Litecoin (LTC): LQW2TRyKYetVC8WjFkhpPhtpbDM4Vw7r9m

Monero (XMR): 4ACL8AGrEo5hAir8A9CeVrW8pEauWvnp1WnSDZxW7tziCDLhZAGsgzhRQABDnFy8yuM9fWJDviJPHKRjV4FWt19CJZN9D4n
