6. Log Pipeline Executions to SQL Table using Azure Data Factory

In this video, I discuss how to log pipeline execution details to a SQL table using Azure Data Factory.
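The following is a rough illustration of the idea, not the method shown in the video. It records one row per pipeline run in a SQL table, using Python's built-in `sqlite3` as a stand-in for Azure SQL Database. The table name `pipeline_log`, its columns, and the function `log_pipeline_run` are hypothetical. In ADF itself, the values would typically come from system variables such as `@pipeline().RunId` and from a Copy activity's output (`rowsRead`, `rowsCopied`, `copyDuration`), which would be passed to a Stored Procedure activity or a similar step.

```python
import sqlite3
from datetime import datetime, timezone

# Hypothetical log table; the name and columns are illustrative assumptions.
CREATE_LOG_TABLE = """
CREATE TABLE IF NOT EXISTS pipeline_log (
    data_factory   TEXT,
    pipeline_name  TEXT,
    run_id         TEXT,
    trigger_type   TEXT,
    trigger_time   TEXT,
    rows_read      INTEGER,
    rows_copied    INTEGER,
    copy_duration  INTEGER,
    status         TEXT
)
"""


def log_pipeline_run(conn, run_info, copy_output, status):
    """Insert one row describing a single pipeline run (hypothetical helper).

    run_info mirrors values ADF exposes as system variables, e.g.
    @pipeline().DataFactory, @pipeline().Pipeline, @pipeline().RunId.
    copy_output mirrors fields of a Copy activity's output; missing keys
    (for example when the copy step failed) are stored as NULL.
    """
    conn.execute(
        "INSERT INTO pipeline_log VALUES (?, ?, ?, ?, ?, ?, ?, ?, ?)",
        (
            run_info["DataFactory"],
            run_info["Pipeline"],
            run_info["RunId"],
            run_info["TriggerType"],
            run_info["TriggerTime"],
            copy_output.get("rowsRead"),
            copy_output.get("rowsCopied"),
            copy_output.get("copyDuration"),
            status,
        ),
    )
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # stand-in for the real SQL database
    conn.execute(CREATE_LOG_TABLE)
    # Made-up sample values standing in for what ADF would pass at run time.
    run = {
        "DataFactory": "my-adf",
        "Pipeline": "pl_copy_sales",
        "RunId": "00000000-0000-0000-0000-000000000000",
        "TriggerType": "Manual",
        "TriggerTime": datetime.now(timezone.utc).isoformat(),
    }
    copy_output = {"rowsRead": 100, "rowsCopied": 100, "copyDuration": 7}
    log_pipeline_run(conn, run, copy_output, "Succeeded")
    print(conn.execute(
        "SELECT pipeline_name, rows_copied, status FROM pipeline_log"
    ).fetchall())  # prints [('pl_copy_sales', 100, 'Succeeded')]
```

Logging the run ID alongside the counts makes it possible to join a log row back to the run shown in the ADF monitoring view; a failed run can still be logged with an empty copy output so that both successes and failures end up in the same table.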

Link to the Azure Functions playlist:

Link to the Azure Basics playlist:

Link to the Azure Data Factory playlist:

#Azure #ADF #AzureDataFactory

6. Log Pipeline Executions to SQL Table using Azure Data Factory

10. Log Pipeline Executions to file Using Mapping Data Flows in Azure Data Factory

23. Log pipeline execution details in azure data factory

24. Log pipeline execution details using stored procedure in azure data factory

Getting Log Pipeline execution details to a single file in Azure Data Factory

20 Log Pipeline Executions to SQL Table using Azure Data Factory

How to Log Pipeline Audit Data for Success and Failure in Azure Data Factory - ADF Tutorial 2021

How to see the build logs or output in Azure pipeline build

Logging in Azure Data Factory: Save logs into Database - Pipeline/Session Logs

Log ADF Pipeline Details | Azure Data Factory | Azure Data Engineer Training #adf #azure

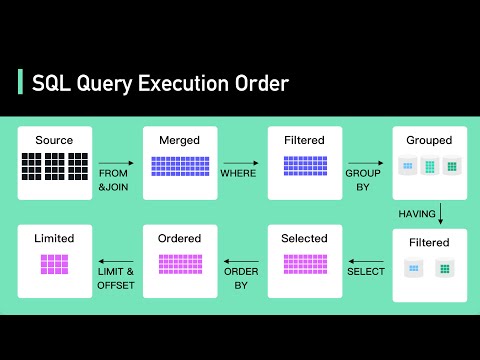

Secret To Optimizing SQL Queries - Understand The SQL Execution Order

How to Rerun Pipeline from Point of Failure in adf | check points in adf | adf tutorial part 75

ADF Custom Events for file watcher and pipeline chaining

The Fetch-Execute Cycle: What's Your Computer Actually Doing?

Where Will You See The Pipeline Runs and Trigger |Azure Data Factory Interview Questions & Answe...

23. Session log in copy activity of ADF pipeline

22. Execute Pipeline in ADF | Azure Data Factory

99. Create Alert rules in Azure Data factory for Pipeline or activity Failures #azuredatafactory

22. Fault tolerance in copy activity of ADF pipeline

How To Add LOGGING to Your Python Projects for Beginners

30. Execute Pipeline Activity in Azure Data Factory | Azure Data Factory Tutorial | TechTake

Adding Docker Compose Logs to Your CI Pipeline Is Worth It

Coding for 1 Month Versus 1 Year #shorts #coding

Great Work Pipeline Hot Tap Installation and Best Teamwork to Hot Tapping and Plugging
