10. Log Pipeline Executions to File Using Mapping Data Flows in Azure Data Factory

In this video, I discussed logging pipeline executions to a file using Mapping Data Flows in Azure Data Factory.

Link for Azure Databricks playlist:

Link for Azure Functions playlist:

Link for Azure Basics playlist:

Link for Azure Data Factory playlist:

Link for Azure Data Factory real-time scenarios:

Link for Azure Logic Apps playlist:

#Azure #ADF #AzureDataFactory


10. Log Pipeline Executions to file Using Mapping Data Flows in Azure Data Factory

6. Log Pipeline Executions to SQL Table using Azure Data Factory

23. Log pipeline execution details in azure data factory

24. Log pipeline execution details using stored procedure in azure data factory

Getting Log Pipeline execution details to a single file in Azure Data Factory

How to Log Pipeline Audit Data for Success and Failure in Azure Data Factory - ADF Tutorial 2021

20 Log Pipeline Executions to SQL Table using Azure Data Factory

Azure Data Factory Triggers Tutorial | On-demand, scheduled and event based execution

Logging in Azure Data Factory: Save logs into Database - Pipeline/Session Logs

How to see the build logs or output in Azure pipeline build

#10. Azure Data Bricks - Send exception/error message from Notebook to Pipeline

CI CD Pipeline Explained in 2 minutes With Animation!

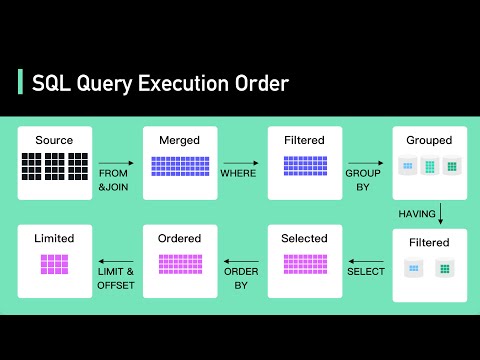

Secret To Optimizing SQL Queries - Understand The SQL Execution Order

ADF Custom Events for file watcher and pipeline chaining

How to Rerun Pipeline from Point of Failure in adf | check points in adf | adf tutorial part 75

Log ADF Pipeline Details | Azure Data Factory | Azure Data Engineer Training #adf #azure

The Definitive Guide to WBPP: Pipeline Processing Container (script)

What is Data Pipeline | How to design Data Pipeline ? - ETL vs Data pipeline (2024)

23. Session log in copy activity of ADF pipeline

30. Get Error message of Failed activities in Pipeline in Azure Data Factory

Pipeline Execution 15.10 Refinement/Planning Kickoff

Great Work Pipeline Hot Tap Installation and Best Teamwork to Hot Tapping and Plugging

Hacking CI/CD (Basic Pipeline Poisoning)

My Jobs Before I was a Project Manager
