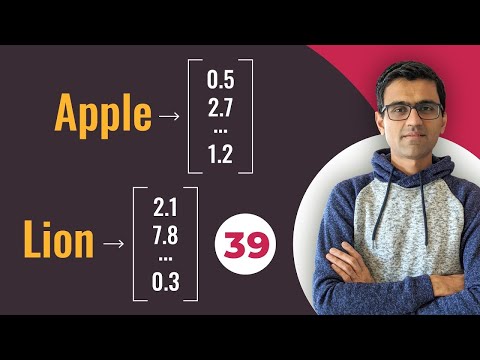

Text Classification with Word Embeddings


So, if this is a bit complicated, then luckily Keras supports all this functionality in one single layer, which is called Embedding. Embedding takes an input of a certain dimension and creates a lower-dimensional representation for us. So now we have a vocabulary of a thousand possible words, and we have three sentences, and each sentence contains exactly five words, so there is no need for padding in this simple example. The input should be a three-by-five matrix, which is correct because we have three sentences and five words per sentence; instead of words, we have integer representations of the words. The output is of shape three by five by three, so it's a 3D tensor. It is still three sentences and five words per sentence, but now each word is not represented as a single integer scalar; it is represented as a vector of size three, because we defined that we compress this high-dimensional space into a lower-dimensional space of size three.
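The shapes described above can be checked with a small sketch. This is not Keras code and not the code shown in the video: it uses plain NumPy to imitate the table lookup that an embedding layer performs, assuming a vocabulary of 1000 words, three sentences of five word ids each, and vectors of size three. The names `embedding_table`, `sentences`, and `embedded` are hypothetical, and the weights are random rather than learned.

```python
import numpy as np

rng = np.random.default_rng(0)

vocab_size = 1000   # number of possible words, as in the transcript
embedding_dim = 3   # size of each word vector, as in the transcript

# Hypothetical weight table: one row of length 3 per word in the vocabulary.
# A real layer would learn these values during training; here they are random.
embedding_table = rng.uniform(-0.05, 0.05, size=(vocab_size, embedding_dim))

# Three sentences of exactly five words each, encoded as integer word ids.
sentences = rng.integers(0, vocab_size, size=(3, 5))

# The lookup: every integer id is replaced by its row in the table.
embedded = embedding_table[sentences]

print(sentences.shape)  # (3, 5)
print(embedded.shape)   # (3, 5, 3)
```

In Keras, the corresponding layer would presumably be created as `Embedding(input_dim=1000, output_dim=3)`; the exact arguments used in the lecture are not shown in this excerpt.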

The earlier lectures in this series on this topic are listed below:

Introduction to Anomaly Detection

How to implement an anomaly detector (1/2)

How to implement an anomaly detector (2/2)

How to deploy a real-time anomaly detector

Introduction to Time Series Forecasting

Stateful vs. Stateless LSTMs

Batch Size! Which batch size to choose?

Number of Time Steps, Epochs, Training and Validation

Batch Size and Training Set Size

Input and Output Data Construction

Designing the LSTM network in Keras

Anatomy of a LSTM Node

Number of Parameters: How the LSTM Parameter Number Is Computed

Training and loading a saved model.
