filmov

tv

How to Stream Data using Apache Kafka & Debezium from Postgres | Real Time ETL | ETL | Part 2

Показать описание

In this video we will set up database streaming from Postgres database to Apache Kafka. In the previous session we installed Apache Kafka, Debezium and the rest of the required components, and configured the Postgres database for data streaming. Today we will configure a the Database and Debezium Connector and start Streaming data from our Postgres database.

#apachekafka #DataStreaming #etl

💥Subscribe to our channel:

📌 Links

-----------------------------------------

#️⃣ Follow me on social media! #️⃣

-----------------------------------------

Topics covered in this video:

0:00 - Introduction Apache Kafka, Debezium and requirements

0:29 - Postgres Table Creation

2:22 - Python Data Insert Script

3:13 - Kafka Connect API Client

3:41- VS Code API Client Install

5:03 - Postgres Kafka Connector

7:38 - Kafka Topics & Insert row for topic creation

8:53 - Stream Data to Kafka

#apachekafka #DataStreaming #etl

💥Subscribe to our channel:

📌 Links

-----------------------------------------

#️⃣ Follow me on social media! #️⃣

-----------------------------------------

Topics covered in this video:

0:00 - Introduction Apache Kafka, Debezium and requirements

0:29 - Postgres Table Creation

2:22 - Python Data Insert Script

3:13 - Kafka Connect API Client

3:41- VS Code API Client Install

5:03 - Postgres Kafka Connector

7:38 - Kafka Topics & Insert row for topic creation

8:53 - Stream Data to Kafka

Stream Processing 101 | Basics

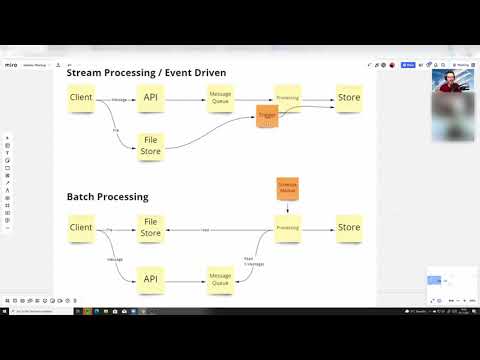

Stream vs Batch processing explained with examples

What is Stream Processing? | Batch vs Stream Processing | Data Pipelines | Real-Time Data Processing

Azure Stream Analytics Tutorial | Processing stream data with SQL

Process Real-Time Data Streams in Minutes using Azure Stream Analytics' No-Code Editor Experien...

Batch Processing vs Stream Processing | System Design Primer | Tech Primers

Snowflake Stream & Change Data Capture | Chapter-17 | Snowflake Hands-on Tutorial

How to process stream data on Apache Beam

Efficient Streaming Language Models with Attention Sinks

Use Your Cell Phone Data to Stream TV

Stream Processing with Apache Flink on CDP

Intro to Stream Processing with Apache Flink | Apache Flink 101

Creating Stream processing application using Spark and Kafka in Scala | Spark Streaming Course

Using async generators to stream data in JavaScript

Create a data stream on AWS w/ Kinesis!

[Code Demo] Using Response Stream from Fetch API | JSer - Front-End Interview questions

25. Reading the Stream using getReader method of Readable Stream - Fetch API - AJAX

Building stream processing pipelines with Dataflow

Stream API in Java

Kafka Streams | Real-time Stream Processing using Kafka Streams API | Master Class Introduction

Click Stream Data Analysis

How to Stream Data using Apache Kafka & Debezium from Postgres | Real Time ETL | ETL | Part 2

Stream Processing Pipeline - Using Pub/Sub, Dataflow & BigQuery

How to stream data from MySQL to Apache Kafka® | Kafka Tutorial

Комментарии

0:10:17

0:10:17

0:09:02

0:09:02

0:06:20

0:06:20

0:24:18

0:24:18

0:06:36

0:06:36

0:13:37

0:13:37

0:27:52

0:27:52

0:11:22

0:11:22

0:35:50

0:35:50

0:08:14

0:08:14

0:05:31

0:05:31

0:07:50

0:07:50

0:17:41

0:17:41

0:27:37

0:27:37

0:05:50

0:05:50

![[Code Demo] Using](https://i.ytimg.com/vi/0NIf080gejk/hqdefault.jpg) 0:07:04

0:07:04

0:09:52

0:09:52

0:15:17

0:15:17

0:26:04

0:26:04

0:04:03

0:04:03

0:06:58

0:06:58

0:09:56

0:09:56

0:05:23

0:05:23

0:20:04

0:20:04