Actor Critic Algorithms

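Reinforcement learning is hot right now! Policy gradients and deep Q-learning can only get us so far, but what if we used two networks to help train an AI instead of one? That's the idea behind actor-critic algorithms: one network (the actor) chooses actions, and the other (the critic) estimates how good those choices turned out to be, so the actor can learn from that feedback. I'll explain how they work in this video using the "Doom" shooting game as an example.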
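To make the two-part idea concrete, here is a minimal Python sketch. It is not the code from the video. It assumes a toy single-state task (a three-armed bandit with made-up mean rewards) and uses lookup tables instead of neural networks. The actor keeps softmax action preferences; the critic keeps a value estimate, and its temporal-difference (TD) error tells the actor whether the chosen action did better or worse than expected. The names `softmax` and `train_actor_critic`, the reward values, and the learning rates are all hypothetical, chosen for illustration.

```python
import math
import random


def softmax(prefs):
    """Turn the actor's action preferences into action probabilities."""
    m = max(prefs)  # subtract the max for numerical stability
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def train_actor_critic(rewards, episodes=2000, actor_lr=0.1, critic_lr=0.1, seed=0):
    """One-step tabular actor-critic on a hypothetical single-state task.

    rewards: mean reward of each action (toy bandit, an assumption here).
    Returns (final policy probabilities, critic's value estimate).
    """
    rng = random.Random(seed)
    prefs = [0.0] * len(rewards)  # actor: one preference per action
    value = 0.0                   # critic: estimate of expected reward V(s)
    for _ in range(episodes):
        probs = softmax(prefs)
        action = rng.choices(range(len(prefs)), weights=probs)[0]
        # Noisy reward around the chosen action's mean
        reward = rewards[action] + rng.gauss(0.0, 0.1)
        # Each episode ends after one step, so the TD target is just the reward
        td_error = reward - value
        # Critic: nudge V(s) toward the observed reward
        value += critic_lr * td_error
        # Actor: policy-gradient step scaled by the critic's TD error.
        # d/dpref[a] of log softmax(action) = 1[a == action] - probs[a]
        for a in range(len(prefs)):
            grad_log = (1.0 if a == action else 0.0) - probs[a]
            prefs[a] += actor_lr * td_error * grad_log
    return softmax(prefs), value


if __name__ == "__main__":
    probs, value = train_actor_critic([0.2, 1.0, 0.5])
    print("policy:", [round(p, 3) for p in probs], "value:", round(value, 3))
```

Running it should leave most of the probability on the second action (the one with the highest mean reward), with the critic's value close to that action's reward. Swapping the tables for two neural networks and the bandit for a game environment such as Doom gives the deep version of the same loop.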

Code for this video:

i-Nickk's winning code:

Vignesh's runner up code:

Taryn's Twitter:

More learning resources:

Please Subscribe! And like. And comment. That's what keeps me going.

Want more inspiration & education? Connect with me:

Join us in the Wizards Slack channel:

And please support me on Patreon:

Sign up for my newsletter for exciting updates in the field of AI:

Actor Critic Algorithms

Everything You Need To Master Actor Critic Methods | Tensorflow 2 Tutorial

Reinforcement Learning Course: Intro to Advanced Actor Critic Methods

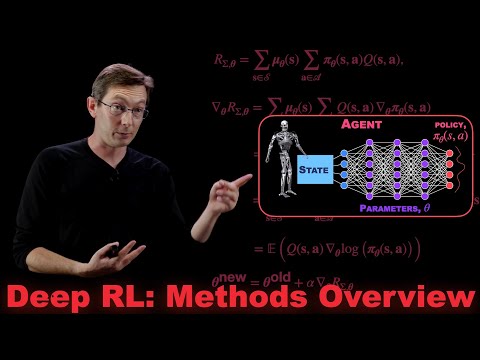

Overview of Deep Reinforcement Learning Methods

Actor-Critic Reinforcement for continuous actions!

Actor Critic Methods Foundations

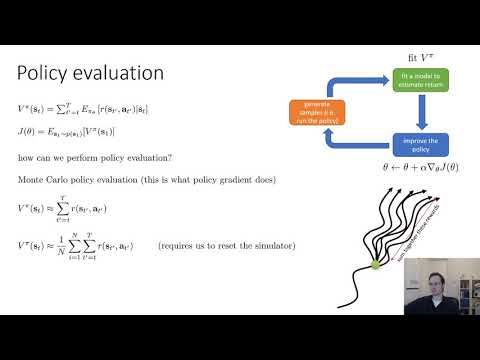

CS 182: Lecture 16: Part 1: Actor-Critic & Q-Learning

Actor-Critic Algorithms

Reinforcement Learning 6: Policy Gradients and Actor Critics

CS885 Lecture 7b: Actor Critic

Actor-Critic | The Hitchhiker's Guide to Machine Learning Algorithms

Advantage Actor Critic (A2C) Reinforcement Learning in Python with TF | OpenAIGym

Asynchronous Advantage Actor-Critic in 60 Seconds | Machine Learning Algorithms

Actor Critic and REINFORCE

Actor Critic Algorithm Introduction

What is Actor-Critic?

Advantage Actor-Critic (A2C) algorithm explained with codes and example in reinforcement learning

Soft Actor-Critic: a beginner-friendly introduction

Reinforcement Learning Actor-Critic different algorithms PPO, DDPG, SAC

DeepMind x UCL RL Lecture Series - Policy-Gradient and Actor-Critic methods [9/13]

Reinforcement Learning 23 - REINFORCE & Actor-Critic Methods

Actor-Critic Model Predictive Control (Talk ICRA 2024)

L5 DDPG and SAC (Foundations of Deep RL Series)

AI learns how to land on the moon (Continuous Actor critic, reinforcement learning)
