filmov

tv

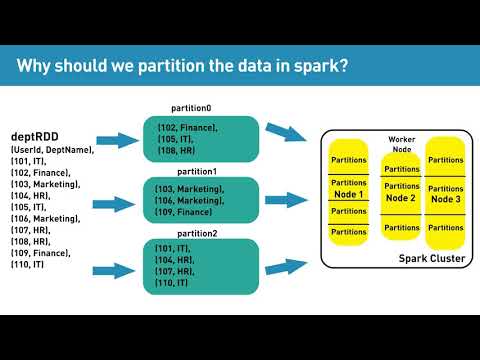

How Does Partitioning Work in Apache Spark?


Welcome back to our comprehensive series on Apache Spark performance optimization techniques! In today's episode, we dive deep into partitioning in Spark, a crucial concept for anyone looking to master Apache Spark for big data processing.

🔥 What's Inside:

1. Partitioning Basics in Spark: Understand the fundamental principles of partitioning in Apache Spark and why it's essential for performance tuning.

2. Coding Partitioning in Spark: A step-by-step guide to implementing partitioning in your Spark applications using Python (PySpark). Perfect for both beginners and experienced developers.

3. How Partitioning Enhances Performance: Discover how partitioning data on a well-chosen column lets Spark read only the data a query needs, improving overall application performance.

4. Smart Resource Allocation: Learn how the number of partitions determines how Spark spreads work (one task per partition) across the available executor cores for optimised execution.

5. Choosing the Right Partition Key: A comprehensive guide to selecting the most effective partition key for your Spark application.
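Item 3 refers to faster access to data. One common mechanism behind this, when data is written with PySpark's `DataFrameWriter.partitionBy`, is partition pruning: a filter on a partition column lets Spark skip whole directories instead of scanning them. The sketch below is a pure-Python simulation of that idea, not Spark code; the directory names and row counts are hypothetical.

```python
# Hypothetical on-disk layout: partition directory -> number of rows stored there.
layout = {
    "country=IN/year=2023": 1_000,
    "country=IN/year=2024": 1_200,
    "country=US/year=2023": 5_000,
    "country=US/year=2024": 4_800,
}

def parse(path):
    """Turn 'country=IN/year=2023' into {'country': 'IN', 'year': '2023'}."""
    return dict(part.split("=", 1) for part in path.split("/"))

def prune(layout, **filters):
    """Keep only directories whose partition values match every filter,
    mimicking how a partition-column filter lets a reader skip directories."""
    return [path for path in layout
            if all(parse(path).get(col) == str(val) for col, val in filters.items())]

to_scan = prune(layout, country="IN")
rows_read = sum(layout[path] for path in to_scan)
print(to_scan)
print(rows_read, "of", sum(layout.values()), "rows read")
```

In this made-up example, filtering on `country="IN"` touches 2 of 4 directories and 2,200 of 12,000 rows; a filter on a non-partition column would give no such shortcut.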
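To make item 4 concrete: within a stage, Spark runs one task per partition, and the number of tasks that can run at the same time is bounded by the total executor cores divided by `spark.task.cpus`. The arithmetic below is a simplified model under that assumption (a fixed set of executors, ignoring dynamic allocation, data locality and speculative execution); the cluster of 5 executors with 8 cores each is hypothetical.

```python
import math

def task_waves(num_partitions, num_executors, cores_per_executor, task_cpus=1):
    """Simplified scheduling estimate: one task per partition, and at most
    (executors * cores // task_cpus) tasks running concurrently."""
    slots = num_executors * cores_per_executor // task_cpus
    waves = math.ceil(num_partitions / slots)
    return slots, waves

for n in (8, 40, 41, 400):
    slots, waves = task_waves(n, num_executors=5, cores_per_executor=8)
    print(f"{n:>3} partitions -> {slots} parallel slots, {waves} wave(s)")
```

Under this model, 8 partitions leave 32 of the 40 cores idle, while 41 partitions need a second wave that runs a single task, so a partition count that is a multiple of the available slots tends to use the cluster more evenly.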

🌟 Whether you're preparing for Spark interview questions, starting your journey with our Apache Spark beginner tutorial, or looking to enhance your skills in Apache Spark, this video is for you.

📚 Keep Learning:

Chapters:

00:00 Introduction

02:22 Code for understanding partitioning

05:44 Problems that partitioning solves

09:48 Factors to consider when choosing a partition column

13:36 Code to show single-level and multi-level partitioning

22:09 Thank you
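Single-level and multi-level partitioning correspond to PySpark calls such as `df.write.partitionBy("country")` versus `df.write.partitionBy("country", "year")`, which write Hive-style directories like `country=IN/year=2023/`, with the partition column values encoded in the path rather than stored inside the data files. The video's own code is not reproduced here, so the following is only a pure-Python sketch of that directory layout; the rows and column names are hypothetical and no Spark API is called.

```python
from collections import defaultdict

# Hypothetical sample rows; in PySpark these would live in a DataFrame.
rows = [
    {"country": "IN", "year": 2023, "amount": 10},
    {"country": "IN", "year": 2024, "amount": 20},
    {"country": "US", "year": 2023, "amount": 30},
    {"country": "US", "year": 2023, "amount": 40},
]

def partition_paths(rows, partition_cols):
    """Group rows by the Hive-style directory they would land in.
    Partition columns are dropped from the stored row because the
    directory name already carries their values."""
    layout = defaultdict(list)
    for row in rows:
        path = "/".join(f"{col}={row[col]}" for col in partition_cols)
        data = {k: v for k, v in row.items() if k not in partition_cols}
        layout[path].append(data)
    return dict(layout)

single = partition_paths(rows, ["country"])          # one directory level
multi = partition_paths(rows, ["country", "year"])   # nested directory levels

for path, data in sorted(multi.items()):
    print(path, data)
```

Each extra partition column multiplies the number of directories by its number of distinct values, which is why multi-level partitioning is usually limited to a few low-cardinality columns.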
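When choosing a partition column, two quick checks are how many distinct values it has (each value becomes a directory) and how unevenly rows are spread across those values. The sketch below profiles three hypothetical candidate columns over made-up data; the skew metric (largest partition divided by the average partition size) is an illustrative choice, not a Spark setting.

```python
from collections import Counter

# Hypothetical sample: 1,000 orders with three candidate partition columns.
rows = [
    {"order_id": i,
     "country": "US" if i % 10 < 7 else ("IN" if i % 10 < 9 else "DE"),
     "month": f"2024-{i % 12 + 1:02d}"}
    for i in range(1_000)
]

def profile(rows, col):
    """Return (distinct values, skew), where skew = largest partition / average partition."""
    counts = Counter(row[col] for row in rows)
    distinct = len(counts)
    skew = max(counts.values()) / (len(rows) / distinct)
    return distinct, round(skew, 2)

for col in ("order_id", "country", "month"):
    distinct, skew = profile(rows, col)
    print(f"{col:<9} distinct={distinct:<5} skew={skew}")
```

In this made-up data, `order_id` would create 1,000 one-row directories (the small-files problem), `country` has few values but one of them holds 70% of the rows, and `month` is both low-cardinality and evenly spread, making it the more plausible candidate if queries commonly filter by month.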

#ApacheSparkTutorial #SparkPerformanceTuning #ApacheSparkPython #LearnApacheSpark #SparkInterviewQuestions #ApacheSparkCourse #PerformanceTuningInPySpark #ApacheSparkPerformanceOptimization #dataengineering #interviewquestions #dataengineerinterviewquestions #azuredataengineer #dataanalystinterview

