Why Benchmarking CPUs with the GTX 1080 Ti is NOT STUPID!

Check prices now:

Read the written version of this editorial on TechSpot:

Support us on Patreon

Disclaimer: Any pricing information shown or mentioned in this video was accurate at the time of video production, and may have since changed

Disclosure: As an Amazon Associate we earn from qualifying purchases. We may also earn a commission on some sales made through other store links

FOLLOW US IN THESE PLACES FOR UPDATES

What's the Best CPU Benchmark?

Why Do PC Gamers Love Benchmarks?

Wasted Opportunity: AMD Ryzen 7 9700X CPU Review & Benchmarks vs. 7800X3D, 7700X, & More

Best Benchmarks for Your New Gaming PC Build

Intel's Major Overhaul for CPU & GPU Benchmarking | 'GPU Busy' & Pipeline Tec...

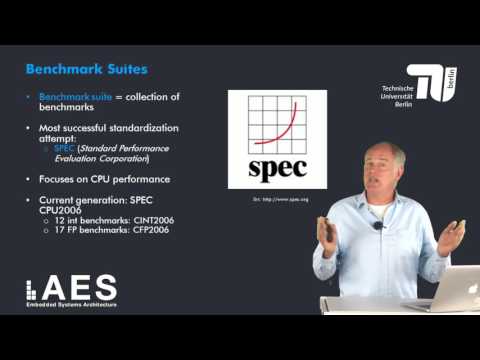

1 1 3 Benchmarks

We're Not Supposed to Have This: Core i5-12400 ES Benchmarks & Preview (Engineering Sample)

DEEPCOOL LD240 Liquid Cooler VS Ryzen 9 7900X3D

How a CPU Works in 100 Seconds // Apple Silicon M1 vs Intel i9

AMD Ryzen 9 9950X CPU Review & Benchmarks vs. 7950X, 9700X, 14900K, & More

I blew up a CPU while benchmarking... FAIL

CPU Voltage Confusion #shorts #pc #vcore #stresstest #benchmarking #benchmark #cpu #pctips #techtok

Benchmarking Ryzen & GPU Bottlenecks

AMD Ryzen 9 9900X CPU Review & Benchmarks vs. 14700K, 7900X, 9950X, & More

Intel Core Ultra 7 265K CPU Review & Benchmarks vs. 285K, 245K, 7800X3D, 7900X, & More

How to Do Blender 3D Benchmarks and Compare GPUs and CPUs

What is Benchmarking and Benchmark Tests ?? ⚡️

How to Choose the RIGHT CPU for Blender | Real-World Benchmarks

The Dark Art Of CPU Benchmarking

Arrow Lake Benchmarks

CPU Benchmarks in UHD: How Important Is the Processor? #shorts

RTX 4070 SUPER & i5 14600k Benchmarks: What to Expect

AMD's Silent Launch: Ryzen 5 7600X3D CPU Review & Benchmarks vs. 7800X3D, 5700X3D, 9800X3D
