filmov

tv

I explain Fully Sharded Data Parallel (FSDP) and pipeline parallelism in 3D with Vision Pro

Показать описание

Build intuition about how scaling massive LLMs works. I cover two techniques for making LLM models train very fast, fully Sharded Data Parallel (FSDP) and pipeline parallelism in 3D with the Vision Pro. I'm excited to see how AR can help teach complex ideas easily.

Long time dream of mine to show conceptually, how I visualize these systems.

Chapters:

00:00 Introduction

01:02 Two machines each with 2 GPUs

01:37 Transformer models blocks

02:02 Forward pass

02:10 Backward pass

02:43 Fully Sharded Data Parallel introduction

02:51 Layer sharding

03:30 Weight concat

05:25 Memory upper bound

05:58 Why more GPUs speed up training

07:23 Shard across nodes (machines)

09:20 Sharding a block across nodes

10:14 Another way of seeing sharding

11:30 Understand interconnect bottleneck

12:00 Hybrid sharding

15:00 Pipeline parallelism

16:04 Forward pass in pipeline parallelism

16:10 Intuition around pipeline parallelism

16:50 Future directions on pipeline parallelism

Long time dream of mine to show conceptually, how I visualize these systems.

Chapters:

00:00 Introduction

01:02 Two machines each with 2 GPUs

01:37 Transformer models blocks

02:02 Forward pass

02:10 Backward pass

02:43 Fully Sharded Data Parallel introduction

02:51 Layer sharding

03:30 Weight concat

05:25 Memory upper bound

05:58 Why more GPUs speed up training

07:23 Shard across nodes (machines)

09:20 Sharding a block across nodes

10:14 Another way of seeing sharding

11:30 Understand interconnect bottleneck

12:00 Hybrid sharding

15:00 Pipeline parallelism

16:04 Forward pass in pipeline parallelism

16:10 Intuition around pipeline parallelism

16:50 Future directions on pipeline parallelism

I explain Fully Sharded Data Parallel (FSDP) and pipeline parallelism in 3D with Vision Pro

How Fully Sharded Data Parallel (FSDP) works?

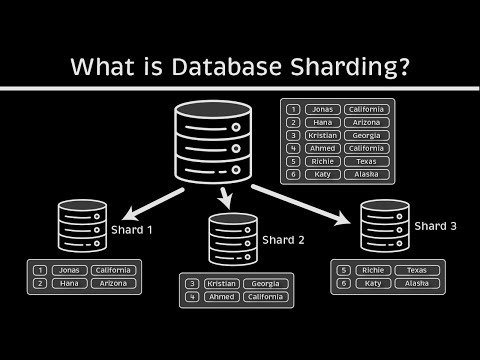

What is Database Sharding?

Database Sharting Explained

[Long Review] Fully Sharded Data Parallel: faster AI training with fewer GPUs

[Short Review] Fully Sharded Data Parallel: faster AI training with fewer GPUs

7 Must-know Strategies to Scale Your Database

What is DATABASE SHARDING?

NoSQL vs SQL: What's better?

Part 2: What is Distributed Data Parallel (DDP)

What is Database Sharding?

Manual sharding vs Automatic sharding

Too Big to Train: Large model training in PyTorch with Fully Sharded Data Parallel

Advantages of Using NoSQL Databases

The Basics of Database Sharding and Partitioning in System Design

FSDP Production Readiness

Database Sharding and Partitioning

Choosing the Right Database for System Design

Scaling Cassandra and MySQL

A friendly introduction to distributed training (ML Tech Talks)

6 SQL Joins you MUST know! (Animated + Practice)

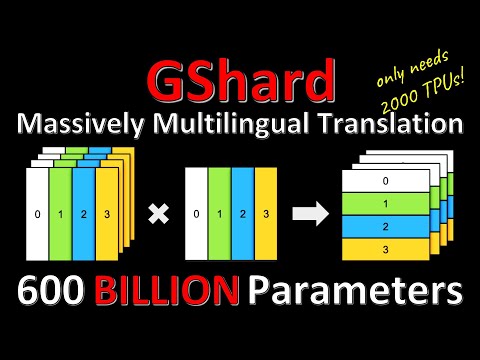

GShard: Scaling Giant Models with Conditional Computation and Automatic Sharding (Paper Explained)

Key Based Sharding

Important sharding techniques.

Комментарии

0:18:11

0:18:11

0:32:31

0:32:31

0:09:05

0:09:05

0:00:32

0:00:32

![[Long Review] Fully](https://i.ytimg.com/vi/NiL7egqyJEI/hqdefault.jpg) 0:33:24

0:33:24

![[Short Review] Fully](https://i.ytimg.com/vi/X8aSwVhiaLU/hqdefault.jpg) 0:03:16

0:03:16

0:08:42

0:08:42

0:08:56

0:08:56

0:00:45

0:00:45

0:03:16

0:03:16

0:26:56

0:26:56

0:00:57

0:00:57

0:47:34

0:47:34

0:00:23

0:00:23

0:06:02

0:06:02

0:05:17

0:05:17

0:23:53

0:23:53

0:00:51

0:00:51

0:19:18

0:19:18

0:24:19

0:24:19

0:09:47

0:09:47

1:13:04

1:13:04

0:00:21

0:00:21

0:00:43

0:00:43