Sam Altman on OpenAI, Future Risks and Rewards, and Artificial General Intelligence

If 2023 was the year artificial intelligence became a household topic of conversation, it is in many ways because of Sam Altman, CEO of the artificial intelligence research organization OpenAI. Altman, who was named TIME’s 2023 “CEO of the Year,” spoke with TIME Editor-in-Chief Sam Jacobs in a wide-ranging conversation on Tuesday as part of TIME’s “A Year in TIME” event. He spoke candidly about his November ouster from OpenAI and subsequent reinstatement, how AI threatens to contribute to disinformation, and the future potential of the rapidly advancing technology.

Sam Altman on OpenAI, Future Risks and Rewards, and Artificial General Intelligence

OpenAI CEO Sam Altman on the Future of AI

Open AI Founder Sam Altman on Artificial Intelligence's Future | Exponentially

Sam Altman: there’s no “magic red button” to stop AI

OpenAI CEO Sam Altman on the Future of AI

OpenAI CEO Sam Altman and CTO Mira Murati on the Future of AI and ChatGPT | WSJ Tech Live 2023

OpenAI CEO on the future of programming | Sam Altman and Lex Fridman

AI will take your job. — Sam Altman

OpenAI's Sam Altman's On 'Who will control the future of AI?' And His Four Polic...

Sam Altman: OpenAI CEO on GPT-4, ChatGPT, and the Future of AI | Lex Fridman Podcast #367

Sam Altman talks GPT-4o and Predicts the Future of AI

The Possibilities of AI [Entire Talk] - Sam Altman (OpenAI)

OpenAI CEO: When will AGI arrive? | Sam Altman and Lex Fridman

Which jobs will AI replace first? #openai #samaltman #ai

Elon Musk on Sam Altman and ChatGPT: I am the reason OpenAI exists

OpenAI Founder Sam Altman Gave Thousands Of People Free Money. Here’s What Happened.

OpenAI CEO on Artificial Intelligence Changing Society

Sam Altman Just REVEALED The Future Of AI..

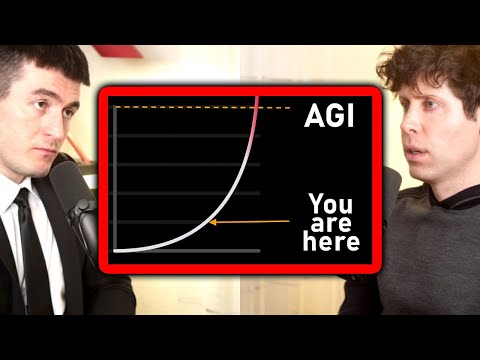

How Sam Altman Defines AGI

Sam Altman REVEALS the 'Future of AI'

OpenAI's Altman and Makanju on Global Implications of AI

OpenAI CEO Sam Altman and I talk about the future of #AI

ChatGPT creator is now worried about AI

Exploring the Future of OpenAI with the CEO Sam Altman

Комментарии

0:15:43

0:15:43

0:22:57

0:22:57

0:24:02

0:24:02

0:03:55

0:03:55

0:00:56

0:00:56

0:49:37

0:49:37

0:02:37

0:02:37

0:00:22

0:00:22

0:18:04

0:18:04

2:23:57

2:23:57

0:46:15

0:46:15

0:45:49

0:45:49

0:04:37

0:04:37

0:00:57

0:00:57

0:05:18

0:05:18

0:04:53

0:04:53

0:15:05

0:15:05

0:17:26

0:17:26

0:00:33

0:00:33

0:23:02

0:23:02

0:38:47

0:38:47

0:00:58

0:00:58

0:00:57

0:00:57

0:35:27

0:35:27