
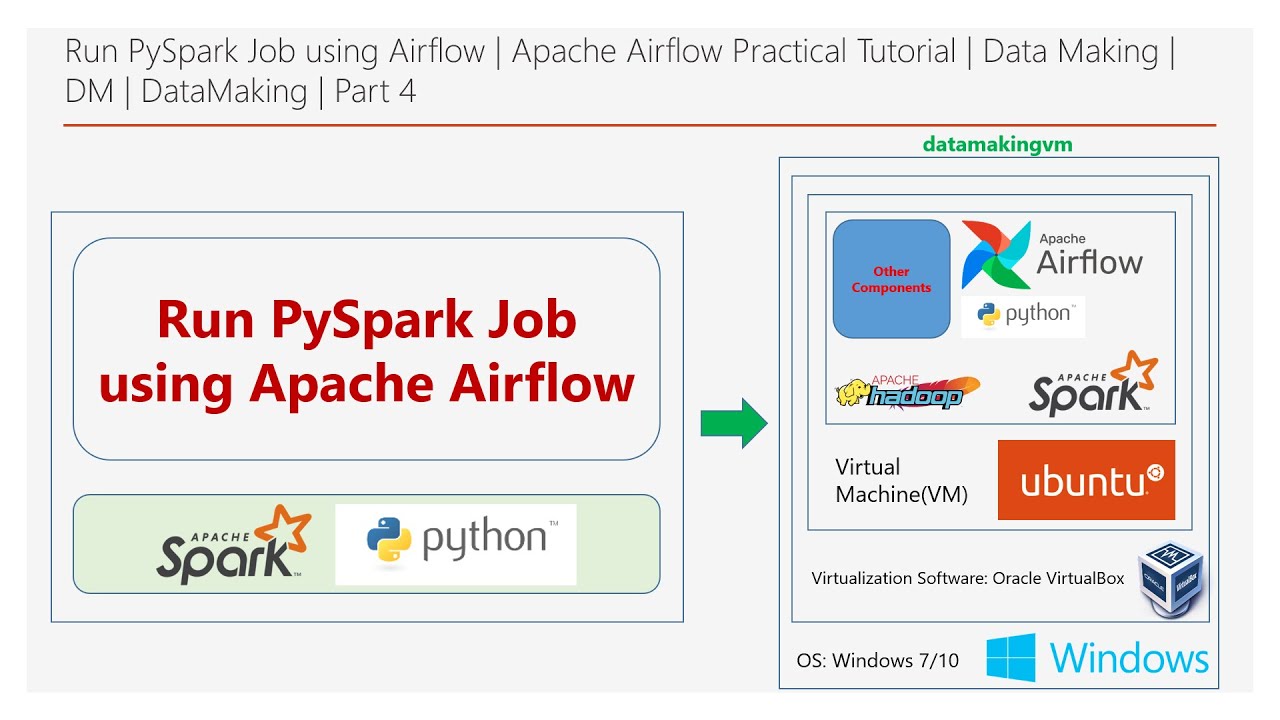

Run PySpark Job using Airflow | Apache Airflow Practical Tutorial | Part 4 | Data Making | DM | DataMaking


Hi Friends, Good morning/evening.

Do you need a FREE Apache Spark and Hadoop VM for practice?

Happy Learning!

================================================================================


How to Submit a PySpark Script to a Spark Cluster Using Airflow!

How to Schedule and Run Spark Jobs Using Airflow!

Submitting PySpark Jobs to Databricks: ETL Workflows with Airflow Explained!

Run Spark Scala Job using Airflow | Apache Airflow Practical Tutorial | Part 5 | DM | DataMaking

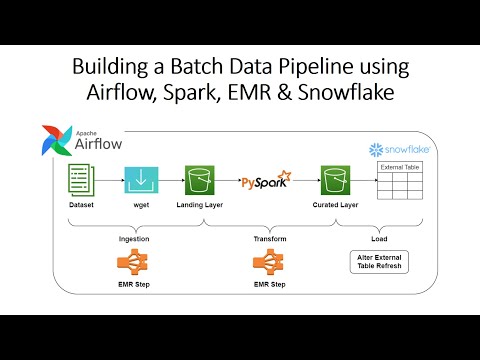

Building a Batch Data Pipeline using Airflow, Spark, EMR & Snowflake

How to Run Databricks Jobs from Airflow using Python!

Learn Apache Airflow in 10 Minutes | High-Paying Skills for Data Engineers

How to Orchestrate Databricks Jobs Using Airflow

Learn Apache Spark in 10 Minutes | Step by Step Guide

How to Schedule and Run Azure Synapse Spark Jobs Using Airflow!

Running EMR jobs with Airflow

How to build and automate your Python ETL pipeline with Airflow | Data pipeline | Python

Creating Your First Airflow DAG for External Python Scripts

Create a Dataproc cluster from Airflow - GCP

How to submit Spark jobs to EMR cluster from Airflow

How to Run Apache Beam Workflows Using Airflow!

How to Schedule and Run Snowflake Snowpark Jobs Using Airflow and a PythonVirtualEnvironment!

How to Build an ETL Analytics Pipeline With Databricks Using Airflow!

How to Run AWS Glue Jobs using Airflow!

Apache Airflow with Spark, Pyspark, Java, Scala for Data Engineers || Full Course

PySpark Tutorial

How to process big data workloads with spark on AWS EMR

Steps to run PySpark and Jupyter Notebook using Docker
