PCA with Python

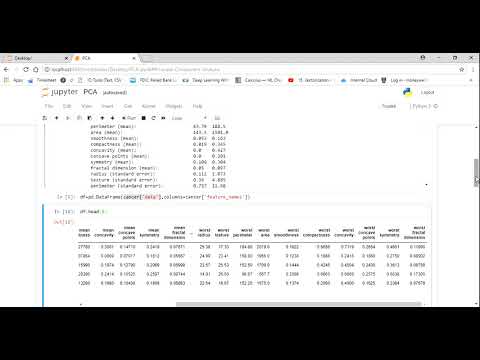

1) We explain the important input and output parameters.

2) We show how PCA finds components in linearly correlated data. We generate synthetic data with this structure, and the first principal component (PC) explains 97% of the variability.

3) We demonstrate PCA on the Iris dataset. When we project onto the first two principal components, the three classes are well separated; when we use the last components, the classes are completely mixed.

4) We apply PCA to MNIST and plot the cumulative explained variance, which helps in selecting the number of components.

5) We show that 87 components explain 90% of the variability in the data (originally 784 features), and that accuracy is affected by only 3.5% when the model is trained on these features.

6) We explain the different PCA variants: Randomized (more efficient), Incremental (for large datasets, using minibatches), Sparse (interpretable components), and Kernel (finds nonlinear combinations).
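Items 1 and 2 can be illustrated together with a small sketch. It assumes scikit-learn's `PCA` class (the description does not name the library) and a made-up data recipe: `y` is a noisy linear function of `x`. It shows the main inputs (`n_components`, `svd_solver`, `whiten`) and the main fitted outputs (`components_`, `explained_variance_`, `explained_variance_ratio_`, `mean_`).

```python
import numpy as np
from sklearn.decomposition import PCA

# Hypothetical correlated 2-D data (our assumption, not the video's recipe):
# y depends linearly on x plus Gaussian noise.
rng = np.random.default_rng(0)
x = rng.normal(size=500)
y = 2.0 * x + rng.normal(scale=1.0, size=500)
X = np.column_stack([x, y])

# Main input parameters: how many components to keep, which SVD solver to use,
# and whether to rescale the projected data to unit variance.
pca = PCA(n_components=2, svd_solver="full", whiten=False)
scores = pca.fit_transform(X)  # data expressed in principal-component coordinates

# Main fitted outputs
print("components_ (one PC direction per row):\n", pca.components_)
print("explained_variance_:", pca.explained_variance_)
print("explained_variance_ratio_:", pca.explained_variance_ratio_)
print("mean_ (subtracted before projecting):", pca.mean_)
```

For this recipe the population covariance matrix is [[1, 2], [2, 5]], whose eigenvalues are 3 ± 2√2 ≈ 5.83 and 0.17, so the first PC should explain roughly 5.83 / 6 ≈ 97% of the variance; the sample value varies slightly with the random draw and the noise level.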
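The Iris comparison in item 3 can be sketched as follows, again assuming scikit-learn. The video compares the projections visually; here, as our own substitute, the separation is summarized with the silhouette score computed from the true labels (higher means better-separated classes).

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.metrics import silhouette_score

X, y = load_iris(return_X_y=True)  # 150 samples, 4 features, 3 classes

# Keep all 4 components so the first two can be compared with the last two.
pca = PCA(n_components=4)
Z = pca.fit_transform(X)

first_two = Z[:, :2]  # highest-variance directions
last_two = Z[:, 2:]   # lowest-variance directions

# Silhouette with the true class labels: close to 1 = well separated,
# around 0 or below = classes overlap.
s_first = silhouette_score(first_two, y)
s_last = silhouette_score(last_two, y)

print("explained variance ratio:", pca.explained_variance_ratio_)
print(f"silhouette on PC1+PC2: {s_first:.2f}")
print(f"silhouette on PC3+PC4: {s_last:.2f}")
```

A scatter plot of `first_two` and `last_two` colored by `y` reproduces the visual comparison.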
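Items 4 and 5 (choosing the number of components from the cumulative explained variance, then checking accuracy) can be sketched in the same way. To keep the sketch self-contained it uses scikit-learn's bundled 8x8 `load_digits` dataset as a stand-in for MNIST, and a k-nearest-neighbors classifier chosen by us; the video's classifier is not stated, and the resulting numbers will differ from the 87 components and 3.5% reported for MNIST.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.decomposition import PCA
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline

# Stand-in for MNIST (assumption): 1797 images of 8x8 pixels = 64 features.
X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Fit PCA with all components to obtain the cumulative explained-variance curve.
pca_full = PCA().fit(X_train)
cumulative = np.cumsum(pca_full.explained_variance_ratio_)

# Smallest number of components whose cumulative variance reaches 90%.
k = int(np.argmax(cumulative >= 0.90)) + 1
print(f"{k} of {X.shape[1]} components explain {cumulative[k - 1]:.1%} of the variance")

# Compare the same classifier on raw pixels and on the first k components.
acc_raw = KNeighborsClassifier().fit(X_train, y_train).score(X_test, y_test)
reduced = make_pipeline(PCA(n_components=k), KNeighborsClassifier())
acc_pca = reduced.fit(X_train, y_train).score(X_test, y_test)
print(f"accuracy on raw features: {acc_raw:.3f}, on {k} PCs: {acc_pca:.3f}")
```

Plotting `cumulative` against `np.arange(1, len(cumulative) + 1)` with matplotlib gives the cumulative-variance graph. scikit-learn can also pick the count directly with `PCA(n_components=0.90, svd_solver="full")`. For the real 784-feature MNIST data, `sklearn.datasets.fetch_openml("mnist_784", as_frame=False)` downloads it.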
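The four variants in item 6 map onto scikit-learn estimators, which this sketch assumes is the library used. The dataset (a 500-image slice of `load_digits`, rescaled to [0, 1]), `k = 10`, the batch count, and `alpha=1.0` are illustrative choices of ours, not values from the video.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.decomposition import PCA, IncrementalPCA, KernelPCA, SparsePCA

# Illustrative data (assumption): 500 digit images, pixel values scaled to [0, 1].
X = load_digits().data[:500] / 16.0
k = 10

# Randomized: approximate SVD, cheaper when k is much smaller than the feature count.
randomized = PCA(n_components=k, svd_solver="randomized", random_state=0)
Z_rand = randomized.fit_transform(X)

# Incremental: fit batch by batch with partial_fit, so the full dataset never
# needs to be in memory at once (here we simply split an in-memory array).
incremental = IncrementalPCA(n_components=k)
for batch in np.array_split(X, 5):  # 5 minibatches of 100 rows
    incremental.partial_fit(batch)
Z_inc = incremental.transform(X)

# Sparse: an L1 penalty (alpha) drives many loadings to exactly zero,
# which makes each component easier to interpret.
sparse = SparsePCA(n_components=k, alpha=1.0, random_state=0)
Z_sparse = sparse.fit_transform(X)
zero_fraction = np.mean(sparse.components_ == 0)

# Kernel: PCA in an implicit feature space defined by an RBF kernel,
# which can capture nonlinear structure.
kernel = KernelPCA(n_components=k, kernel="rbf")
Z_kernel = kernel.fit_transform(X)

for name, Z in [("randomized", Z_rand), ("incremental", Z_inc),
                ("sparse", Z_sparse), ("kernel", Z_kernel)]:
    print(name, Z.shape)
print(f"fraction of zero loadings in SparsePCA: {zero_fraction:.2f}")
```

All four estimators expose the same `fit` / `transform` interface, so they can be swapped into a pipeline like the one used for the classification comparison.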
