Designing Structured Streaming Pipelines—How to Architect Things Right - Tathagata Das Databricks

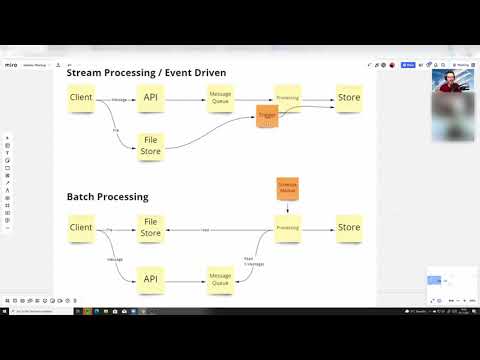

Structured Streaming has proven to be the best platform for building distributed stream processing applications. Its unified SQL/Dataset/DataFrame APIs and Spark's built-in functions make it easy for developers to express complex computations. However, expressing the business logic is only part of the larger problem of building end-to-end streaming pipelines that interact with a complex ecosystem of storage systems and workloads. It is important for the developer to truly understand the business problem that needs to be solved.

What are you trying to consume? Single source? Joining multiple streaming sources? Joining streaming with static data?

What are you trying to produce? What is the final output that the business wants? What type of queries does the business want to run on the final output?

When do you want it? When does the business want the data? What is the acceptable latency? Do you really need millisecond-level latency?

How much are you willing to pay for it? This is the ultimate question, and its answer largely determines how feasible it is to solve the questions above.

These are the questions that we ask every customer in order to help them design their pipeline. In this talk, I will walk through the decision tree for designing the right architecture for your problem.

About: Databricks provides a unified data analytics platform, powered by Apache Spark™, that accelerates innovation by unifying data science, engineering and business.
