filmov

tv

python nlp vectorization

Показать описание

Natural Language Processing (NLP) involves the analysis and manipulation of natural language data, such as text. One crucial step in many NLP tasks is converting text data into numerical vectors that can be used as input for machine learning models. In this tutorial, we'll explore how to perform NLP vectorization using Python, with a focus on the popular scikit-learn library.

Before we begin, make sure you have Python installed on your system. You can install the required libraries using the following commands:

Additionally, we'll use the Natural Language Toolkit (NLTK) for text processing, so make sure to download the NLTK data by running the following Python code:

First, let's perform some basic text preprocessing. We'll tokenize the text (split it into words), convert it to lowercase, and remove any punctuation.

Term Frequency-Inverse Document Frequency (TF-IDF) is a popular method for converting text data into numerical vectors. It measures the importance of a word in a document relative to its frequency in the entire corpus.

Let's implement TF-IDF vectorization using scikit-learn:

This code snippet demonstrates how to preprocess text, create a TF-IDF vectorizer, and transform the text data into a TF-IDF matrix.

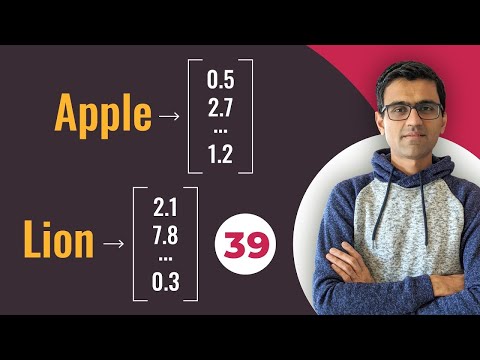

Word embeddings are dense vector representations of words, capturing semantic relationships. One popular technique for word embeddings is Word2Vec. We'll use the gensim library for this:

This code demonstrates how to train a Word2Vec model on tokenized sentences and access the word vectors.

These examples provide a basic understanding of NLP vectorization techniques using Python. Depending on your specific task and dataset, you may need to explore other methods and fine-tune parameters for optimal results.

ChatGPT

Before we begin, make sure you have Python installed on your system. You can install the required libraries using the following commands:

Additionally, we'll use the Natural Language Toolkit (NLTK) for text processing, so make sure to download the NLTK data by running the following Python code:

First, let's perform some basic text preprocessing. We'll tokenize the text (split it into words), convert it to lowercase, and remove any punctuation.

Term Frequency-Inverse Document Frequency (TF-IDF) is a popular method for converting text data into numerical vectors. It measures the importance of a word in a document relative to its frequency in the entire corpus.

Let's implement TF-IDF vectorization using scikit-learn:

This code snippet demonstrates how to preprocess text, create a TF-IDF vectorizer, and transform the text data into a TF-IDF matrix.

Word embeddings are dense vector representations of words, capturing semantic relationships. One popular technique for word embeddings is Word2Vec. We'll use the gensim library for this:

This code demonstrates how to train a Word2Vec model on tokenized sentences and access the word vectors.

These examples provide a basic understanding of NLP vectorization techniques using Python. Depending on your specific task and dataset, you may need to explore other methods and fine-tune parameters for optimal results.

ChatGPT

0:08:38

0:08:38

0:01:26

0:01:26

0:09:57

0:09:57

0:05:49

0:05:49

0:03:24

0:03:24

0:36:18

0:36:18

0:00:14

0:00:14

0:09:38

0:09:38

0:21:56

0:21:56

1:07:34

1:07:34

0:36:23

0:36:23

0:03:32

0:03:32

0:01:00

0:01:00

0:05:48

0:05:48

0:09:51

0:09:51

0:03:25

0:03:25

0:11:32

0:11:32

0:16:12

0:16:12

0:00:58

0:00:58

0:05:10

0:05:10

0:01:29

0:01:29

0:18:28

0:18:28

0:05:47

0:05:47

0:08:29

0:08:29