filmov.tv

How word vectors encode meaning

How word vectors encode meaning

Vectoring Words (Word Embeddings) - Computerphile

Word Embedding and Word2Vec, Clearly Explained!!!

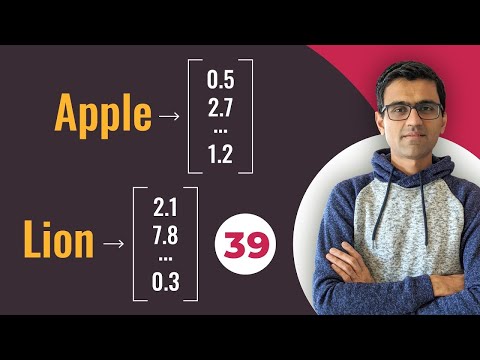

Converting words to numbers, Word Embeddings | Deep Learning Tutorial 39 (Tensorflow & Python)

What are Word Embeddings?

How large language models work, a visual intro to transformers | Chapter 5, Deep Learning

A Complete Overview of Word Embeddings

Word Embeddings || Embedding Layers || Quick Explained

ISA5810 Session 5 - NLP and Text Mining Part I

A Beginner's Guide to Vector Embeddings

Word Embeddings, Word2Vec And CBOW Indepth Intuition And Working- Part 1 | NLP For Machine Learning

Transformer Embeddings - EXPLAINED!

What is Word2Vec? A Simple Explanation | Deep Learning Tutorial 41 (Tensorflow, Keras & Python)

NLP Demystified 12: Capturing Word Meaning with Embeddings

12.1: What is word2vec? - Programming with Text

Lecture 2 | Word Vector Representations: word2vec

Word Embeddings & Positional Encoding in NLP Transformer model explained - Part 1

Quick explanation: One-hot encoding

Accelerated Natural Language Processing 3.2 - Word Vectors

What is Positional Encoding in Transformer?

Contextual word embeddings in spaCy

GloVe: Global Vector - Introduction | Glove Word - Word Co-Occurrence Matrix | NLP | #18

Jeffrey Pennington

Word Embeddings

Комментарии

0:01:00

0:01:00

0:16:56

0:16:56

0:16:12

0:16:12

0:11:32

0:11:32

0:08:38

0:08:38

0:27:14

0:27:14

0:17:17

0:17:17

0:02:09

0:02:09

2:32:04

2:32:04

0:08:29

0:08:29

0:47:45

0:47:45

0:15:43

0:15:43

0:18:28

0:18:28

0:42:47

0:42:47

0:10:20

0:10:20

1:18:17

1:18:17

0:21:31

0:21:31

0:01:43

0:01:43

0:07:02

0:07:02

0:00:57

0:00:57

0:09:06

0:09:06

0:09:32

0:09:32

0:23:29

0:23:29

0:14:28

0:14:28