filmov

tv

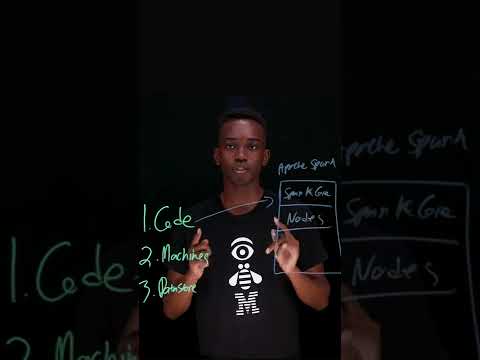

Apache Spark - Computerphile

Analysing big data stored on a cluster is not easy. Spark allows you to do so much more than just MapReduce. Rebecca Tickle takes us through some code.

This video was filmed and edited by Sean Riley.
