Encoder-Decoder Architecture for Sequence to Sequence Tasks

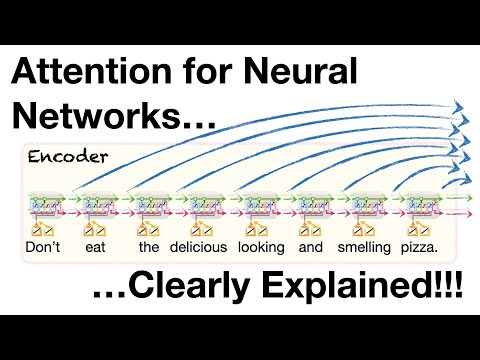

The Encoder-Decoder architecture is a fundamental concept in Natural Language Processing and sequence-to-sequence learning. In this video, we explore the principles and components of the architecture and demonstrate how it can be applied to sequence-to-sequence tasks. It is particularly useful for machine translation, text summarization, and other applications where the input and output sequences can differ in length and structure.

The Encoder converts the input sequence into an internal representation, called the encoder output. In the classic RNN-based design this is a single fixed-length context vector (the final hidden state); attention-based designs instead keep one vector per input position. Encoders are typically built from recurrent neural networks (RNNs) or self-attention layers. The Decoder, on the other hand, generates the output sequence one step at a time, conditioning each step on the encoder output and on the tokens it has already produced. Decoders are typically built from RNNs or self-attention layers, often combined with an attention mechanism over the encoder output.
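To make the data flow concrete, here is a minimal sketch of the classic RNN variant in plain Python. It is not the code from the video: the class `ToySeq2Seq`, its helper functions, the vocabulary sizes, and the token ids are all hypothetical, and the weights are random and untrained, so the generated tokens are meaningless. The point is only to show that inputs of different lengths are encoded into a context vector of the same size, and that the decoder then emits tokens one step at a time until it produces an end-of-sequence id or hits a length limit.

```python
import math
import random


def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def rand_matrix(rows, cols, rng):
    """Small random weights; a real model would learn these by training."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def one_hot(index, size):
    v = [0.0] * size
    v[index] = 1.0
    return v


def rnn_step(W_in, W_h, x, h):
    """One vanilla RNN update: h' = tanh(W_in x + W_h h)."""
    a, b = matvec(W_in, x), matvec(W_h, h)
    return [math.tanh(ai + bi) for ai, bi in zip(a, b)]


class ToySeq2Seq:
    """Hypothetical, untrained encoder-decoder used only for illustration."""

    def __init__(self, src_vocab, tgt_vocab, hidden, seed=0):
        rng = random.Random(seed)
        self.src_vocab, self.tgt_vocab, self.hidden = src_vocab, tgt_vocab, hidden
        self.enc_in = rand_matrix(hidden, src_vocab, rng)
        self.enc_h = rand_matrix(hidden, hidden, rng)
        self.dec_in = rand_matrix(hidden, tgt_vocab, rng)
        self.dec_h = rand_matrix(hidden, hidden, rng)
        self.out = rand_matrix(tgt_vocab, hidden, rng)

    def encode(self, src_ids):
        """Read the whole input; the final hidden state is the context vector."""
        h = [0.0] * self.hidden
        for token_id in src_ids:
            h = rnn_step(self.enc_in, self.enc_h, one_hot(token_id, self.src_vocab), h)
        return h

    def decode(self, context, bos_id, eos_id, max_len):
        """Greedy decoding: feed the previous output token back in at each step."""
        h, prev, generated = context, bos_id, []
        for _ in range(max_len):
            h = rnn_step(self.dec_in, self.dec_h, one_hot(prev, self.tgt_vocab), h)
            scores = matvec(self.out, h)
            prev = max(range(self.tgt_vocab), key=scores.__getitem__)
            if prev == eos_id:
                break
            generated.append(prev)
        return generated


model = ToySeq2Seq(src_vocab=6, tgt_vocab=5, hidden=4)
short_ctx = model.encode([2, 3])            # 2 input tokens
long_ctx = model.encode([2, 3, 4, 5, 2])    # 5 input tokens
output = model.decode(short_ctx, bos_id=0, eos_id=1, max_len=10)
print(len(short_ctx), len(long_ctx), output)
```

Both context vectors have length 4 regardless of input length, which is what lets the output length be decided by the decoder rather than by the input. Greedy argmax decoding is chosen here only for brevity; it is one of several possible decoding strategies.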

We will also explore the challenges and limitations of the Encoder-Decoder architecture, and discuss some potential solutions to overcome these limitations.

Additional Resources:

* Sequence to Sequence Learning with Neural Networks: A paper by Sutskever et al., 2014

* Neural Machine Translation by Encoder-Decoder Optimization with Stochastic Weight Dropout: A paper by Jean et al., 2015

#stem #NLP #MachineLearning #SequenceToSequence #EncoderDecoder #DeepLearning #NeuralNetworks #MachineTranslation

