filmov

tv

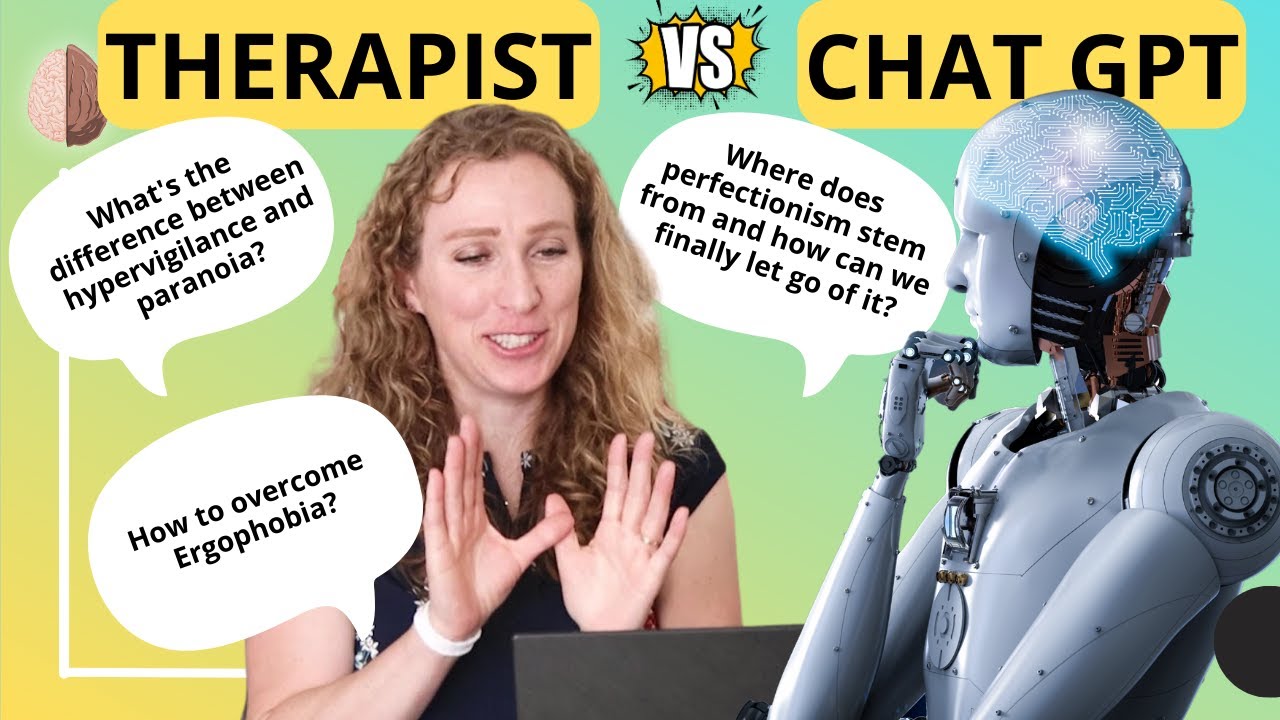

Therapist vs. Artificial Intelligence - I answer your questions #chatgpt #mentalhealth


Can ChatGPT handle complex questions about mental health? Could artificial intelligence do a therapist's job someday?

Let’s find out.

Artificial intelligence and ChatGPT have taken over, and it’s pretty incredible. So far it’s helped me figure out Excel formulas, helped my assistant write emails, summarized videos, and suggested topics for future videos. It’s a super powerful tool, and my husband is also obsessed with it.

I’ve asked you all to submit some questions, and I’m going to try to answer them. Then we’ll ask ChatGPT for its opinion. Today I’ve got my friend, colleague, and boss with me, Monica Blume, LCSW, and she’s going to be the judge of which of us did a better job and throw out her suggestions for what the treatment would be.

For the sake of time, we’ll try to outline a treatment approach if the answer to the question is too long for this video.

Therapy in a Nutshell and the information provided by Emma McAdam are solely intended for informational and entertainment purposes and are not a substitute for advice, diagnosis, or treatment regarding medical or mental health conditions. Although Emma McAdam is a licensed marriage and family therapist, the views expressed on this site or any related content should not be taken for medical or psychiatric advice. Always consult your physician before making any decisions related to your physical or mental health.

In therapy I use a combination of Acceptance and Commitment Therapy, Systems Theory, positive psychology, and a bio-psycho-social approach to treating mental illness and other challenges we all face in life. The ideas from my videos are frequently adapted from multiple sources. Many of them come from Acceptance and Commitment Therapy, especially the work of Steven Hayes, Jason Luoma, and Russ Harris. The sections on stress and the mind-body connection derive from the work of Stephen Porges (the Polyvagal Theory), Peter Levine (Somatic Experiencing), Francine Shapiro (EMDR), and Bessel van der Kolk. I also rely heavily on the work of the Arbinger Institute for my overall understanding of our ability to choose our life's direction.

Copyright Therapy in a Nutshell, LLC
