
Synthetic Gradients Explained


DeepMind released synthetic gradients, an optimization strategy that could become the most popular approach for training very deep neural networks, even more so than backpropagation. I don't think it got enough love, so I'm going to explain how it works myself and why I think it's so cool. Already know how backpropagation works? Skip to 14:10.
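The description doesn't spell out the mechanism, so here is a minimal sketch of the idea from DeepMind's "Decoupled Neural Interfaces using Synthetic Gradients" paper. It assumes a hypothetical scalar two-layer toy network (h = w1 * x, y_hat = w2 * h, target y = 3x). A small model M(h) = a*h + b predicts the gradient dL/dh, so layer 1 updates immediately without waiting for the backward pass, and M is then regressed onto the true gradient once layer 2 computes it. The function names, learning rates, and data are illustrative assumptions, not the code linked from the video.

```python
# Minimal synthetic-gradient sketch on a hypothetical scalar toy problem
# (not the video's code). Network: h = w1 * x, y_hat = w2 * h,
# per-sample loss = 0.5 * (y_hat - y)^2, target y = 3 * x.

def toy_loss(w1, w2, xs):
    """Mean squared-error loss of the two-layer linear toy net on y = 3x."""
    return sum(0.5 * (w2 * w1 * x - 3.0 * x) ** 2 for x in xs) / len(xs)


def train_synthetic_gradient_toy(steps=5000, lr=0.02, lr_m=0.2):
    xs = [-1.0, -0.5, 0.5, 1.0]   # fixed toy inputs, cycled in order
    w1, w2 = 1.0, 0.5             # layer weights (arbitrary init)
    a, b = 0.0, 0.0               # synthetic-gradient model M(h) = a*h + b, zero-initialized
    initial_loss = toy_loss(w1, w2, xs)

    for step in range(steps):
        x = xs[step % len(xs)]
        y = 3.0 * x

        # Layer 1 forward pass.
        h = w1 * x
        # M predicts dL/dh from h alone; layer 1 updates right away
        # (dL/dw1 = dL/dh * x) instead of waiting for layer 2.
        g_hat = a * h + b
        w1 -= lr * g_hat * x

        # Layer 2 consumes h and computes the true gradient dL/dh.
        err = w2 * h - y
        g_true = err * w2          # computed before w2 is updated
        w2 -= lr * err * h

        # Regress M toward the true gradient (gradient of 0.5 * diff^2).
        diff = g_hat - g_true
        a -= lr_m * diff * h
        b -= lr_m * diff

    return {"w1": w1, "w2": w2, "a": a, "b": b,
            "initial_loss": initial_loss,
            "final_loss": toy_loss(w1, w2, xs)}


if __name__ == "__main__":
    result = train_synthetic_gradient_toy()
    print(f"initial loss {result['initial_loss']:.4f}, final loss {result['final_loss']:.2e}")
    print(f"w1 * w2 = {result['w1'] * result['w2']:.4f}  (target 3.0)")
```

Because M starts at zero, layer 1 initially stays put while layer 2 learns; as M's predictions improve, layer 1 starts moving too. In the paper's setting the layers can update asynchronously; this single-threaded loop only mimics that update order.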

Code for this video:

Please subscribe! And like. And comment. That's what keeps me going.

Follow me on:

Snapchat: @llSourcell

More learning resources:

Join us in the Wizards Slack channel:

Sign up for my newsletter for exciting updates in the field of AI:


Synthetic Gradients Explained

Synthetic Gradients Tutorial - How to Speed Up Deep Learning Training

Synthetic Gradients – Decoupling Layers of a Neural Nets: Anuj Gupta

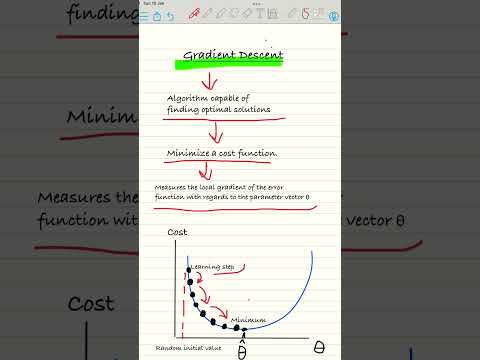

Gradient Descent in 3 minutes

Gradient descent, how neural networks learn | DL2

Gradient Descent Explained

| colourful liquid density gradient | layers of liquid in glass |Awesome science experiment

Gradient Descent Machine Learning

Vanishing & Exploding Gradient explained | A problem resulting from backpropagation

Mastering Gradient Descent | The Heart of Machine Learning Algorithms Explained

Neural Networks explained in 60 seconds!

Learning to Generate 3D Training Data Through Hybrid Gradient

What is Gradient Descent in Machine Learning?

An Old Problem - Ep. 5 (Deep Learning SIMPLIFIED)

Tutorial 7- Vanishing Gradient Problem

Gradient Boosting Explained #datascience #machinelearning #statistics #boosting #math

Backpropagation, step-by-step | DL3

Gradient descent simple explanation|gradient descent machine learning|gradient descent algorithm

Exploding Gradients Explained: The Hidden Danger in Deep Learning!

Gradient Descent|Machine Learning

Model interpretability with Integrated Gradients - Keras Code Examples

Gradient Descent Explained: How It Works and Finds the Minimum of Complex Functions #CodeMonarch

AI Explained Video Series - Learn about Explainable AI and MLOps: What are Integrated Gradients?

AI Explained – Gradient Descent | A Machine Learning Tool
