filmov

tv

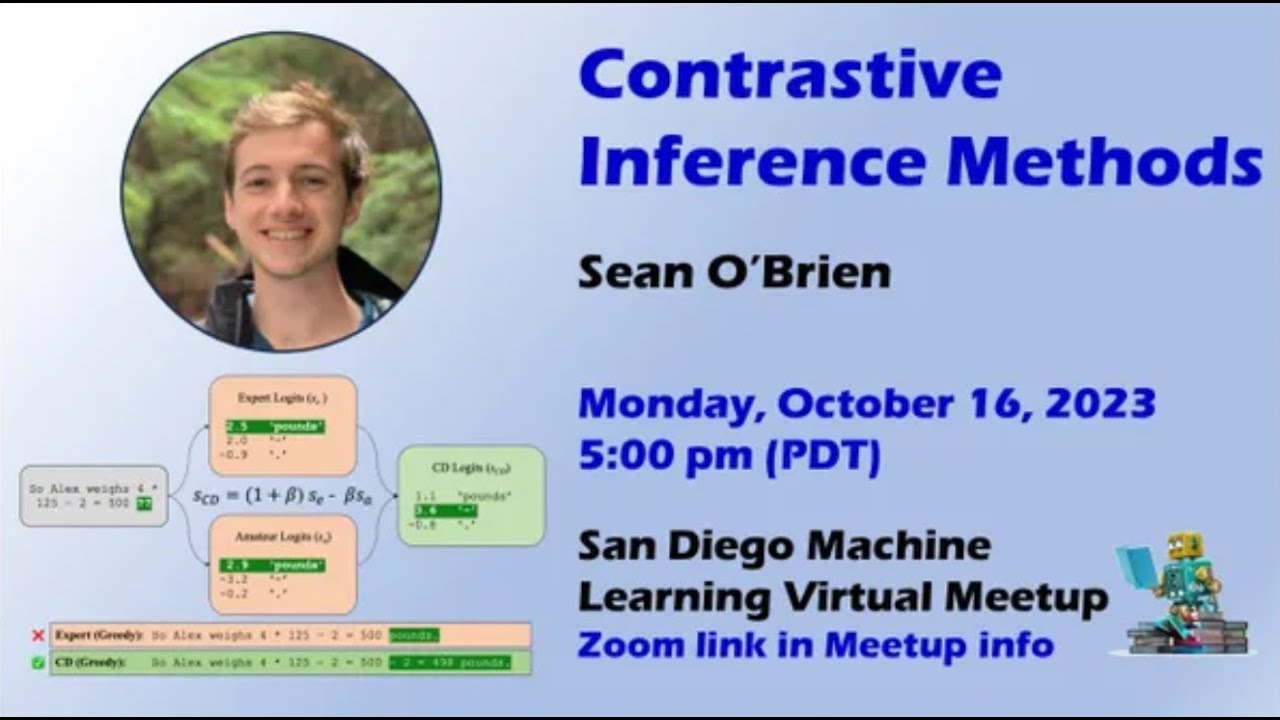

Contrastive Inference Methods

Sean O'Brien

The rapidly increasing scale of large language models (LLMs) and their training corpora has led to remarkable improvements in fluency, reasoning, and information recall. Still, these models are prone to hallucination and fundamental reasoning errors, and reliably eliciting desired behaviors from them can be challenging. The development of strategies like chain-of-thought and self-consistency has since demonstrated that training-free techniques can extract better behavior from existing models, launching a wave of research into prompting techniques. In this talk, we will examine a separate, emerging class of training-free techniques also intended to better control LLM behavior, which can be called contrastive inference methods. These techniques achieve improvements across a number of tasks by exploiting the behavioral differences between two different inference processes; in the case of contrastive decoding, between a large “expert” model and a smaller “amateur” model. While this talk will mostly focus on contrastive decoding, it will also introduce similar methods and discuss applications beyond the domain of text generation.
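To make the expert/amateur idea concrete, here is a minimal sketch of a single contrastive decoding step. It assumes the commonly described formulation: score each token by the difference between expert and amateur log-probabilities, and restrict the choice to tokens the expert itself finds plausible. The function names, the `alpha` value, and the toy logits are illustrative assumptions, not taken from the talk.

```python
import numpy as np

def log_softmax(logits):
    # Numerically stable log-softmax over a 1-D array of logits.
    shifted = logits - np.max(logits)
    return shifted - np.log(np.sum(np.exp(shifted)))

def contrastive_scores(expert_logits, amateur_logits, alpha=0.1):
    """Score next-token candidates for one decoding step (hypothetical helper).

    alpha is an assumed plausibility cutoff: tokens whose expert probability
    is below alpha * (max expert probability) are masked out with -inf.
    """
    expert_logp = log_softmax(np.asarray(expert_logits, dtype=float))
    amateur_logp = log_softmax(np.asarray(amateur_logits, dtype=float))
    # Plausibility constraint, evaluated in log space.
    plausible = expert_logp >= np.log(alpha) + expert_logp.max()
    # Reward tokens the expert likes more than the amateur does.
    return np.where(plausible, expert_logp - amateur_logp, -np.inf)

# Toy 4-token vocabulary (made-up logits, for illustration only).
expert = [4.0, 3.5, 0.0, -2.0]
amateur = [4.0, 1.0, 0.0, 3.0]

scores = contrastive_scores(expert, amateur)
next_token = int(np.argmax(scores))
print(scores, next_token)
```

In this toy case, greedy decoding on the expert alone would pick token 0, which the amateur rates just as highly. The contrastive score instead picks token 1, which the expert favors far more than the amateur does, while the plausibility mask keeps low-probability tokens from winning on the difference alone.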

Sean O’Brien is a first-year Ph.D. student at UC San Diego, advised by Julian McAuley. In the past, he has researched dark matter search methods, chess strategy, and language model reasoning at UC Berkeley and Meta AI. Now he is interested in developing more reliable decoding methods for large language models, as well as exploring the application of these techniques to goal-oriented dialogue systems.

* Please make sure to read the instructions for joining the event below.

Agenda:

5:00 - 5:05 pm -- Arrival

5:05 - 6:00 pm -- Presentation and discussion

6:00 - Time permitting -- Additional Q&A

Paper links:

Contrastive Decoding: Open-ended Text Generation as Optimization

Contrastive Decoding Improves Reasoning in Large Language Models

Surfacing Biases in Large Language Models using Contrastive Input Decoding

DoLa: Decoding by Contrasting Layers Improves Factuality in Large Language Models

Location:

Please Note: There are two steps required to join this online meetup:

You must go to our Slack community and ask for the password for the meeting. Link to join is below.

You must have a Zoom login in order to join the event. A free Zoom account will work. If you get an error message when joining the Zoom meeting, please log in to your account on the Zoom website and then try again.

Community:

Join our Slack channel for questions and discussion about what's new in ML:
