Why The ZFS Copy On Write File System Is Better Than A Journaling One

Article Referenced: Understanding ZFS storage and performance

CULT OF ZFS Shirts Available

Connecting With Us

---------------------------------------------------

Lawrence Systems Shirts and Swag

---------------------------------------------------

AFFILIATES & REFERRAL LINKS

---------------------------------------------------

Amazon Affiliate Store

UniFi Affiliate Link

All Of Our Affiliates that help us out and can get you discounts!

Gear we use on Kit

Use OfferCode LTSERVICES to get 5% off your order at

Digital Ocean Offer Code

HostiFi UniFi Cloud Hosting Service

Protect your privacy with a VPN from Private Internet Access

Patreon

⏱️ Timestamps ⏱️

00:00 File Systems Fundamentals

01:00 Journaling Systems

02:00 COW

03:30 Copy On Write Visuals

07:49 ZFS with Single Drives

How Much Memory Does ZFS Need and Does It Have To Be ECC?

What Is ZFS?: A Brief Primer

'The ZFS filesystem' - Philip Paeps (LCA 2020)

Unix & Linux: How does ZFS copy on write work for large files? (2 Solutions!!)

Tech Tip Tuesday - ZFS Snapshots

ZFS File System on Linux Ubuntu and Its Key Advantages

OpenZFS Basics by Matt Ahrens and George Wilson

ZFS for Newbies

Beginner's guide to ZFS. Part 19: Cloning Datasets

DirectIO for ZFS by Brian Atkinson

What is ZFS and Why Should You Use it?

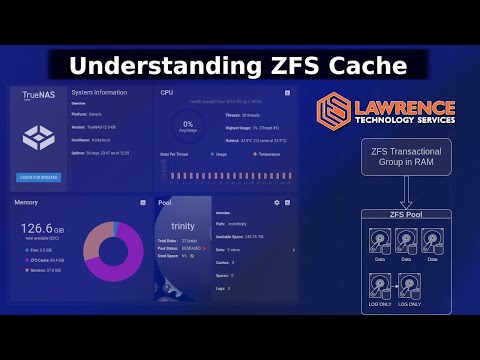

Explaining ZFS LOG and L2ARC Cache: Do You Need One and How Do They Work?

ZFS 101: Leveraging Datasets and Zvols for Better Data Management

4 MAJOR LINUX FILE SYSTEM: EXT4 , XFS, ZFS, BTRFS

DevOps & SysAdmins: ZFS copy on write

More about ZFS - datasets and zvols!

Fix slow ZFS, get More IOPS! Best Practices for TrueNAS

Beginner's guide to ZFS. Part 20: Sending and Receiving Datasets as streams

Zettabyte File System {ZFS} Explained {Computer Wednesday Ep226 }

File sharing on Windows is bad this is how to make it better

How To Use TrueNAS ZFS Snapshots For Ransomware Protection & VSS Shadow Copies

Copying files to zfs mountpoint doesn't work - the files aren't actually copied to the oth...

problame: An Introduction to ZFS
