Design a High-Throughput Logging System | System Design

Logging systems are common in large systems with many moving parts. Running them at high throughput and in real time raises a number of challenges at scale. This video gives a high-level introduction to some of these challenges and how to overcome them.

Table of Contents:

0:00 - Introduction

0:27 - Requirements

1:33 - Naive Solution

2:18 - Sharding

3:07 - Bucketing

4:15 - Sharding and Bucketing Combined

5:05 - Migrating to Cold Storage

7:00 - Next Steps
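
The chapter list names sharding, bucketing, and migration to cold storage, but this page does not spell out how the video implements them. As a rough illustration only, the sketch below shows one common reading of those terms: hash a log source to a shard, group entries into fixed time buckets, and treat a bucket as ready for cold storage once it is older than a retention window. Every name and constant here (`NUM_SHARDS`, `BUCKET_SECONDS`, `shard_for`, `bucket_for`, `storage_key`, `is_cold`, the seven-day retention) is a hypothetical choice for the example, not something taken from the video.

```python
import hashlib
from datetime import datetime, timezone

NUM_SHARDS = 4          # hypothetical shard count
BUCKET_SECONDS = 3600   # hypothetical bucket width: one hour


def shard_for(source_id: str, num_shards: int = NUM_SHARDS) -> int:
    """Map a log source to a shard using a stable hash.

    hashlib is used instead of the built-in hash(), which is salted
    per process and would route the same source differently on restart.
    """
    digest = hashlib.sha256(source_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def bucket_for(ts: datetime, bucket_seconds: int = BUCKET_SECONDS) -> int:
    """Return the start of the time bucket containing ts, in Unix seconds."""
    epoch = int(ts.timestamp())
    return epoch - (epoch % bucket_seconds)


def storage_key(source_id: str, ts: datetime) -> tuple[int, int]:
    """Combine both ideas: (shard, bucket start) locates where an entry is written."""
    return shard_for(source_id), bucket_for(ts)


def is_cold(
    bucket_start: int,
    now: datetime,
    retention_seconds: int = 7 * 24 * 3600,  # assumed retention: seven days
    bucket_seconds: int = BUCKET_SECONDS,
) -> bool:
    """A bucket may move to cold storage once its whole window is past retention."""
    bucket_end = bucket_start + bucket_seconds
    return bucket_end <= int(now.timestamp()) - retention_seconds


if __name__ == "__main__":
    ts = datetime(2024, 1, 1, 12, 34, 56, tzinfo=timezone.utc)
    now = datetime(2024, 1, 10, tzinfo=timezone.utc)
    shard, bucket = storage_key("service-a", ts)
    print(shard, bucket, is_cold(bucket, now))
```

Keying on `(shard, bucket)` means writes for one source always land on the same shard, while whole buckets can be moved or dropped as a unit without scanning individual entries.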

Socials:

Design a High-Throughput Logging System | System Design

Distributed Logging System Design | Distributed Logging in Microservices | Systems Design Interview

design a high throughput logging system system design

System Design: Logging Service (5+ Approaches)

Distributed Metrics/Logging Design Deep Dive with Google SWE! | Systems Design Interview Question 14

27: High Throughput Stock Exchange | Systems Design Interview Questions With Ex-Google SWE

Scalable and Reliable Logging at Pinterest (DataEngConf SF16)

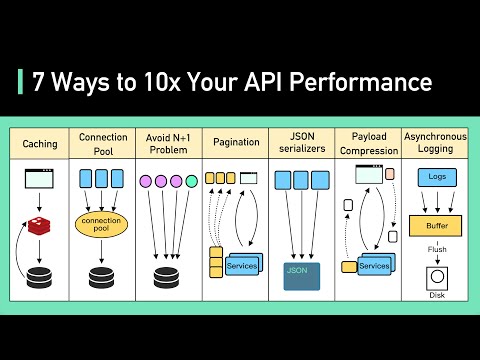

Top 7 Ways to 10x Your API Performance

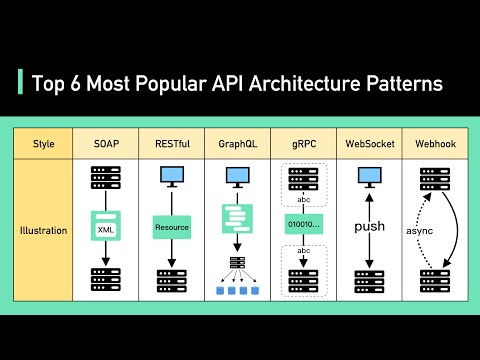

Top 6 Most Popular API Architecture Styles

High Throughput with Low Resource Usage: A Logging Journey - Eduardo Silva, Calyptia

How To Choose The Right Database?

Cache Systems Every Developer Should Know

SREcon22 Europe/Middle East/Africa - How We Implemented High Throughput Logging at Spotify

Murron: Reliable Logging Pipeline | Slack

Adventures in High Performance Logging | Ryan Resella | Code BEAM V

Kafka in 100 Seconds

System Design: Why is Kafka fast?

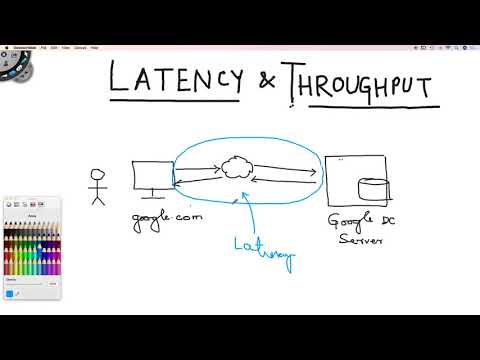

Latency vs Throughput

Design Instagram System Design Battle with 3 FAANG Staff Engineers

Automated high-throughput Wannierisation - Giovanni Pizzi

Fastest Automatic Firewood Processing Machine | Dangerous Big Chainsaw Cutting Tree machines ▶3

Fluentd and Docker Infrastructure

SREcon17 Europe/Middle East/Africa - OK Log: Distributed and Coördination-Free Logging

High-Performance Analytics with Probabilistic Data Structures: the Power of HyperLogLog
