filmov

tv

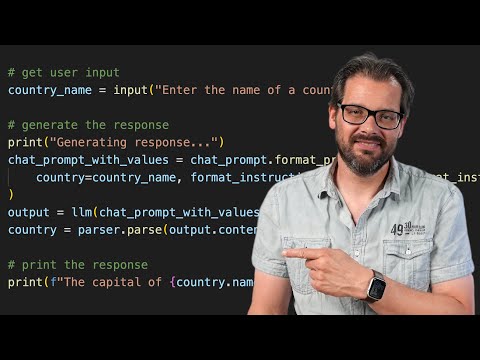

LangChain 🦜️ - COMPLETE TUTORIAL - Basics to advanced concept!

Timestamps:

0:00 Course Overview

1:24 Talk to the OpenAI API without langchain

5:04 Models, Prompts, Chains and Parser Basics

12:16 Chains: SequentialChains and RouterChains

21:11 Memory - Buffer vs. Summary

24:50 Index - Loaders, Splitters, VectorDBs, RetrievalChains

32:44 Agents - LLMs plus Tools

39:00 How ChatGPT Plugins work

41:35 Evaluation - Use LLMs to evaluate LLMs

#langchain, #chatgpt

LangChain Explained in 13 Minutes | QuickStart Tutorial for Beginners

Complete Langchain Course For Generative AI In 3 Hours

LangChain Master Class For Beginners 2024 [+20 Examples, LangChain V0.2]

Learn RAG From Scratch – Python AI Tutorial from a LangChain Engineer

LangChain Crash Course for Beginners

LangChain Crash Course For Beginners | LangChain Tutorial

What is LangChain?

LangChain Complete Tutorial ,Part :1

Complete Langchain GEN AI Crash Course With 6 End To End LLM Projects With OPENAI,LLAMA2,Gemini Pro

Learn LangChain in 7 Easy Steps - Full Interactive Beginner Tutorial

LangChain Crash Course: Build a AutoGPT app in 25 minutes!

LangChain Tutorial for Beginners | Generative AI Series 🔥

Generative AI Full Course – Gemini Pro, OpenAI, Llama, Langchain, Pinecone, Vector Databases & M...

LangChain is AMAZING | Quick Python Tutorial

LangChain GEN AI Tutorial – 6 End-to-End Projects using OpenAI, Google Gemini Pro, LLAMA2

LangChain Explained In 15 Minutes - A MUST Learn For Python Programmers

What is LangChain? 101 Beginner's Guide Explained with Animations

Complete @LangChain Essential in 1 shot | LangChain Core | LangServe | LangGraph | LangSmith | Agent

The LangChain Cookbook - Beginner Guide To 7 Essential Concepts

Try LangChain with Python and Upstash Vector

RAG + Langchain Python Project: Easy AI/Chat For Your Docs

Development with Large Language Models Tutorial – OpenAI, Langchain, Agents, Chroma

LangChain Basics Tutorial #1 - LLMs & PromptTemplates with Colab
