Text Classification - Natural Language Processing With Python and NLTK p.11

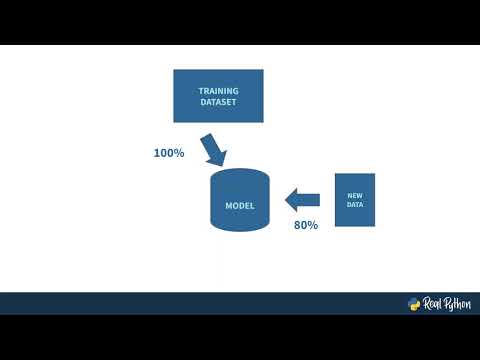

Now that we understand some of the basics of natural language processing with the Python NLTK module, we're ready to try out text classification. This is where we attempt to assign some sort of label to a body of text.

To start, we're going to use a binary label. Examples include labeling text as spam or not spam, or, as we'll be doing, as positive or negative sentiment.
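To make the idea of a binary label concrete, here is a minimal sketch of a positive/negative classifier. It is not the code from the video: the training sentences are invented for illustration, and the helper names (`tokenize`, `train`, `classify`) are hypothetical. It uses a small hand-written Naive Bayes with add-one smoothing and only the standard library, so it runs without downloading any NLTK corpora.

```python
import math
from collections import Counter, defaultdict

# Toy labeled data, invented for illustration (not taken from the video).
TRAIN = [
    ("a wonderful and moving film", "pos"),
    ("great acting and a great story", "pos"),
    ("i loved every minute", "pos"),
    ("a dull and boring film", "neg"),
    ("terrible acting and a weak story", "neg"),
    ("i hated every minute", "neg"),
]


def tokenize(text):
    """Lowercase and split on whitespace (a deliberately crude tokenizer)."""
    return text.lower().split()


def train(examples):
    """Count how often each label and each (label, word) pair occurs."""
    label_counts = Counter()
    word_counts = defaultdict(Counter)
    vocab = set()
    for text, label in examples:
        label_counts[label] += 1
        for word in tokenize(text):
            word_counts[label][word] += 1
            vocab.add(word)
    return label_counts, word_counts, vocab


def classify(text, model):
    """Return the label with the highest smoothed log-probability."""
    label_counts, word_counts, vocab = model
    total = sum(label_counts.values())
    scores = {}
    for label, count in label_counts.items():
        score = math.log(count / total)  # log prior for this label
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in tokenize(text):
            if word in vocab:  # ignore words never seen in training
                score += math.log((word_counts[label][word] + 1) / denom)
        scores[label] = score
    return max(scores, key=scores.get)


model = train(TRAIN)
print(classify("a wonderful story", model))
print(classify("boring and terrible", model))
```

With real data you would swap the toy sentences for a labeled corpus. NLTK's own `nltk.NaiveBayesClassifier.train` takes a similar input: a list of `(feature_dict, label)` pairs.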

Text Classification - Natural Language Processing With Python and NLTK p.11

Text Classification Explained | Sentiment Analysis Example | Deep Learning Applications | Edureka

Natural Language Processing: Mastering Text Classification with NLP- Comprehensive Beginner's G...

fastText tutorial | Text Classification Using fastText | NLP Tutorial For Beginners - S2 E13

Natural Language Processing (NLP) and Text Classification With Python

Natural Language Processing: Text classification | Data Science Summer School

Using AutoML Natural Language for custom text classification

NLP - Text Preprocessing and Text Classification (using Python)

What is Generative AI | Most Demanding Skills in 2024| Logicmojo Data Science

Text Classification with Python: Build and Compare Three Text Classifiers

Text Classification with Word Embeddings

Natural Language Processing with spaCy & Python - Course for Beginners

Text Classification with Python | Natural Language Processing Course Part 5| Scikit Learn / sklearn

Natural Language Processing from Scratch - Bag of Words Model for Text Classification

8. Text Classification Using Convolutional Neural Networks

TensorFlow Tutorial 11 - Text Classification - NLP Tutorial

Complete Natural Language Processing (NLP) Tutorial in Python! (with examples)

Text Classification Using Naive Bayes | Naive Bayes Algorithm In Machine Learning | Simplilearn

Text Classification With Python

Text Classification Natural Language Processing With Python and NLTK p 11

Natural Language Processing Explained for Beginners-Text Classification using NLP

Natural Language Processing(Text Classification)

Natural Language Processing Text Classification

Text Classification | NLP Lecture 6 | End to End | Average Word2Vec
