
Deep Learning hardware acceleration with AWS Inferentia


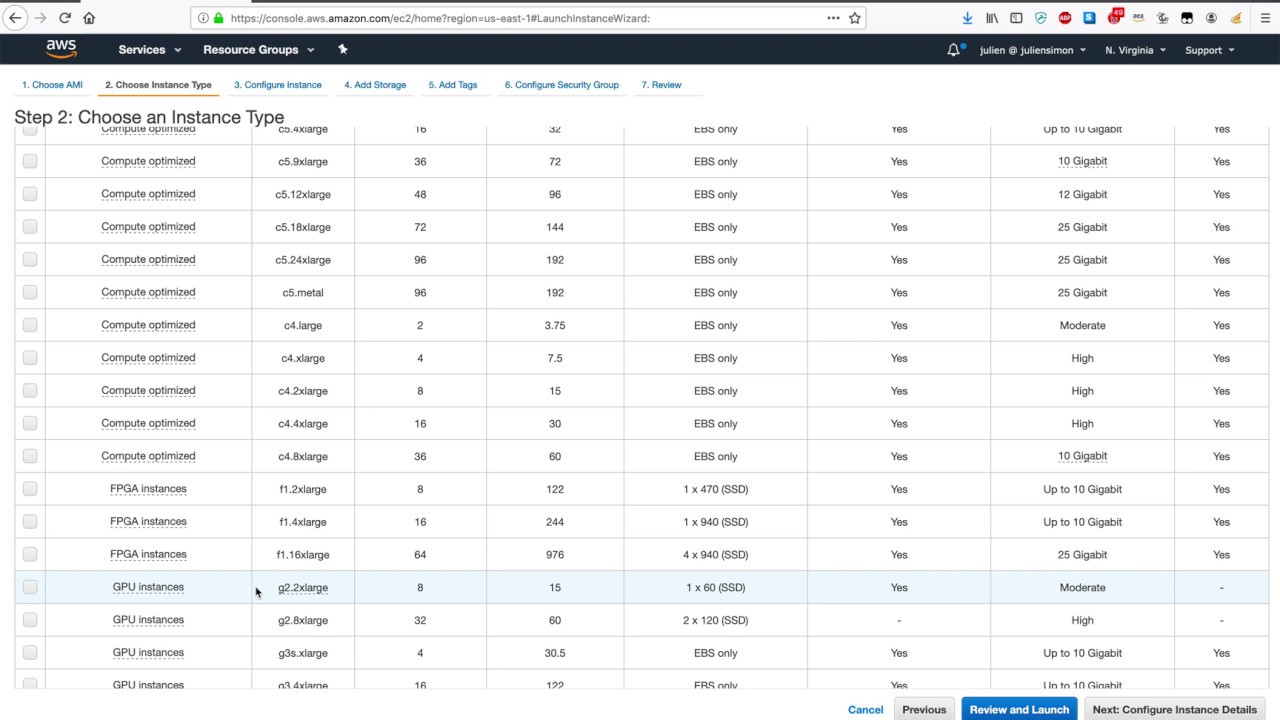

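In this video, I show you how to get started with AWS Inferentia and Amazon EC2 Inf1 instances. AWS Inferentia is a custom chip designed by AWS to accelerate deep learning inference, and Inf1 is the EC2 instance family built around it.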


Related videos:

Deep Learning hardware acceleration with AWS Inferentia (0:17:46)

Hardware Accelerator for Convolutional Neural Network (0:00:34)

Hardware acceleration for on-device Machine Learning (0:15:52)

Nvidia CUDA in 100 Seconds (0:03:13)

Hardware Acceleration for AI Workloads (0:07:30)

A Systematic Approach To Designing AI Accelerator Hardware (0:10:49)

Lecture 11 - Hardware Acceleration (0:45:22)

Deep Neural Network Hardware Accelerator on FPGA (0:05:32)

SLIDE: Smart Algorithms over Hardware Acceleration for Large-Scale Deep Learning with Beidi Chen... (0:30:04)

[REFAI Seminar 10/24/22] Towards Functional Safety of Deep Learning Hardware Accelerators (0:49:59)

Hardware acceleration of CNN on FPGA using opencl - part2 (0:00:12)

tinyML Summit 2022: Next-Generation Deep-Learning Accelerators: From Hardware to System (0:28:16)

The AI Hardware Problem (0:13:26)

All about AI Accelerators: GPU, TPU, Dataflow, Near-Memory, Optical, Neuromorphic & more (w/ Aut... (1:02:35)

ICCKE 2022 - Financial Market Prediction Using Deep Neural Networks with Hardware Acceleration (0:14:21)

Jacinto 7 processors: application-specific hardware accelerators (0:07:33)

691: A.I. Accelerators: Hardware Specialized for Deep Learning — with Ron Diamant (1:32:34)

Introduction to Machine Learning Hardware Accelerators (Digital and In-Memory Computing) (0:07:15)

CPU vs GPU: Why GPUs are more suited for Deep Learning? #deeplearning #gpu #cpu (0:07:32)

Lecture 13 - Hardware Acceleration Implemention (0:50:09)

Deep learning accelerator for ADAS (0:00:38)

Hardware Accelerators for Machine Learning Inference (0:32:55)

Cecile – Manager, Computer Vision / Machine Learning Hardware Acceleration (0:00:57)

Boost AI Performance With CPU Acceleration (0:00:41)