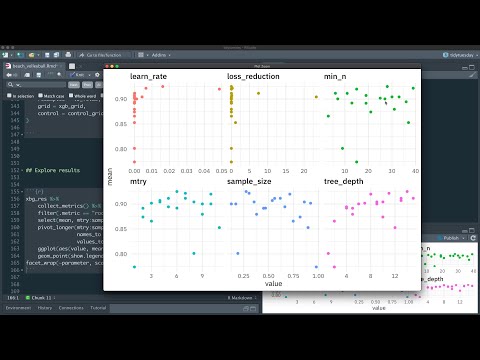

TidyTuesday: Tuning Pre-Processing Parameters with Tidymodels

In this week's #TidyTuesday video, I show how to tune pre-processing methods for regression models using #Tidymodels. I go over common pre-processing problems, such as choosing thresholds and the number of components. I also cover use cases for regression metrics such as RMSE and MAE. I then tune a basic lasso regression model and choose the optimal parameters for a house pricing model.
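As a rough illustration of why the choice between RMSE and MAE matters, the sketch below compares the two metrics on a small set of made-up house prices. The numbers and the helper names `rmse` and `mae` are assumptions for illustration only; they are not taken from the video, which works in R with Tidymodels.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error: squares each error before averaging."""
    errors = [a - p for a, p in zip(actual, predicted)]
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def mae(actual, predicted):
    """Mean absolute error: averages the absolute size of each error."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical house prices in thousands (illustrative values only).
actual = [200.0, 250.0, 300.0, 350.0]

# Every prediction misses by 10.
even_miss = [210.0, 240.0, 310.0, 340.0]

# Three predictions are exact, one misses by 40.
one_big_miss = [200.0, 250.0, 300.0, 310.0]

for name, predicted in [("even_miss", even_miss), ("one_big_miss", one_big_miss)]:
    print(name, "MAE:", mae(actual, predicted), "RMSE:", rmse(actual, predicted))
```

Both sets of predictions have the same MAE, but the set with one large miss has a higher RMSE, because squaring weights large errors more heavily. That is one reason RMSE may be preferred when large misses are especially costly, and MAE when all errors should count in proportion to their size.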

Intro: 0:00

Data Partitioning: 1:52

Pre-Process Tuning: 2:50

Defining Model: 8:09

Creating Parameter Grids: 9:30

Model Tuning: 13:25

Regression Metric Explanation: 14:35

Model Evaluation: 17:27

Model Finalization: 22:04

#DataScience

PC Setup (Amazon Affiliates)

TidyTuesday: Improving Model Train Times with TidyModels

TidyTuesday: Model Analysis with Autoplot and TidyModels

parameter tuning via tidymodels

Data preprocessing and resampling using tidymodels

TidyTuesday: Ensembling Tidymodels with Stacks

Boost Model Performance with Hyperparameter Tuning in R | Tidymodels

TidyTuesday: Multiclass Classification using Tidymodels

Max Kuhn | Total Tidy Tuning Techniques | RStudio (2020)

Tuning hyperparameters and stacking models with 'tidymodels' | R Tutorial (2021)

TidyX Episode 85 | Tidymodels - Tuning Workflow Sets

TidyTuesday: Model Evaluation using TidyModels and TidyPosterior

TidyTuesday: Applied Business Analytics with TidyModels

Tuning random forest hyperparameters with tidymodels

TidyTuesday: Classification Model Metrics using Tidymodels and Yardstick

Tune and choose hyperparameters for modeling NCAA women's tournament seeds

TidyTuesday: Transfer Learning with Tfhub

Hyperparameter tuning using tidymodels

TidyX Episode 82 | Tidymodels - Tuning a Random Forest

TidyTuesday: Creating Multilevel Models using TidyModels

Tuning XGBoost using tidymodels

Tidy Modeling with R Book Club Ch12: Model tuning & the dangers of overfitting (2021-03-30) (tmw...

TidyX Episode 86 | Tidymodels - Julia Silge and Tune Racing

Use tidymodels workflowsets to predict human/computer interactions from Star Trek
