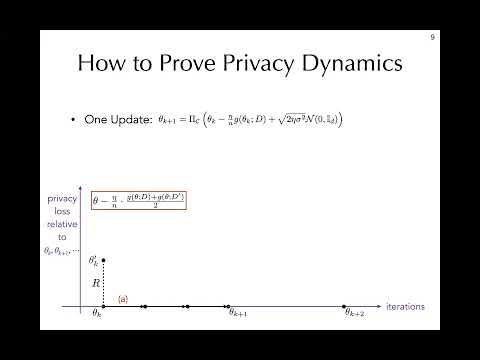

Lecture 14B: Modern Private ML - Differentially Private Stochastic Gradient Descent

Lecture 14B: Modern Private ML - Differentially Private Stochastic Gradient Descent (1:00:20)

Lecture 14A: Modern Private ML - Some Background on Neural Networks (0:21:25)

Lecture 14C: Modern Private ML - PATE (0:30:09)

Lecture 14B: Explaining Decisions (PI Explanations, Sufficient & Complete Reasons) (0:47:17)

Concentrated Differentially Private Gradient Descent with Adaptive per-Iteration Privacy Budget (0:02:56)

Antti Honkela: Accurate privacy accounting for differentially private machine learning (0:44:15)

430 - Adaptive Privacy Preserving Deep Learning Algorithms for Medical Data (0:04:52)

Lecture 13C: Differentially Private Machine Learning - Gradient Perturbation (0:37:49)

Fast and Memory Efficient Differentially Private-SGD via JL Projections (0:53:48)

Privacy, Stability, and Online Learning (0:36:49)

Interpretation of the ε and δ s of Differential Privacy | Lê Nguyên Hoang (0:03:02)

Differential privacy dynamics of noisy gradient descent (0:10:21)

Research talk: Differentially private fine-tuning of large language models (0:36:05)

DP-SGD Privacy Analysis is Tight! (0:48:20)

The Mathematics Behind Differential Privacy (0:06:13)

Differential Privacy from a Statistical Perspective–Obtaining Valid Inferences... (0:47:25)

Session 3C - Private Stochastic Convex Optimization: Optimal Rates in Linear Time (0:24:28)

Private-kNN: Practical Differential Privacy for Computer Vision (0:01:00)

Differentially Private Model Publishing For Deep Learning (0:23:13)

KDD 2020: Lecture Style Tutorials: Edge AI Systems Design and ML for IoT Data Analytics (3:58:03)

The Formal Definition of Differential Privacy | Lê Nguyên Hoang (0:05:16)

Mathematics for ML: Lecture 4 (1:09:11)

OSDI '20 - Fast RDMA-based Ordered Key-Value Store using Remote Learned Cache (0:18:58)

Genius! Can you answer this?🤔 | Maths Challenge | CBSE Class 7 | Anushya Mam #shorts #ytshorts (0:00:59)