filmov.tv

Normalization Constant for the Normal/Gaussian | Full Derivation with visualizations


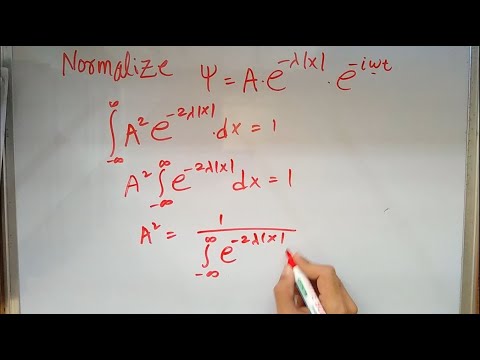

The bell-shaped curve of the Normal/Gaussian distribution is created by the exponential of a negative parabola. Using this expression directly as a probability density function would be invalid, however, because the area under the curve is not 1. Hence, it has to be divided by a normalization constant. In this video, we derive this constant and, along the way, figure out why there is a π inside it.
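The claim that the bare exponential does not integrate to 1 can be checked numerically. The sketch below is an illustration added here, not code from the video. It applies a trapezoidal rule (a choice made for this example) to exp(-(x - μ)²/(2σ²)) with an assumed σ = 2. It then compares the resulting area with σ√(2π), the standard value of the Gaussian normalization constant.

```python
import math

def unnormalized_gaussian(x, mu=0.0, sigma=1.0):
    # The "exponential of a negative parabola": exp(-(x - mu)^2 / (2 sigma^2))
    return math.exp(-((x - mu) ** 2) / (2.0 * sigma ** 2))

def integrate(f, a, b, n=100_000):
    # Composite trapezoidal rule on [a, b] with n subintervals;
    # math.fsum keeps the floating-point summation accurate.
    h = (b - a) / n
    interior = math.fsum(f(a + i * h) for i in range(1, n))
    return h * (0.5 * (f(a) + f(b)) + interior)

sigma = 2.0  # assumed example value
# Tails beyond +-12 sigma contribute only about exp(-72), which is negligible.
area = integrate(lambda x: unnormalized_gaussian(x, sigma=sigma), -12 * sigma, 12 * sigma)
constant = sigma * math.sqrt(2 * math.pi)

print("area under exp(-x^2 / (2 sigma^2)):", area)
print("sigma * sqrt(2 pi):               ", constant)
print("area after dividing by constant:  ", area / constant)
```

The area clearly differs from 1. Dividing by σ√(2π) brings it back to 1 up to rounding error, and that division is exactly the role of the normalization constant.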

-------

Timestamps:

00:00 Introduction

00:16 Why do we need the normalization?

01:23 Defining and simplifying the integral

02:30 No antiderivative? - Trick

03:38 2D Representation

06:46 Rotational Symmetry

07:04 Changing to Polar Coordinates

08:54 Finding the antiderivative

10:23 Finishing the integration

11:33 Outro
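The chapters above follow the classic trick for the Gaussian integral. First square the integral, then read the square as an integral over the 2D plane, and finally switch to polar coordinates. Below is a compact write-up of those steps. The notation is chosen here, so the video's own symbols may differ. The substitution u = (x − μ)/(σ√2) is an assumed choice for the "simplifying the integral" step.

```latex
\begin{aligned}
Z &= \int_{-\infty}^{\infty} \exp\!\left(-\frac{(x-\mu)^2}{2\sigma^2}\right)dx
   = \sigma\sqrt{2}\int_{-\infty}^{\infty} e^{-u^2}\,du = \sigma\sqrt{2}\,I,
   \qquad u = \frac{x-\mu}{\sigma\sqrt{2}}, \\
I^2 &= \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} e^{-(u^2+v^2)}\,du\,dv
     = \int_{0}^{2\pi}\int_{0}^{\infty} e^{-r^2}\,r\,dr\,d\theta,
   \qquad u = r\cos\theta,\; v = r\sin\theta, \\
\int_{0}^{\infty} e^{-r^2}\,r\,dr &= \left[-\tfrac{1}{2}e^{-r^2}\right]_{0}^{\infty} = \tfrac{1}{2}, \\
I^2 &= 2\pi \cdot \tfrac{1}{2} = \pi \quad\Rightarrow\quad I = \sqrt{\pi}, \\
Z &= \sigma\sqrt{2}\,\sqrt{\pi} = \sigma\sqrt{2\pi}.
\end{aligned}
```

On its own, e^{-u^2} has no elementary antiderivative. The extra factor r from the polar Jacobian (du dv = r dr dθ) is what makes the radial integral solvable. The π comes from integrating θ over the full circle, which is possible because the 2D integrand is rotationally symmetric.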

