Accuracy and Confusion Matrix | Type 1 and Type 2 Errors | Classification Metrics Part 1

In this video, we'll explore accuracy and the confusion matrix and unravel the concepts of Type 1 and Type 2 errors. Join us to understand how these metrics play a crucial role in evaluating the performance of classification models.
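As a rough illustration of the metrics named above, here is a minimal pure-Python sketch that counts the four confusion-matrix cells for a binary problem and derives accuracy from them. The labels and the helper name `binary_confusion_counts` are hypothetical and not taken from the video; in scikit-learn the same quantities are available through `sklearn.metrics.confusion_matrix` and `sklearn.metrics.accuracy_score`.

```python
# Hypothetical labels for illustration only: 1 = positive class, 0 = negative class.
y_true = [1, 0, 1, 1, 0, 0, 1, 0, 0, 1]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0, 0, 1]


def binary_confusion_counts(y_true, y_pred):
    """Return (tp, fp, tn, fn) for binary labels encoded as 0 and 1."""
    tp = fp = tn = fn = 0
    for actual, predicted in zip(y_true, y_pred):
        if actual == 1 and predicted == 1:
            tp += 1  # true positive
        elif actual == 0 and predicted == 1:
            fp += 1  # false positive: a Type 1 error
        elif actual == 0 and predicted == 0:
            tn += 1  # true negative
        else:
            fn += 1  # false negative: a Type 2 error
    return tp, fp, tn, fn


tp, fp, tn, fn = binary_confusion_counts(y_true, y_pred)

# Accuracy is the share of all predictions that were correct.
accuracy = (tp + tn) / (tp + fp + tn + fn)

print(f"TP={tp} FP={fp} TN={tn} FN={fn} accuracy={accuracy}")
```

A Type 1 error corresponds to the false-positive cell and a Type 2 error to the false-negative cell, so both can be read directly off the confusion matrix even though accuracy collapses them into a single number.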

============================

Do you want to learn from me?

============================

📱 Grow with us:

⌚Time Stamps⌚

00:00 - Intro

00:46 - Accuracy

06:25 - Code Example using scikit-learn (SKL)

08:35 - Accuracy of multi-classification problem

10:18 - How much accuracy is good?

13:16 - The problem with Accuracy Score

15:30 - Confusion Matrix

23:15 - Type 1 Error

25:53 - Confusion Matrix for Multi Classification Problem

30:03 - When is accuracy misleading?
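The last chapters in the list above concern multi-class confusion matrices and cases where accuracy misleads. One common such case is class imbalance, sketched below under assumed data: a model that always predicts the majority class scores high accuracy while finding none of the minority class. The helper names `build_confusion_matrix` and `accuracy_from_matrix` and the "healthy"/"sick" labels are hypothetical. Rows are actual classes and columns are predicted classes, which is also the layout scikit-learn's `confusion_matrix` uses.

```python
def build_confusion_matrix(y_true, y_pred, labels):
    """Build a square matrix: rows are actual classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for actual, predicted in zip(y_true, y_pred):
        matrix[index[actual]][index[predicted]] += 1
    return matrix


def accuracy_from_matrix(matrix):
    """Accuracy is the diagonal (correct predictions) divided by all predictions."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


# Hypothetical imbalanced data set: 95 "healthy" samples and 5 "sick" samples.
y_true = ["healthy"] * 95 + ["sick"] * 5
# A trivial model that always predicts the majority class.
y_pred = ["healthy"] * 100

cm = build_confusion_matrix(y_true, y_pred, labels=["healthy", "sick"])
acc = accuracy_from_matrix(cm)
sick_found = cm[1][1]  # actual "sick" samples predicted as "sick"

print(cm)          # full matrix, one row per actual class
print(acc)         # overall accuracy
print(sick_found)  # how many minority-class samples were caught
```

The same builder works for any number of classes by passing a longer `labels` list, so the off-diagonal cells show which classes are confused with which, detail that a single accuracy figure hides.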

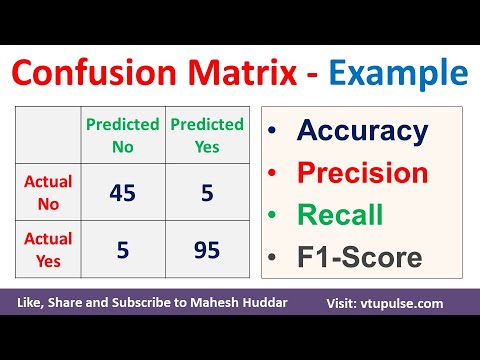

Confusion Matrix Solved Example Accuracy Precision Recall F1 Score Prevalence by Mahesh Huddar

Machine Learning Fundamentals: The Confusion Matrix

The Confusion Matrix : Data Science Basics

Precision, Recall, F1 score, True Positive|Deep Learning Tutorial 19 (Tensorflow2.0, Keras & Pyt...

Confusion Matrix ll Accuracy,Error Rate,Precision,Recall Explained with Solved Example in Hindi

Confusion Matrix for Multiclass Classification Precision Recall Weighted F1 Score by Mahesh Huddar

Confusion Matrix and Accuracy Ratios

Machine Learning Fundamentals: Sensitivity and Specificity

Machine Learning 31 - Evaluating the Performance of Classification Models

Confusion Matrix Explained

Confusion Matrix - Model Building and Validation

Tutorial 5 - Model Performance Confusion Matrix Accuracy Prediction

Accuracy, F1 Score, Confusion Matrix | Machine Learning with Scikit-Learn Python

Confusion Matrix TensorFlow | Confusion Matrix Explained With Example | 2023 | Simplilearn

Confusion Matrix | How to evaluate classification model | Machine Learning Basics

Accuracy Assessment of a Land Use and Land Cover Map

Confusion Matrix in Machine Learning ⚡️ Explained in 60 Seconds

Multi-Class confusion matrix. solved with Example

Confusion Matrix. Accuracy. Error rate. Recall. Precision - Machine Learning by #Moein

Tutorial 34- Performance Metrics For Classification Problem In Machine Learning- Part1

Confusion Matrix application in Machine Learning

Accuracy, Confusion Matrix, Precision, Recall and F1-Score All in One With Example | Data Science

Understanding Confusion Matrix Part 1
