M3 max 128GB for AI running Llama2 7b 13b and 70b

In this video, we run Llama models on the new M3 Max with 128GB of RAM and compare it with an M1 Pro and an RTX 4090 to see this chip's real-world performance for AI.

Cheap vs Expensive MacBook Machine Learning | M3 Max

M3 Max MacBook Pro with 128GB of RAM - who ACTUALLY needs this?!

RTX4090 vs M3 Max - machine learning - which is faster?

Apple M3 Max MLX beats RTX4090m

M3 Max Benchmarks with Stable Diffusion, LLMs, and 3D Rendering

Apple M3 Machine Learning Bargain

Maxed Out Macbook M3 Max for Stable Diffusion: A Powerhouse or a Costly Mistake?

Zero to Hero LLMs with M3 Max BEAST

Comparing The M3 Pro and M3 Max in AI, Gaming, and 3D workloads.

TFLOPS! RTX4090 vs M3 Max

Space Black M3 Max MacBook Pro Review: We Can Game Now?!

M3 MAX MacBook Pro 3 Months later… MAXED OUT! (but was it worth it?)

The MacBook Pro 16 M4 MAX Review - Who Needs This!? 🔥

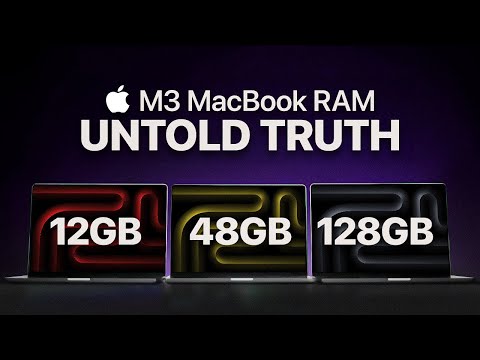

How much RAM do you ACTUALLY need in your M3 Macbook? [2024]

Apple M3 Max MacBook Pro - A Long Term User Review

MLX Mixtral 8x7b on M3 max 128GB | Better than chatgpt?

Maxed Out M4 Max MacBook Pro Unboxing, Test and Comparisons | Nano Texture Comparison

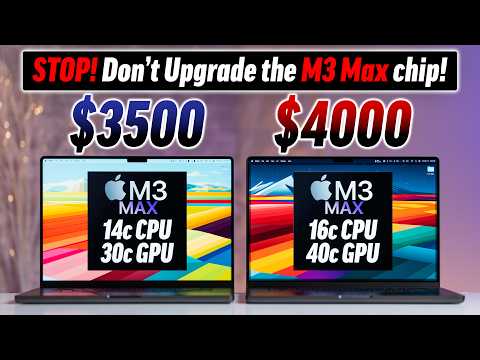

Base vs Top M3 Max MacBook Pro - ULTIMATE Comparison!

M3 MacBook Pro — How much RAM do you ACTUALLY need?

Test MacBook Pro 16' M3 Max - Impressionnant !

MacBook Pro M4 Max FULL Review - INSANE Performance!

APPLE DISTRUGGE I PC? Nuovo MacBook M3 Max

I ordered the MAXED out M3 Max Macbook Pro (and why you probably shouldn’t)!
