Why Apple Uses Google TPUs While the World Relies on NVIDIA Chips for AI Training

While most of the industry relies on NVIDIA chips for AI model training, Apple is breaking the mold by choosing Google's Tensor Processing Units (TPUs) instead. As part of this move, Apple is renting server capacity from cloud providers rather than building its own data centers, which could change the cost and efficiency of its AI processing. Could this decision challenge NVIDIA's dominance in the AI chip market? Watch this video to learn more about Apple's strategy and its implications for the future of AI.

#google #apple #nvidia #ai #artificialintelligence

Mint is an Indian financial daily newspaper published by HT Media. The Mint YT Channel brings you cutting-edge analysis of the latest business and financial news. With in-depth market coverage, explainers, and expert opinions, we break down and simplify business news for you.

Nvidia GPUs vs. Google TPUs | Sharp Tech with Ben Thompson

Apple Chooses Google TPUs Over Nvidia for its AI Infrastructure

Google TPU & other in-house AI Chips

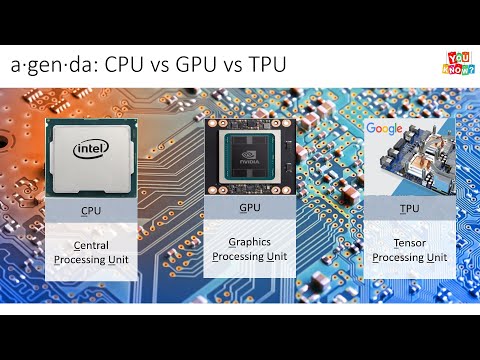

CPU vs GPU vs TPU explained visually

CPU vs GPU vs TPU vs DPU vs QPU

Tesla Dojo, TPU, NVIDIA & hardware optimized for machine learning | George Hotz and Lex Fridman

Tensor Processing Units (TPUs) (13.4)

Cloud TPU v4: Fast, flexible, and easy-to-use ML accelerators

What is CPU,GPU and TPU? Understanding these 3 processing units using Artificial Neural Networks.

WhatsApp tips - Lock access to WhatsApp for everyone #shorts

Google® TPU Dwell Time Demo built with i.MX 8M Applications Processor

How Amazon, Apple, Facebook and Google manipulate our emotions | Scott Galloway

A few things to know from Google I/O 2021 in under 9 minutes.

Accelerate AI inference workloads with Google Cloud TPUs and GPUs

nVidia's DGX Supercomputer will DESTROY Google TPUs in AI

Google Assistant 6 tpu 3.0 ( 6 New Voices) are Introduced

CPU vs GPU vs DPU vs TPU vs QPU Differences | A Quantumfy Review

New Apple Wallet vs. New Google Wallet: (Watch the Reveals)

Apple Pay vs. Samsung Pay vs. Google Pay: Which is best?

What Is Tensor Processing Unit (TPU) Hindi/Urdu

GPU vs TPU vs NPU — How do these different computer chips affect Tesla’s FSD and AI training?

CPU vs GPU vs TPU vs NPU

AI News: OpenAI Partnerships, Google TPUs for Huggingface, Meta MobileLLM, Google M...
